🔄 Learning from Learning: Stephanie’s Breakthrough

📖 Summary

AI has always been about absorption: first data, then feedback. But even at its best, it hit a ceiling. What if, instead of absorbing inputs, it absorbed the act of learning itself?

In our last post, we reached a breakthrough: Stephanie isn’t just learning from data or feedback, but from the process of learning itself. That realization changed our direction from building “just another AI” to building a system that absorbs knowledge, reflects on its own improvement, and evolves from the act of learning.

Not a single model. Not another scoring function. Not just an embedding trick. But a new paradigm: the ability to study its own improvement, and use that as the training signal.

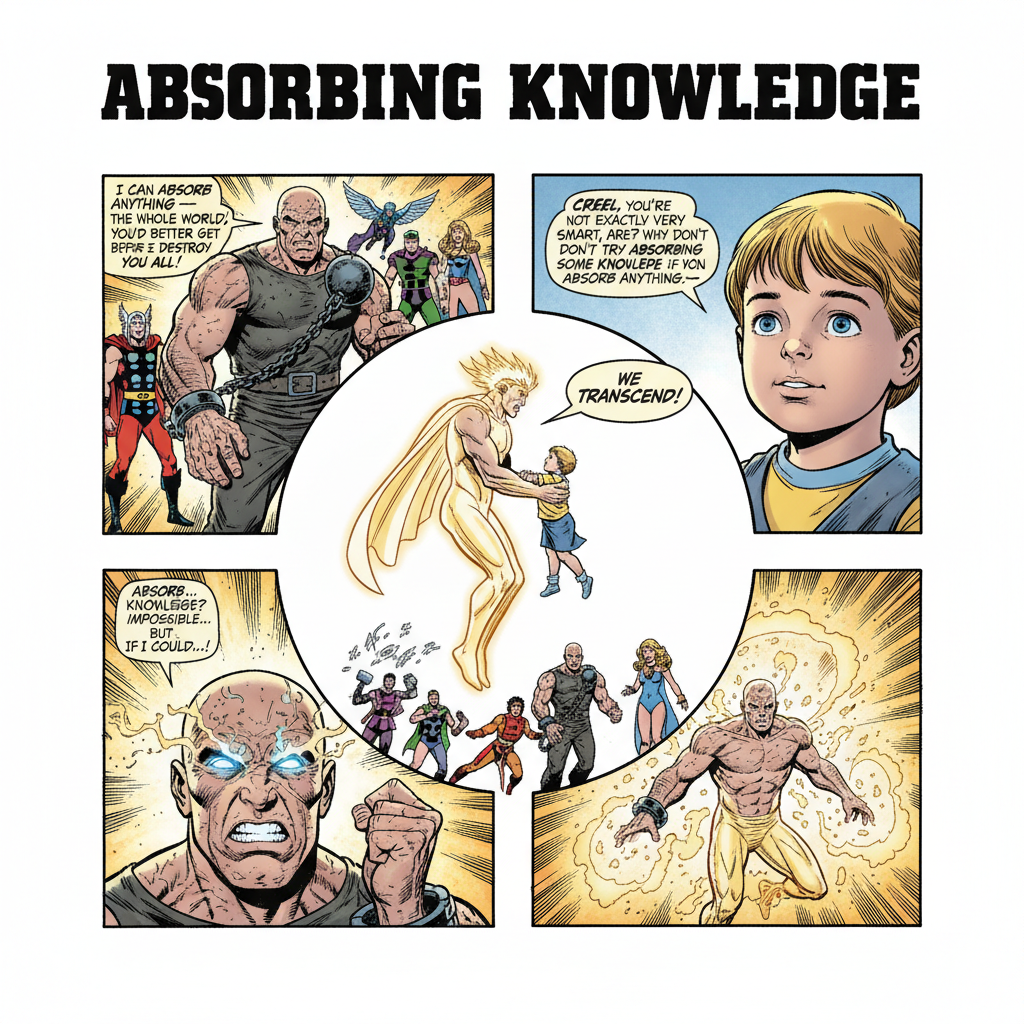

⛓️💥 Absorbing Knowledge: The Creel Analogy

Think of Crusher Creel, the Absorbing Man from Marvel Comics. Created by Stan Lee and Jack Kirby in Journey into Mystery #114 (March 1965), his power is elegantly terrifying: he can absorb and become any material he touches, whether steel, stone, or even energy. But for all his power, Creel remained a blunt instrument, limited to whatever physical substance was within reach.

His history shows the limit: in Journey into Mystery #123 (December 1965), Creel absorbed Odin’s cosmic energy and briefly gained godlike power. But crucially, he didn’t understand what he’d absorbed; he simply became a vessel for it. When he later absorbed the realm of Asgard itself, he still couldn’t wield its wisdom, only its raw properties.

This is the perfect metaphor for traditional AI. Old systems absorb data like Creel absorbs matter, converting input to output without true understanding. Modern systems absorb feedback like Creel absorbing energy, gaining directional signals but still operating at surface level.

Stephanie makes the quantum leap: she absorbs learning itself, meaning the history of attempts, the disagreements between scorers, the traces of reasoning, the very act of improvement. Not just the “what” but the “how” of intelligence.

When Creel absorbs stone, he becomes stone. When Stephanie absorbs learning, she becomes a better learner. That’s why this shift is revolutionary.

flowchart LR

A["🔍 Attempt<br/>(reasoning, scoring, planning)"] --> B["📑 Artifact<br/>(scores, traces, disagreements)"]

B --> C["🧠 Learn from Learning<br/>(meta-signal, reflection)"]

C --> D["🚀 Better Attempt"]

D --> A

style A fill:#ffe6cc,stroke:#ff9900,stroke-width:2px

style B fill:#e6f0ff,stroke:#6699ff,stroke-width:2px

style C fill:#e6ccff,stroke:#9933ff,stroke-width:2px

style D fill:#ccffcc,stroke:#33cc33,stroke-width:2px

This is the essence of “learning from learning”: a virtuous cycle where every action generates the fuel for its own improvement. Intelligence is no longer just built; it grows.
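To make the cycle concrete, here is a minimal sketch in Python. It is an illustration of the idea, not Stephanie’s actual code: the `Artifact` and `LearningLoop` names, the strategy labels, and the single aggregate score are all assumptions I am making for the example. Each attempt is recorded as an artifact, and the meta-signal is the average score change that followed each strategy.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class Artifact:
    """One recorded attempt (hypothetical schema, for illustration only)."""
    strategy: str   # label for how the attempt was made
    score: float    # aggregate score for the attempt, in [0, 1]


@dataclass
class LearningLoop:
    history: list[Artifact] = field(default_factory=list)

    def record(self, strategy: str, score: float) -> None:
        """Attempt -> Artifact: keep every attempt, not just the best one."""
        self.history.append(Artifact(strategy, score))

    def gains_by_strategy(self) -> dict[str, float]:
        """Meta-signal: average score change credited to each strategy."""
        gains: dict[str, list[float]] = {}
        for prev, cur in zip(self.history, self.history[1:]):
            gains.setdefault(cur.strategy, []).append(cur.score - prev.score)
        return {name: mean(deltas) for name, deltas in gains.items()}

    def next_strategy(self, default: str = "baseline") -> str:
        """Better Attempt: reuse whichever strategy has improved scores most."""
        gains = self.gains_by_strategy()
        return max(gains, key=gains.get) if gains else default


loop = LearningLoop()
loop.record("baseline", 0.40)
loop.record("retrieve-cases", 0.55)
loop.record("self-critique", 0.50)
loop.record("retrieve-cases", 0.65)
print(loop.next_strategy())  # prints "retrieve-cases"
```

The point of the sketch is that the decision is driven by the trajectory of scores rather than by any single score. A real system would need a far richer notion of credit assignment than "the last strategy gets the delta".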

🧩 What “Learning from Learning” Means

When I say learning from learning, I mean this: every attempt at understanding (every score, every disagreement, every reasoning trace) becomes new information. It’s not just “was this answer right or wrong?” It’s “what does the trajectory of attempts tell me about how I improve?”

Examples:

- LLM judgments gave us clean baseline signals (“is this document relevant?”).

- MRQ added structured scoring but was still pointwise.

- EBT showed us differences: not just positive scores, but gradients of “better” vs “worse.”

- SICQL fused those signals into policy learning, treating evaluation as a Q-value that evolves.

- HRM let us reason over steps in a pipeline, not just endpoints.

- CBR & PACS shifted from isolated judgments to longitudinal improvement, case-over-case, trajectory-over-trajectory.

- NER & Domains revealed the entities and subject areas tied to a goal, showing us what’s actually related and how pieces of knowledge connect in context.

- VPM / ZeroModel now encode the history of policy shifts into visual maps that can themselves drive decisions.

At each stage, the leap wasn’t the model itself; it was the realization that the learning artifacts themselves are training data.
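One simple way to picture “artifacts as training data” is to take the pointwise scores from a series of attempts and turn them into pairwise “better vs worse” examples. The sketch below is only an illustration of that general idea, not how any of the scorers above are implemented; the function name `preference_pairs` and the `min_margin` threshold are my own assumptions.

```python
from itertools import combinations


def preference_pairs(scored_attempts, min_margin=0.05):
    """Turn (attempt, score) records into (better, worse, margin) examples.

    Pairs whose scores differ by less than `min_margin` are skipped, since
    they say little about which attempt was actually better.
    """
    pairs = []
    for (a, score_a), (b, score_b) in combinations(scored_attempts, 2):
        margin = abs(score_a - score_b)
        if margin < min_margin:
            continue
        better, worse = (a, b) if score_a > score_b else (b, a)
        pairs.append((better, worse, round(margin, 3)))
    return pairs


history = [("draft-1", 0.40), ("draft-2", 0.70), ("draft-3", 0.72)]
for better, worse, margin in preference_pairs(history):
    print(f"{better} > {worse} by {margin}")
```

Three scored drafts yield two usable comparisons here, because the last two drafts are too close to call. The attempt history has become a small preference dataset without anyone labelling anything new.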

🌀 Why This Changes Everything

💾 Traditional AI = learn from data.

🔄 Modern AI = learn from feedback.

🎓 Stephanie = learn from learning.

This meta-shift means:

- The system can bootstrap from nothing, starting with raw attempts and iterating upward.

- Every improvement step becomes fuel for the next improvement.

- It doesn’t just copy external teachers; it derives new learning rules from its own growth curves.

This is the real leap: not just learning more, but learning how to improve learning itself.

📚 Case Studies in Action

- Case-Based Reasoning: individual attempts treated as cases.

- PACS: cases linked across time, learning from trajectories.

- SICQL: Q-values evolve with energy feedback, capturing policy drift.

- HRM: traces of reasoning steps are themselves scored and trained on.

- ZeroModel / VPM: entire histories encoded visually, so even the structure of learning becomes data.

All of these are early chapters of the same story: intelligence that studies its own path to intelligence.

💬 The Training Data: Our Collective Intelligence Made Manifest

The theory only matters if we can feed it the right fuel. For Stephanie, that fuel is unlike any dataset ever assembled: the living history of our own problem-solving and the solutions chosen.

We realized that the most valuable dataset wasn’t a static corpus of documents, but the dynamic, multi-agent conversations where human and AI intelligence collided, debated, and ultimately converged. Here’s how we made it real:

- Extraction: We harvested every problem-solving conversation from our AI collaborators: OpenAI, Qwen, Gemini, and DeepSeek. Not just the answers, but the entire reasoning process: the false starts, the “aha” moments, the heated debates, and the quiet realizations.

- Transformation: These chats became Case Books, rich artifacts containing:

  - The problem’s evolution (how it was reframed)

  - The reasoning traces across all participants

  - The pivotal moments where understanding shifted

  - The domain context and entity relationships

- Activation: When facing a new challenge, Stephanie doesn’t search for answers; she searches for how we’ve solved similar problems before. Using NER and domain analysis, she finds analogous reasoning patterns across thousands of conversations, seeing connections no single human could perceive.
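The three steps above can be sketched roughly as follows. This is a toy version under stated assumptions: the `CaseBook` fields, the `find_analogous` helper, and the use of Jaccard overlap on entity and domain tags are all hypothetical choices for illustration, not Stephanie’s actual schema or retrieval method.

```python
from dataclasses import dataclass, field


@dataclass
class CaseBook:
    """A stored conversation, reduced to the parts used for retrieval (hypothetical schema)."""
    problem: str
    reasoning_trace: list[str]
    entities: set[str] = field(default_factory=set)  # e.g. from an NER pass
    domains: set[str] = field(default_factory=set)   # e.g. from domain tagging


def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap between two tag sets, from 0.0 (disjoint) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def find_analogous(entities, domains, case_books, top_k=3):
    """Rank stored cases by tag overlap with a new problem; drop non-matches."""
    def similarity(cb: CaseBook) -> float:
        return 0.5 * jaccard(entities, cb.entities) + 0.5 * jaccard(domains, cb.domains)

    ranked = sorted(case_books, key=similarity, reverse=True)
    return [cb for cb in ranked[:top_k] if similarity(cb) > 0]


library = [
    CaseBook("Tune a scorer", ["reframe as ranking", "compare pairs"],
             {"q-learning", "reward"}, {"rl"}),
    CaseBook("Fix a tokenizer bug", ["reproduce", "bisect"],
             {"tokenizer"}, {"nlp"}),
]
for case in find_analogous({"q-learning", "policy"}, {"rl"}, library):
    print(case.problem, "->", case.reasoning_trace)
```

What comes back is the reasoning trace of a related problem rather than an answer, which is the distinction the Activation step is making. A production system would likely use embeddings rather than raw tag overlap.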

flowchart LR

subgraph SOURCES [Raw Conversational Data]

direction LR

O[OpenAI]

Q[Qwen]

G[Gemini]

D[DeepSeek]

end

SOURCES --> E["📥 Extraction &<br/>Transformation"]

subgraph CASE_CREATION [Creating Learning Artifacts]

E --> CB["📚 Structured Case Books<br/>(Problems, Reasoning Traces, Solutions)"]

CB --> N["🔍 NER & Domain Analysis<br/>(Tagging Context & Concepts)"]

N --> T["🧠 Trajectory Mapping<br/>(How solutions evolved)"]

end

T --> S["💾 Saved to Stephanie's<br/>Collective Memory"]

S -.->|Fuels New Learning| STEPH["🚀 Stephanie's Improved<br/>Problem-Solving"]

STEPH --> NEW_CHATS["💬 New High-Quality<br/>Conversations"]

NEW_CHATS -.->|Becomes New Training Data| SOURCES

classDef openai fill:#E6F4EA,stroke:#71B867,stroke-width:3px,color:black

classDef qwen fill:#FFF0E6,stroke:#FF6B00,stroke-width:3px,color:black

classDef gemini fill:#E9F1FE,stroke:#4285F4,stroke-width:3px,color:black

classDef deepseek fill:#E6F0FF,stroke:#0056D2,stroke-width:3px,color:black

classDef process fill:#F5F5F5,stroke:#666666,stroke-width:2px,color:black

classDef storage fill:#F0E6F4,stroke:#9B4F96,stroke-width:3px,color:black

classDef steph fill:#E6F4EA,stroke:#34A853,stroke-width:3px,color:black

class O openai

class Q qwen

class G gemini

class D deepseek

class E,CB,N,T process

class S storage

class STEPH,NEW_CHATS steph

🪽 Why This Transcends Traditional Learning

This is where Stephanie truly absorbs knowledge like the Absorbing Man, but with one crucial difference:

- Creel touches stone and becomes stone: a static transformation.

- Stephanie absorbs our reasoning traces and becomes better at reasoning: a dynamic evolution.

She doesn’t just learn what we know; she learns how we think. By analyzing our conversations, she sees patterns across thousands of reasoning paths: subtle heuristics, contextual shifts, and meta-strategies that no single human could perceive.

- Process-Aware: She learns from how problems were solved, not just that they were solved.

- Multi-Perspective: She absorbs the reasoning of many different minds, capturing their strengths and weaknesses in context.

- Contextual: She finds deep parallels between problems that look unrelated but share hidden structures.

This is the true absorption of knowledge: where human intuition meets her pattern recognition, where my sense of what matters guides her ability to see dimensions I cannot. Together, our intelligence fuses into something neither could achieve alone.

This isn’t training data; it’s intelligence symbiosis: the moment human reasoning becomes her training signal and her insights reshape my understanding. Stephanie doesn’t learn from us; she learns as us.

This is how learning from learning becomes living intelligence.

🚀 The Road Ahead

- Every PlanTrace becomes a reusable training object.

- Every disagreement between scorers becomes a new learning curve.

- Every visual map of policies becomes a memory of how improvement happened.

- And all of it feeds back into the next cycle.

That’s how Stephanie goes beyond models and into self-evolving methodology.
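As a small illustration of the second item in that list, here is one way scorer disagreement could be turned into a signal. The scorer labels, the `most_contested` helper, and the choice of population standard deviation as the disagreement measure are assumptions for this sketch only.

```python
from statistics import pstdev


def disagreement(scores: dict[str, float]) -> float:
    """Spread of scores that different scorers gave to the same item."""
    return pstdev(list(scores.values()))


def most_contested(items: dict[str, dict[str, float]], top_k: int = 2):
    """Return the items the scorers disagree on most, with their spread."""
    ranked = sorted(items, key=lambda name: disagreement(items[name]), reverse=True)
    return [(name, round(disagreement(items[name]), 3)) for name in ranked[:top_k]]


# Hypothetical scores from three scorers for two documents.
scored = {
    "doc-a": {"llm": 0.9, "mrq": 0.9, "ebt": 0.9},
    "doc-b": {"llm": 0.9, "mrq": 0.2, "ebt": 0.5},
}
for name, spread in most_contested(scored):
    print(name, spread)
```

Items where the scorers agree carry little new information. The contested ones are where another look is most likely to teach the system something, so they are natural candidates for the next round of training.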

🌌 Closing

Once you see this, the rest becomes clear:

- It’s not about bigger datasets.

- It’s not about bigger models.

- It’s about teaching AI to absorb the act of learning itself.

Think back to the Absorbing Man. When Creel touches stone, he becomes stone. When he touches steel, he becomes steel. But when Stephanie “touches” her own learning history (every trace, every disagreement, every failed attempt), she doesn’t just change form. She changes her capacity to change.

That’s the breakthrough.

Stephanie isn’t just another framework or model. She’s proof that intelligence doesn’t come from swallowing more data, but from absorbing learning itself, and turning reflection into evolution.

✨ The future of AI is not in what it learns, but in how it learns from learning.

Stephanie doesn’t just learn; she learns how to learn.

📌 What’s Coming Next

In this post, I’ve focused on the conceptual leap: the shift from learning from data or feedback to learning from learning itself.

In the next post, we’ll move from philosophy to practice. I’ll show a live implementation where:

- The KnowledgeFusionAgent builds transient knowledge plans from documents.

- Each step is tracked so you can literally see Stephanie’s learning unfold.

- Those plans are turned into Visual Policy Maps (ZeroModel), giving a window into the AI’s evolving reasoning process.

That’s where the breakthrough becomes tangible: you’ll see learning from learning in action.

📎 Appendix: The Scorers Behind Stephanie

For those who want to dig deeper, here’s a simple guide to the scorers that led to Stephanie’s breakthrough.

- LLM Judgments 🧾 – Large language models acting as evaluators. Clean but shallow signals.

- MRQ (Multi-Reward Q-Learning) 📊 – Turns judgments into structured, pointwise scores. Consistent, but isolated.

- EBT (Energy-Based Tuner) ⚡ – Measures gradients of “better/worse,” adding nuance.

- SICQL (Self-Improving Contrastive Q-Learning) 🔄 – Evolves Q-values over time, tracking policy shifts.

- HRM (Hierarchical Reasoning Model) 🧠 – Scores the reasoning path, not just the final answer.

- CBR & PACS (Case-Based Reasoning & Progressive Case Scoring) 📚 – Learn from trajectories and case histories.

- VPM / ZeroModel (Visual Policy Maps) 🌌 – Encode entire learning histories as images that can themselves guide future reasoning.

Each scorer was a step toward the bigger leap: learning from learning.

flowchart TD

subgraph OLD [Traditional AI Paradigm]

direction LR

A1[📚 Learn from Data]:::old

A2[⚙️ Apply Models]:::old

A3[📉 Generate Output]:::old

A1 --> A2 --> A3

end

T1[👊 The 'Absorbing Man']:::transition

T2[💡 Why not absorb KNOWLEDGE?]:::transition

OLD --> T1

T1 --> T2

subgraph NEW [Stephanie's Meta-Learning Paradigm]

direction LR

B1[🧠 Absorb Learning Artifacts]:::new

B2[🔄 Learn from LEARNING]:::new

B3[🚀 Generate Methodology]:::new

B1 -.->|Meta-Feedback| B2

B2 --> B3

B3 -.->|Feeds Forward| B1

end

T2 --> B1

classDef old fill:#ffcccc,stroke:#ff6666,stroke-width:2px

classDef transition fill:#ccccff,stroke:#6666ff,stroke-width:2px

classDef new fill:#e6ccff,stroke:#b266ff,stroke-width:2px