The Nexus Blossom: How AI Thoughts Turn into Habits

📓 Summary

Turning raw text into a living graph of thought and proving it works by watching it think.

tl;dr. We built a minimal viable thinking loop:

- Convert any input (chat, docs, code) into a Scorable – the fundamental unit of thought, enriched with goal, domains, entities, and embeddings.

- Blossom the idea: generate a small forest of candidate continuations around each Scorable, sharpen them, and score them across multiple dimensions (alignment, faithfulness, clarity, coverage, etc.).

- Weave the best candidates into Nexus, a persistent thought graph where repeated successful paths are reinforced into cognitive “habits” and weaker branches quietly die away.

- Move everything over a ZeroMQ Event / Knowledge Bus (plus a shared cache), so agents publish/subscribe to thought events asynchronously instead of marching through a single, brittle pipeline.

- Mirror each run into Visual Thought Maps (VPM tiles and garden filmstrips) and run A/B experiments (random baselines vs Nexus+Blossom) so we can see and measure improvements in reasoning, not just in final answers.

This isn’t a pretty graph of data; it’s a picture of thinking itself.

The rest of the post shows how these pieces Scorables, Blossom, Nexus, the event bus, and VPMs combine into a system that doesn’t just process information once, but practices and improves its own reasoning over time.

💭 Thoughts That Grow: Building a Network That Forms Habits

Think about how you learn your way around a city.

At first, every journey needs effort: you check maps, read street names, maybe get lost once or twice. Over time, certain routes become familiar. You stop thinking about every turn; you just go. Your brain has turned a messy search over many possibilities into a few trusted paths that feel like second nature.

That’s exactly what we’re trying to build in software.

Inside Stephanie, the “city” is a graph of thoughts. Places you can go are nodes (Scorables, ideas, partial answers). The ways to get there are edges (reasoning steps, transformations, checks). Each time the system solves a problem, it’s effectively walking a route through that city. When a route works well, we don’t want to throw it away we want to reinforce it, remember it, and make it easier to reuse next time.

Our job is to:

- Find good routes through the space of ideas (Blossom exploring alternatives),

- Strengthen the ones that reliably lead to good outcomes (Nexus reinforcing edges),

- And, most importantly, apply those routes when new problems show up that “live in the same part of the city”.

In other words, we’re not just drawing a map of where the model has been. We’re teaching it which paths are worth turning into mental motorways and giving it a way to take those faster roads on purpose.

Thesis. We’re building a system that treats thinking as a growing network of thoughts. When one thought reliably leads to another, those paths strengthen; when we revisit the same routes, habits emerge. Our goal is to make those dynamics explicit and operational so the system can improve its reasoning the way a brain does: by activating, reinforcing, and rewiring useful patterns.

Why this matters. Great answers aren’t one-shot. They emerge from micro-steps recall, transform, compare, verify played over a graph of related ideas. If we can see and score those steps, we can promote the best subgraphs into habits. Over time, the system spends less energy searching and more time compounding what it already knows works.

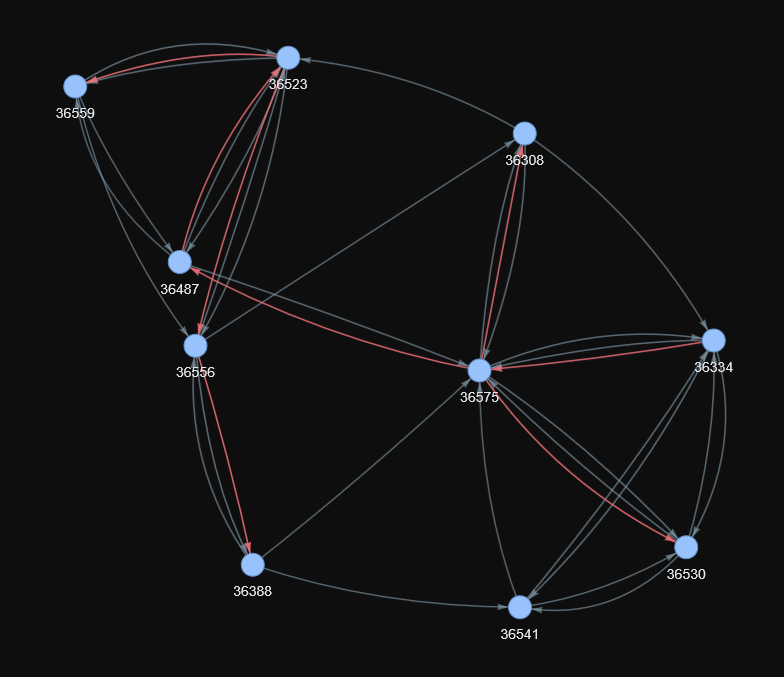

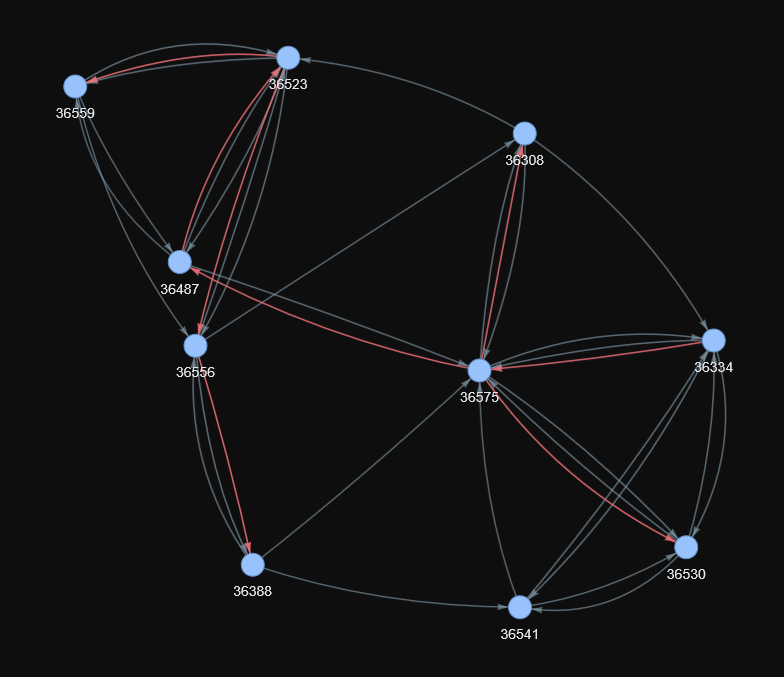

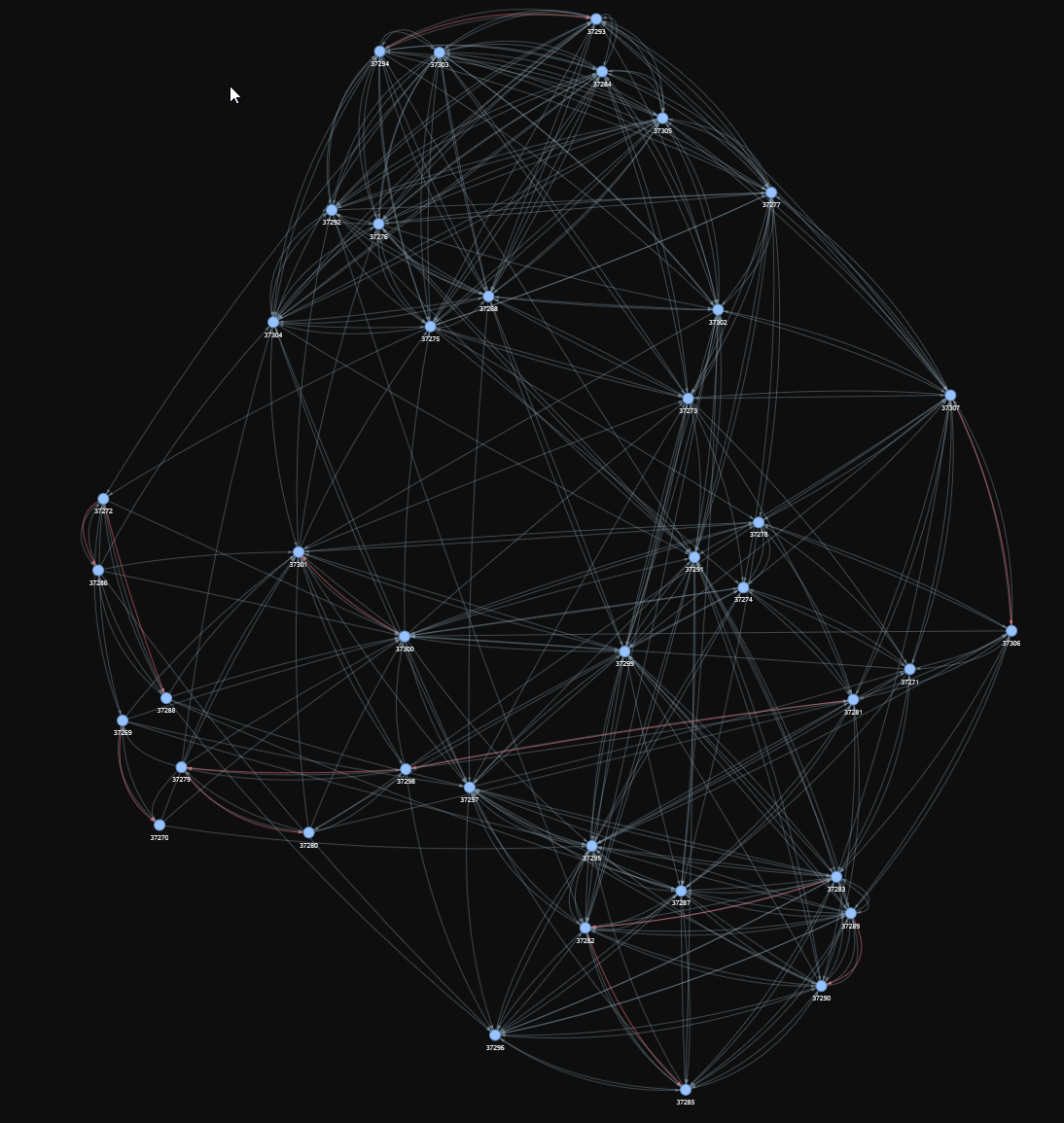

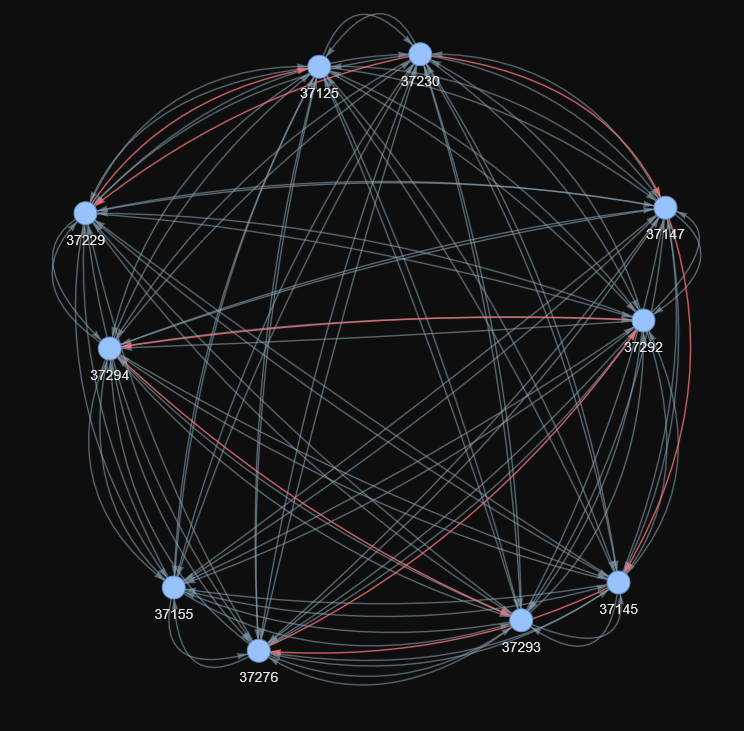

Graph legend. Each blue dot is a Scorable (a document / thought). Grey arrows show all the local k-nearest-neighbour similarity links; red arrows highlight the backbone edges (a minimum-spanning “spine” through the cluster), i.e. the core routes Stephanie prefers when navigating this neighbourhood.

🔨 What we’re building

This section gives the minimum mental model and interfaces so readers know what “Nexus,” “Blossom,” “Scorable,” and the Scorable Processor are and how they click together.

🎥 The Cast: Stephanie’s Cognitive Architecture

-

🕸️ Nexus (Thought Graph)

Stephanie’s long-term memory. Stores every thought as a node and every connection as an edge. Records exploration and reinforces successful paths – turning good thinking into habits. -

🌸 Blossom (Grow & Choose Engine)

The creative explorer. Takes a parent thought and generates K candidates, sharpens them, scores them, and returns the best paths forward, along with a reasoning trace. Emits garden events so you can watch ideas bloom. -

⚡ Event Bus & Knowledge Bus (Cognitive Nervous System)

The wiring between everything. Routes thought impulses between processors, Blossom workers, Nexus, scorers, and VPM. Makes cognition asynchronous and decoupled: thoughts become messages on subjects, and many listeners can react in parallel. -

🎯 Scorable (Atomic Thought Unit)

The smallest piece of thinking we can evaluate – a document chunk, snippet, plan step, or answer. Has a stable ID, text/payload, type, and rich attributes so scoring and Nexus stay consistent. -

🏭 Scorable Processor (Cognitive Factory)

The universal adapter. Normalizes heterogeneous inputs into Scorables, attaches goals/context, extracts features (domains, entities, embeddings), and guarantees stable IDs for downstream scoring and graph updates. -

📊 Scoring (Multi-Dimensional Judges)

The quality layer. Scores each Scorable across dimensions like alignment, faithfulness, coverage, clarity, coherence, then fuses them into an overall signal. Drives both selection (who wins) and reinforcement (what gets promoted). -

🎨 Visual Thought Maps (VPMs & Garden Viewer)

The window into Stephanie’s mind. Turn Nexus + Blossom activity into images and filmstrips, so you can literally see thoughts branching, candidates competing, and habits forming as paths are reinforced.

✨ How the Ensemble Works Together

This stack forms a closed loop of improvement:

- 🎯 Thoughts enter as Scorables.

- 🌸 Blossom explores multiple continuations around each one.

- 📊 Scoring evaluates candidates across dimensions.

- 🕸️ Nexus reinforces the winners and updates the graph.

- 🎨 VPMs make progress visible as graphs and filmstrips.

- ⚡ The Bus keeps everything flowing, carrying events between all of the above.

The result is a system that doesn’t just process information once – it practices its own thinking, turning repeated good patterns into durable cognitive habits.

🧳 A Single Thought’s Journey

Now that we’ve met the cast, here’s how a single pass through the system actually looks.

A goal and some raw input are turned into a Scorable, expanded by Blossom, scored and sharpened, written into Nexus as updated graph structure, and finally rendered as visual thought maps. All of this is glued together by the Event / Knowledge Bus, which carries events between agents so the whole loop stays asynchronous and decoupled.

flowchart LR

%% ===== STYLE DEFINITIONS =====

classDef input fill:#8A2BE2,stroke:#6A0DAD,stroke-width:3px,color:white

classDef processor fill:#4169E1,stroke:#2F4FDD,stroke-width:2px,color:white

classDef scorable fill:#00BFFF,stroke:#0099CC,stroke-width:2px,color:white

classDef bus fill:#FFD700,stroke:#FFA500,stroke-width:3px,color:black

classDef blossom fill:#FF69B4,stroke:#FF1493,stroke-width:2px,color:white

classDef scoring fill:#32CD32,stroke:#228B22,stroke-width:2px,color:white

classDef memory fill:#9D67AE,stroke:#7D4A8E,stroke-width:2px,color:white

classDef visualization fill:#FF6347,stroke:#DC143C,stroke-width:2px,color:white

%% ===== INPUT & SCORABLE LAYER =====

subgraph IN["🌐 Input & Scorable Layer"]

G["🎯 Goal Text"]

R["📥 Raw Input<br/>📄 text / 💻 code / 📚 doc"]

SP["🏭 Scorable Processor"]

SCOR["🎯 Scorable<br/>✨ Seed Thought"]

end

%% ===== EVENT BUS =====

subgraph BUS["⚡ Event & Knowledge Bus"]

direction TB

EB["🛰️ Event Bus<br/>📨 pub/sub + 🔄 request/reply<br/>💫 Async & Decoupled"]

subgraph BUS_CHANNELS["📡 Bus Channels"]

B1["thoughts.*"]

B2["blossom.*"]

B3["scorable.*"]

B4["nexus.*"]

end

end

%% ===== BLOSSOM RUNNER =====

subgraph BR["🌸 Blossom Runner Engine"]

VPM_HINT["🎨 VPM Jitter Hint<br/>💡 Optional Novelty"]

ATS["🌳 Agentic Tree Search<br/>🔍 M × N × L Rollout"]

K["🏆 Top-K Winners<br/>⭐ Best Candidates"]

SH["✨ Sharpen Loop<br/>🔧 LLM + Scorers"]

end

%% ===== SCORING LAYER =====

subgraph SC["📊 Multi-Dimensional Scoring"]

SCORE["⚖️ Scoring Service<br/>🎯 MRQ / SICQL / HRM / SVM"]

subgraph DIMS["📈 Quality Dimensions"]

D1["🎯 Alignment"]

D2["📚 Faithfulness"]

D3["🌐 Coverage"]

D4["💎 Clarity"]

D5["🔗 Coherence"]

end

end

%% ===== MEMORY & VISUALIZATION =====

subgraph MEM["💾 Memory & Visualization"]

NX["🕸️ Nexus Graph<br/>🧠 Nodes + 🔗 Edges"]

BS["📦 BlossomStore<br/>🌳 Run Artifacts"]

VPM["🎨 Visual Thought Maps<br/>📽️ VPMs & Garden Viewer"]

end

%% ===== DATA FLOW CONNECTIONS =====

%% Input → Scorable Processing

R -.->|"📥 normalize<br/>any input"| SP

G -.->|"🎯 attach goal<br/>+ context"| SP

SP -.->|"🏭 produce<br/>enriched scorable"| SCOR

%% Scorable + Goal into Blossom

SCOR -.->|"🌱 seed plan<br/>+ metrics"| ATS

G -.->|"📝 compose prompt<br/>+ strategy"| ATS

%% VPM Guidance for Novelty

VPM -.->|"🎨 Jitter hint<br/>boost novelty"| VPM_HINT -.-> ATS

%% Blossom Expansion + Sharpening

ATS -.->|"🌳 rollout forest<br/>M×N×L dimensions"| K

K -.->|"🔄 iterate<br/>improve quality"| SH

SH -.->|"✨ refined<br/>candidates"| K

%% Scoring & Promotion

K -.->|"📊 score candidates<br/>multi-dimensional"| SCORE

SCORE -.->|"🏆 promote winners<br/>Δ ≥ margin"| NX

%% Persistence & Storage

ATS -.->|"💾 nodes + edges<br/>episode structure"| BS

SH -.->|"📈 improved text<br/>+ scores"| BS

BS -.->|"🔄 write / update<br/>graph memory"| NX

%% Visualization & Feedback

NX -.->|"🎬 encode runs<br/>+ decisions"| VPM

%% ===== EVENT BUS WIRING =====

ATS -.-|"🌼 garden events<br/>node/edge updates"| EB

SH -.-|"⚡ progress events<br/>sharpening steps"| EB

NX -.-|"💓 pulses<br/>habit formations"| EB

EB -.-|"📺 real-time<br/>visualization"| VPM

%% ===== APPLY STYLES =====

class IN input

class BUS bus

class BR blossom

class SC scoring

class MEM memory

class G,R,SP,SCOR processor

class VPM_HINT,ATS,K,SH blossom

class SCORE,DIMS scoring

class NX,BS memory

class VPM visualization

class EB,BUS_CHANNELS bus

%% ===== SPECIAL HIGHLIGHTS =====

linkStyle 0 stroke:#4169E1,stroke-width:3px

linkStyle 1 stroke:#4169E1,stroke-width:3px

linkStyle 2 stroke:#4169E1,stroke-width:3px

linkStyle 3 stroke:#FF69B4,stroke-width:3px

linkStyle 4 stroke:#FF69B4,stroke-width:3px

linkStyle 5 stroke:#FF69B4,stroke-width:3px

linkStyle 6 stroke:#FF69B4,stroke-width:3px

linkStyle 7 stroke:#FF69B4,stroke-width:3px

linkStyle 8 stroke:#32CD32,stroke-width:3px

linkStyle 9 stroke:#32CD32,stroke-width:3px

linkStyle 10 stroke:#9D67AE,stroke-width:3px

linkStyle 11 stroke:#9D67AE,stroke-width:3px

linkStyle 12 stroke:#9D67AE,stroke-width:3px

linkStyle 13 stroke:#FF6347,stroke-width:3px

linkStyle 14 stroke:#FFD700,stroke-width:3px

linkStyle 15 stroke:#FFD700,stroke-width:3px

linkStyle 16 stroke:#FFD700,stroke-width:3px

linkStyle 17 stroke:#FFD700,stroke-width:3px

%% ===== SUBGRAPH STYLING =====

style IN fill:#E6E6FA,stroke:#8A2BE2,stroke-width:3px,color:black

style BUS fill:#FFFACD,stroke:#FFD700,stroke-width:3px,color:black

style BR fill:#FFE4E1,stroke:#FF69B4,stroke-width:3px,color:black

style SC fill:#F0FFF0,stroke:#32CD32,stroke-width:3px,color:black

style MEM fill:#F5F0FF,stroke:#9D67AE,stroke-width:3px,color:black

What this diagram is saying in words:

- Inputs go through the Scorable Processor so everything the system sees looks like a Scorable.

- Blossom uses those Scorables (and the goal) to grow a small reasoning forest.

- The Scoring layer evaluates that forest and decides what to promote.

- Nexus is where those promotions become long-term structure (nodes + edges).

- Memory keeps the detailed trace of each episode and all related information.

- VPM reads what happened and turns it into visual thought maps (and optionally feeds hints back in).

- The Event / Knowledge Bus carries all the “this happened” events between agents so the whole thing stays loosely coupled.

🕸️ Why Everything Is a Graph: The Architecture of Infinite Connection

We’ve just followed a single thought through Stephanie: it becomes a Scorable, blossoms into alternatives, gets scored, and lands in Nexus as a node.

Here’s the real trick:

When Stephanie solves a problem today, she doesn’t just produce an answer – she leaves behind a trace of how she got there.

That trace becomes the foundation for her next hundred solutions.

This isn’t just logging. It’s building a living cognitive ecosystem where every thought fertilizes the next.

So why build that ecosystem as a graph?

🧠 Our Belief: IWe Think in Graphs, Not Lists

Nexus exists because of a simple hypothesis:

Human cognition isn’t linear – it’s relational.

We don’t think in chains; we think in networks.

When you reason, you don’t walk a straight line. You bounce:

- from idea → example → counterexample

- from memory → analogy → refinement

- from “this worked before” → “let’s try a variation”

That’s a graph, not a pipeline.

Nexus makes that structure concrete:

- Every thought is a node.

- Every relationship (“leads to”, “explains”, “contradicts”, “refines”) is an edge.

- Every Blossom episode is its own local graph that gets merged into the global one.

Over time, Stephanie isn’t just answering questions – she’s growing a map of how she thinks.

🌐 Graphs All the Way Down (With a Concrete Example)

“Graphs all the way down” sounds cute; here’s what it actually means.

Imagine you ask:

“How can we model AI alignment in software?”

In Nexus, that might look like:

- Your question becomes Node A.

- Stephanie’s first attempt at an answer becomes Node B, with an edge

A → B(“addresses”). - Blossom runs on B and produces Nodes C, D, E – different refinements branching off that thought.

- After scoring, Node D wins and gets promoted; the

B → Dedge is strengthened as a “good path”. - Later, someone asks about cognitive safety. Nexus sees that D is relevant (via embeddings + graph neighbors) and links it into this new context.

Here’s the twist:

- The whole Blossom episode that created D – its search tree, scores, and decisions – is stored as its own Blossom graph.

- That entire subgraph can be treated as a single unit: embedded, scored, reused.

So you get:

- graphs of thoughts,

- graphs of reasoning episodes,

- and graphs where those graphs are the nodes.

If tensors are the basic unit of computation in PyTorch, graphs are the basic unit of cognition in Stephanie. A tensor is “an array of numbers”; a Nexus graph is “an array of thoughts and their relationships”.

♾️ The Infinite Connection Hypothesis

What we’re really building toward is bold:

An infinitely connected system that becomes more useful as it grows, instead of collapsing into chaos.

Every time Stephanie thinks:

- she adds nodes and edges,

- she reinforces good paths (habits),

- and she leaves behind a richer structure for the next thought.

Every action doesn’t just produce an answer – it makes the whole mind denser and easier to navigate.

⚖️ “Won’t This Turn Into a Hairball?” (Scaling Without Lying to Ourselves)

If your first reaction is “there’s no way this scales,” you’re thinking clearly.

A naive “connect everything to everything” graph would be unusable. Our answer is not “we’ll curate it by hand.” Our answer is:

Let the system help tend its own graph.

We lean on the rest of the architecture:

- Scoring tells us which nodes and paths are actually valuable.

- Blossom explores locally around promising areas instead of fanning out everywhere.

- Nexus can:

- promote strong paths and let weak edges fade,

- merge near-duplicates,

- collapse dense subgraphs into summaries.

- VPMs (Visual Thought Maps) let us see when regions are overgrown or underused, and train models to recognize “healthy” vs “noisy” structure.

The goal isn’t just “a bigger graph.” It’s a graph that’s actively organizing itself:

- dense regions solidify into expertise,

- sparse bridges become cross-domain links,

- empty spaces mark frontiers where new thinking is needed.

🧭 What This Unlocks

Building everything on Nexus is a bet:

- that representing reasoning as a graph of Scorables is closer to how we actually think,

- that the system can reuse reasoning, not just facts,

- and that with the right scoring + visualization, an “infinitely connected” mind can stay navigable, not chaotic.

This is why Nexus looks the way it does, and why Blossom, the Event Bus, the scorers, and VPMs all plug into it.

From here on, the rest of the post is about making this vision concrete:

- how we grow the graph,

- how we read it (Nexus views + VPMs),

- and how we’ll increasingly let Stephanie be her own gardener – deciding what to remember, what to compress, and where to explore next.

📈 Why graphs, not chains?

In the last section we argued that graphs are the natural fabric of thinking – that Stephanie should remember and reuse reasoning as a web, not a line.

As soon as we tried to build this “infinite graph” for real, we ran into the same problems the research community has been wrestling with. So instead of pretending we invented everything from scratch, we asked:

What prior work explains what’s working – and what’s wobbling – in Nexus?

That led us to three anchors:

- 🌳 Tree of Thoughts (ToT) – great at local exploration (branch / compare / prune).

- 🕸️ Graph of Thoughts (GoT) – great at long-horizon reuse (ideas as reconnectable nodes).

- 🌐 GraphRAG – great at global coherence (cluster, summarize, route).

They didn’t give us a blueprint. They gave us language, guard-rails, and composable ideas for what we’d already started building.

GoT: Graph of Thoughts: Solving Elaborate Problems with Large Language Models ToT: Tree of Thoughts: Deliberate Problem Solving with Large Language Models GraphRAG: From Local to Global: A Graph RAG Approach to Query-Focused Summarization🍖 The honest roast (and how we adapted each idea)

| 📄 Framework | 💡 What actually helped | ⚠️ Where it breaks in practice | 🔧 What we changed (Stephanie’s twist) | 🧬 Where it lives in Stephanie |

|---|---|---|---|---|

| 🌳 ToT | Forces alternatives before commitment; surfaces better local paths. | Branching factor explodes; LLMs self-plagiarize; “best” path ≠ stable over time. | We built Blossom: goal-aware sampling + novelty/diversity checks, then SICQL / EBT / HRM scoring with guard-rails. | 🌸 Blossom expansion & selection; inline Nexus metrics workers. |

| 🕸️ GoT | Treats ideas as nodes you can reconnect to later; edges capture reusable structure. | Naïve similarity → hairball cliques; edges become brittle; early nodes dominate. | Mutual-kNN, adaptive edge thresholds, temporal backtrack edges, consensus-walk ordering; run-level metrics to detect saturation. | 🕸️ Nexus node/edge builders + graph.json / run_metrics.json. |

| 🌐 GraphRAG | Clustering + summarization keeps the big picture coherent and query-focused. | Summaries drift; cluster collapse hides minority insights. | Validate clusters with goal_alignment, clustering_coeff, mean_edge_len, and keep raw exemplars alongside summaries. | Nexus exporters + VPM overlays (PyVis graphs, A/B smoke checks, filmstrips). |

Bottom line:

➡️ Chains are fine for one thought.

➡️ We needed graphs to remember, revisit, and compose thoughts across runs.

🟰 How those ideas map onto our components

-

🧩 Scorable → node (GoT)

Any datum becomes a Scorable seed (text, VPM tile, plan-trace step). The ScorableProcessor enriches it with domains, entities, embeddings – and that is the node we can reconnect later.

→ScorableProcessor,scorable_domains,scorable_entities,scorable_embeddings. -

🌸 Blossom → local search (ToT)

From a seed, Blossom generates variants, scores them, and keeps the winners. This is ToT’s useful core without the combinatorial hangover.

→ Blossom generator + inline scorers (SICQL / EBT / HRM / Tiny). -

🕸️ Nexus → global coherence (GraphRAG)

We stitch winners into a Graph of Thoughts, compute structure metrics, export frames/filmstrips, and use the graph itself (plus VPMs) to guide the next step.

→NexusInlineAgent→graph.json,frames.json,run_metrics.json,graph.html, VPM timelines.

Together, they give us local search, reusable nodes, and global structure in one loop.

🔥 What burned us (and how we fixed it)

Reality didn’t care about our diagrams. A few of the fires we had to put out:

-

📏 Length blow-ups in targeted runs

The model “tries harder” by writing novels.

→ Guard-rails in the smoke tool, length-aware scoring, and diversity-with-brevity heuristics in selection. -

🧱 Saturated scorers

Metrics likesicql.aggregate ≈ 100make deltas meaningless.

→ Expose raw sub-metrics (uncertainty, advantage) and add Tiny / HRM as sanity-check judges. -

🫧 Graph cliques & mushy neighborhoods

Everything starts to look similar; neighborhoods lose structure.

→ Clamp k for small-N, raise sim_threshold adaptively, add temporal backtrack edges, and monitor mutual_knn_frac + **clustering_coeff`. -

🎯 “Better” local scores, worse global structure

A path looks great locally but degrades the overall graph.

→ A/B at the run level: compare baseline vs targeted on both goal_alignment and graph health (edge length, clustering, component size, VPM patterns).

🍳 The working recipe (today)

- Seed: normalize anything into a Scorable.

- Enrich: attach domains, entities, embeddings, VPM tile.

- Blossom: generate candidates; score with SICQL/EBT/HRM; select under guard-rails.

- Trellis: insert into the Nexus graph; compute structure metrics; export frames.

- Prove: run A/B (random vs. goal-aware) and publish deltas.

We didn’t “implement ToT/GoT/GraphRAG.” We refit their best ideas into a loop that actually runs, measures itself, and improves one seed, one blossom, one graft at a time.

🧱 Storing a Living Graph: Nexus Database & Store

If Nexus is the graph of how Stephanie thinks, this is where that graph actually lives.

This isn’t “just storage.” It’s the physical memory of her mind:

- Every thought becomes a row.

- Every connection becomes an edge.

- Every activation leaves a pulse you can replay later.

We deliberately kept the stack boring so the behavior could be exciting:

- Postgres as long-term memory.

- A single store object (

NexusStore) as the only API agents can touch. - A handful of tables that map cleanly onto cognitive primitives.

You think in graphs. The database thinks in rows. The store is the translator between the two.

🗂 The core tables: ORM view of the mind

Under the hood, Nexus uses five ORM models. Each one corresponds to something cognitive, not just “a table”.

🧩 NexusScorableORM atomic thoughts

One row per Scorable:

id– stable external ID (chat turn, document hash, plan-step ID).text– the actual content (answer, snippet, plan step, etc.).target_type– what kind of thing this is ("document","answer","plan","snippet"…).domains,entities,meta– what it’s about, what it mentions, and where it came from.embedding,metrics– 1:1 children representing where it lives in vector space and how good it is.

If Stephanie has ever “thought” about something, it shows up here.

class NexusScorableORM(Base):

__tablename__ = "nexus_scorable"

id = Column(String, primary_key=True)

created_ts = Column(DateTime, default=datetime.utcnow, index=True)

chat_id = Column(String, index=True)

turn_index = Column(Integer, index=True)

target_type = Column(String, index=True)

text = Column(Text, nullable=True)

domains = Column(JSONB, nullable=True)

entities = Column(JSONB, nullable=True)

meta = Column(JSONB, nullable=True)

🧮 NexusEmbeddingORM semantic position (the “portable brain”)

Each Scorable gets a global embedding:

- Stored as JSONB for portability (

[float, ...]). - Optionally indexed with

pgvectorin production. - Used for KNN, clustering, and “find me things like this.”

class NexusEmbeddingORM(Base):

__tablename__ = "nexus_embedding"

scorable_id = Column(String, ForeignKey("nexus_scorable.id", ondelete="CASCADE"), primary_key=True)

embed_global = Column(JSONB, nullable=False)

norm_l2 = Column(Float, nullable=True)

Engineering note – “portable brain”:

NexusStore.knn() tries pgvector first (knn_pgvector) and falls back to pure-Python cosine (knn_python) if the DB doesn’t support it. That means:

- On your laptop: JSONB + Python KNN.

- In prod: pgvector index + ANN search.

Same agents, same API. Stephanie’s “semantic brain” is portable.

📊 NexusMetricsORM multi-dimensional judgment

This is where scorers leave their fingerprints:

columns– dimension names (["alignment","faithfulness","clarity", ...]).values– aligned float scores ([0.83, 0.79, 0.72, ...]).vector– convenient name→value map.

class NexusMetricsORM(Base):

__tablename__ = "nexus_metrics"

scorable_id = Column(String, ForeignKey("nexus_scorable.id", ondelete="CASCADE"), primary_key=True)

columns = Column(JSONB, nullable=False, default=list)

values = Column(JSONB, nullable=False, default=list)

vector = Column(JSONB, nullable=True)

This table powers:

- Graph health metrics,

- policy reports,

- and “did this Blossom actually improve things?” analysis.

🔗 NexusEdgeORM connections as habit strength

Edges are where thoughts become habits:

run_id– which graph slice we’re in ("live"vsrun_2024_11_01_frontier).src,dst– scorable IDs (logical foreign keys intonexus_scorable).type– what kind of relation this is ("knn_global","temporal_next","blossom_winner","shared_domain", …).weight– habit strength for this path.channels– per-dimension or per-experiment edge metadata.

class NexusEdgeORM(Base):

__tablename__ = "nexus_edge"

run_id = Column(String, primary_key=True)

src = Column(String, primary_key=True)

dst = Column(String, primary_key=True)

type = Column(String, primary_key=True)

weight = Column(Float, nullable=False, default=0.0)

channels = Column(JSONB, nullable=True)

created_ts = Column(DateTime, default=datetime.utcnow, index=True)

Every time a path helps solve a problem, its weight can increase. Over time, high-weight edges become the highways of Stephanie’s thinking.

❤️ NexusPulseORM cognitive observability

Pulses are snapshots of attention:

- “At time

ts, for goalG, Stephanie focused on scorableS, with this local neighborhood and subgraph size.”

class NexusPulseORM(Base):

__tablename__ = "nexus_pulse"

id = Column(Integer, primary_key=True, autoincrement=True)

ts = Column(DateTime, default=datetime.utcnow, index=True)

scorable_id = Column(String, nullable=False, index=True)

goal_id = Column(String, nullable=True, index=True)

score = Column(Float, nullable=True)

neighbors = Column(JSONB, nullable=True)

subgraph_size = Column(Integer, nullable=True)

meta = Column(JSONB, nullable=True)

This gives you cognitive observability:

- You can replay what the graph looked like when a decision was made.

- You can visualize which regions “lit up” during a run.

- You can debug bad decisions with real context, not vibes.

🧰 How agents actually use it: NexusStore, not raw ORM

Agents don’t import ORM classes. They talk to a single façade:

from stephanie.stores.nexus_store import NexusStore

store = NexusStore(session_maker, logger)

From their point of view, the API is semantic, not SQL:

# 1. Store a thought

store.upsert_scorable({

"id": scorable_id,

"chat_id": chat_id,

"turn_index": turn_index,

"target_type": "answer",

"text": answer_text,

"domains": domains,

"entities": entities,

"meta": {"source": "chat", "goal_id": goal_id},

})

# 2. Attach embedding + metrics

store.upsert_embedding(scorable_id, embedding_vec)

store.upsert_metrics(

scorable_id,

columns=["alignment","faithfulness","clarity"],

values=[0.83, 0.79, 0.72],

vector={"alignment":0.83,"faithfulness":0.79,"clarity":0.72},

)

# 3. Write graph structure for a run

store.write_edges(run_id, [

{"src": parent_id, "dst": child_id, "type": "blossom_candidate", "weight": 0.61},

{"src": parent_id, "dst": winner_id, "type": "blossom_winner", "weight": 0.74},

])

# 4. Record a pulse for UI + audit

store.record_pulse(

scorable_id=winner_id,

goal_id=goal_id,

score=0.74,

neighbors=[{"nid": nid, "sim": sim} for nid, sim in neighbors],

subgraph_size=len(neighbors),

meta={"run_id": run_id, "tag": "frontier_pick"},

)

From the agent’s perspective, there is no “database.” There is only “store this thought,” “connect these nodes,” “log this decision.”

NexusStore hides:

- sessions and transactions,

- pgvector vs Python fallback,

- and all the ORM plumbing.

📊 ER diagram: the schema of thought

Here’s the ER-style snapshot of the Nexus schema:

erDiagram

NEXUS_SCORABLE {

string id PK "Stable thought ID"

datetime created_ts "When thought formed"

string chat_id "Conversation context"

int turn_index "Position in dialogue"

string target_type "document|answer|plan|snippet"

text text "Raw content"

jsonb domains "Cognitive categories"

jsonb entities "Key concepts"

jsonb meta "Provenance & extras"

}

NEXUS_EMBEDDING {

string scorable_id PK,FK "Links to thought"

jsonb embed_global "Semantic vector"

float norm_l2 "Precomputed norm"

}

NEXUS_METRICS {

string scorable_id PK,FK "Links to thought"

jsonb columns "Dimensions"

jsonb values "Scores"

jsonb vector "Named scores"

}

NEXUS_EDGE {

string run_id PK "live | experiment"

string src PK "Source thought"

string dst PK "Target thought"

string type PK "knn_global|temporal_next|..."

float weight "Habit strength"

jsonb channels "Per-edge metadata"

datetime created_ts "When link formed"

}

NEXUS_PULSE {

int id PK "Heartbeat ID"

datetime ts "When it fired"

string scorable_id "Focused thought"

string goal_id "Current goal"

float score "Decision score"

jsonb neighbors "Local neighborhood"

int subgraph_size "Scope of context"

jsonb meta "Run-specific info"

}

NEXUS_SCORABLE ||--|| NEXUS_EMBEDDING : "has_embedding"

NEXUS_SCORABLE ||--|| NEXUS_METRICS : "has_metrics"

NEXUS_SCORABLE ||--o{ NEXUS_PULSE : "emits_pulses"

NEXUS_SCORABLE ||--o{ NEXUS_EDGE : "appears_as_src_or_dst"

🎯 Design principles

A few rules shaped this schema:

- Thoughts first: Scorables are the center; everything else hangs off them.

- Separation of concerns: content vs embeddings vs metrics vs structure vs activity.

- Run isolation:

run_idkeeps experiments from polluting live graphs. - JSON where it helps: enough flexibility to evolve without schema hell.

- Portable performance: same code on laptop and cluster; pgvector optional, not required.

- Store-only discipline: agents never write SQL; they perform cognitive operations.

In other words: this is the skeletal system of Stephanie’s mind.

Next, we’ll stop staring at the bones and look at how it behaves under load: how many nodes and edges we pushed through it, what the degree distributions look like, and whether thinking in graphs actually gets faster as the graph grows.

🧪 Benchmarking Reality: Can This Graph Keep Up With You?

It’s one thing to sketch an infinitely connected mind and talk about graphs and habits.

It’s another to ask the boring, brutal question a skeptic would:

On a regular consumer machine, does this thing actually keep up with a human day, or does it fall over?

So instead of guessing, we pushed the exact Nexus schema you just saw into a hard benchmark.

🖥️ The Setup: Real Schema, Real Database, No Tricks

On a local Postgres instance (standard Windows desktop, Postgres 16, default config), the benchmark script does this on a scratch database:

-

Reset the world

-

DROP TABLE IF EXISTS ...and recreate:nexus_scorable– synthetic “thoughts”nexus_metrics– per-thought scoresnexus_edge– graph edges

-

-

Generate a synthetic graph in memory

- 500,000 nodes → “thoughts” / Scorables

- 500,000 metrics rows → each thought gets

coverage+risk - 1,000,000 edges → random connections with different

types andweights

-

Bulk-load everything with

COPY- Write 2,000,000 rows (nodes + metrics + edges) to Postgres

-

Run a “Pulse” benchmark (this is real graph work, not a toy

SELECT 1) For each of 5,000 pulses:-

Pick a random source node

-

Join

nexus_edgeto find its neighbors for thisrun_id -

Join

nexus_metricsto read theircoverage -

Aggregate a weighted sum:

SUM(edge.weight * neighbor.coverage)- i.e. “how strong is the local neighborhood around this thought?”

-

-

Clean up after itself

- Drops the tables at the end, so you can rerun without polluting anything.

No caching tricks, no connection pool wizardry, no pgvector, no materialized views. Just vanilla SQL against the real tables.

📊 The Numbers (First Serious Touch to Disk)

On this setup, here’s what we get:

--- PostgreSQL Nexus Graph Benchmark ---

DB=co host=localhost:5432 | nodes=500,000 edges=1,000,000 pulses=5,000

Setting up Nexus tables (DROP + CREATE)...

Tables created.

Starting bulk write test: 500,000 nodes, 500,000 metrics, 1,000,000 edges...

Bulk insert completed in 58.78 seconds.

Total rows: 2,000,000

Throughput: 34,023 rows/second

Starting Pulse traversal test (5,000 pulses)...

Pulse traversal completed in 1.06 seconds.

Throughput: 4,696 pulses/second

--- Summary ---

Bulk load: 58.78s, 34,023 rows/s

Pulse: 1.06s, 4,696 pulses/s

Cleaning up Nexus tables (DROP)...

Cleanup complete.

Same thing, compact:

| 🔢 Metric | Value |

|---|---|

| Nodes (“thoughts”) | 500,000 |

| Edges (“connections”) | 1,000,000 |

| Total rows written | 2,000,000 |

| Bulk load throughput | ≈ 34,000 rows / second |

| Pulse throughput | ≈ 4,700 pulses / second |

🧠 What Is “500,000 Thoughts,” Really?

For a database person, 500k rows is small.

For a personal AI, 500k nodes is enormous:

- If a node is a message, note, or plan step, 500k nodes comfortably covers years of heavy daily usage.

- If a node is a snippet or paragraph, it’s on the order of thousands of documents / chats broken down into reusable pieces.

The benchmark:

- Loads that entire lifetime of thinking in under a minute.

- Then runs thousands of graph pulses per second over it.

And remember: each pulse is not just a key lookup. It’s:

- A graph traversal (

edgesfor a source node +run_id) - A join into metrics

- A weighted aggregation over a quality dimension (

coverage)

That’s exactly the shape of the queries a thinking companion needs to run all day.

🧍♀️ What This Means for a Personal AI Companion

Now put those numbers in human terms.

A “pulse” is the system asking:

“Given this thought, what’s nearby in my mind, and how strong is it?”

You want your companion to do that constantly:

- Every time you type a sentence

- Every time you open a doc

- Every time you revisit a project from months ago

At ~4,700 pulses per second:

-

You can run rich, background graph checks on every interaction and still feel instant responses.

-

The database is effectively bored most of the time which is perfect, because it leaves headroom for:

- Your local LLM (or remote models) to do actual reasoning

- VPM rendering and visualization

- Multiple specialized companions (coder, researcher, planner) sharing the same Nexus graph.

And because it’s this fast on your own machine, nothing about your graph of thoughts needs to leave the box.

Local-first by design: speed + efficiency mean your “second brain” can live with you, not in someone else’s data center.

🤨 “Isn’t a Hand-Rolled Graph on Postgres… Questionable?”

Totally fair critique.

Graph databases exist. Building our own graph layer on Postgres sounds, at first, like reinventing the wheel.

This benchmark is our first hard answer to that skepticism:

- We get ACID, migrations, joins, JSONB, and tooling from Postgres.

- We keep the schema simple and explicit: scorables, metrics, edges, pulses.

- We stay portable: the same design runs on a laptop, a workstation, or a small server.

- And even in this “dumb” form (no pgvector, no caching, no sharding), it already handles human-scale cognition easily.

For a single-user personal AI or a small team assistant, this isn’t just “good enough” it’s overkill in the right direction.

We’re not claiming this is the final architecture for a trillion-node, multi-tenant cloud brain. We’re saying:

On the very first serious touch to disk, this “questionable” graph is already past what you need for a deep, always-on companion.

✅ The Point of This Section

This is the “down to earth” moment in the post:

-

We’ve described an ambitious, graph-of-graphs cognitive system.

-

Here, we show that even a straightforward Postgres implementation can:

- Store hundreds of thousands of thoughts

- Maintain millions of connections

- Execute thousands of graph queries per second

- All on a consumer machine, with your data staying local.

With that foundation proven, we can safely move on to the fun part:

- Visualizing this activity as VPMs and garden films

- Layering smarter routing and scoring on top

- And actually behaving like a personal, persistent mind not just a chat window.

Perfect, that feedback is actually very aligned with what you’re trying to do here. Here’s an enhanced, self-contained Scorable section that:

- Leans into the “atom → molecule → graph” metaphor

- Uses concrete, recursive examples (conversation → messages → graphs → VPMs)

- Emphasizes the “universal interface” / everything is scorable

- Stays tight enough that it doesn’t feel like a whole new chapter

You can drop this straight into the blog as the “Scorable” section and tweak emojis/titles later.

⚛️ Atoms of Thought: Everything Is a Scorable

In the last section, we proved that our Nexus graph can run at human scale on a normal machine.

But that raises a deeper question:

If the graph is the brain, what are its atoms?

In Stephanie, the atomic unit of cognition is the Scorable.

Think of it like this:

- Scorables are atoms

- Subgraphs are molecules

- Whole Nexus graphs are organisms

Atoms combine into molecules. Scorables combine into subgraphs. Subgraphs combine into higher-order graphs. The entire system is built out of these same repeating units.

☢️ The Universal Shape of a Thought

When I say “Scorable”, I don’t just mean “a bit of text.”

flowchart TD

%% ===== RAW DATA SOURCES =====

A["💬 Conversation Turn"]

B["📄 Document"]

C["📋 Plan Trace"]

D["🖼️ VPM Image"]

E["🤖 Agent Output"]

F["📊 Nexus Graph"]

%% ===== SCORABLE FACTORY - THE UNIVERSAL ADAPTER =====

SF["🏭 ScorableFactory<br/>Universal Cognitive Adapter"]

A --> SF

B --> SF

C --> SF

D --> SF

E --> SF

F --> SF

%% ===== SCORABLE OUTPUTS =====

S1["🧩 Scorable: Conversation Turn"]

S2["🧩 Scorable: Document"]

S3["🧩 Scorable: Plan Trace"]

S4["🧩 Scorable: VPM"]

S5["🧩 Scorable: Agent Output"]

S6["🧩 Scorable: Nexus Graph"]

SF --> S1

SF --> S2

SF --> S3

SF --> S4

SF --> S5

SF --> S6

%% ===== NEXUS GRAPH - WHERE SCORABLES CONNECT =====

NG["🕸️ Nexus Graph<br/>Growing Mind"]

S1 --> NG

S2 --> NG

S3 --> NG

S4 --> NG

S5 --> NG

S6 --> NG

%% ===== RECURSIVE LOOP - GRAPHS BECOME SCORABLES =====

NG -->|"extract subgraph"| F

F -->|"becomes"| SF

%% ===== COGNITIVE PROCESSING =====

NG --> VG["🎨 VPM Generator"]

VG -->|"creates"| D

D --> SF

NG --> AN["🔍 Analysis Engine"]

AN -->|"produces"| E

E --> SF

%% ===== STYLING =====

style A fill:#8A2BE2,stroke:#333,stroke-width:2px,color:white

style B fill:#4169E1,stroke:#333,stroke-width:2px,color:white

style C fill:#32CD32,stroke:#333,stroke-width:2px,color:white

style D fill:#FF4500,stroke:#333,stroke-width:2px,color:white

style E fill:#FF8C00,stroke:#333,stroke-width:2px,color:white

style F fill:#9D67AE,stroke:#333,stroke-width:2px,color:white

style SF fill:#FFD700,stroke:#333,stroke-width:3px,color:black

style S1 fill:#8A2BE2,stroke:#333,stroke-width:2px,color:white

style S2 fill:#4169E1,stroke:#333,stroke-width:2px,color:white

style S3 fill:#32CD32,stroke:#333,stroke-width:2px,color:white

style S4 fill:#FF4500,stroke:#333,stroke-width:2px,color:white

style S5 fill:#FF8C00,stroke:#333,stroke-width:2px,color:white

style S6 fill:#9D67AE,stroke:#333,stroke-width:2px,color:white

style NG fill:#1E90FF,stroke:#333,stroke-width:3px,color:white

style VG fill:#FF6347,stroke:#333,stroke-width:2px,color:white

style AN fill:#20B2AA,stroke:#333,stroke-width:2px,color:white

classDef rawData fill:#8A2BE2,stroke:#333,color:white,stroke-width:2px

classDef factory fill:#FFD700,stroke:#333,color:black,stroke-width:3px

classDef scorable fill:#4c78a8,stroke:#333,color:white,stroke-width:2px

classDef nexus fill:#1E90FF,stroke:#333,color:white,stroke-width:3px

classDef processor fill:#FF6347,stroke:#333,color:white,stroke-width:2px

class A,B,C,D,E,F rawData

class SF factory

class S1,S2,S3,S4,S5,S6 scorable

class NG nexus

class VG,AN processor

In practice, Stephanie treats anything she might care about as a Scorable:

- A single user message or assistant reply

- A full conversation turn or an entire chat

- A document, section, theorem, or triple

- A PlanTrace or a single ExecutionStep

- A VPM (visual policy map snapshot)

- A Nexus graph summarizing a run

- Even another Stephanie run (“what that other agent just did”)

- Numbers, metrics, sliders, UI state – all wrap-able as Scorables

If it can be evaluated, compared, or connected, we treat it as a Scorable.

That’s the core rule:

Everything Stephanie thinks with is first normalized into the same Scorable shape.

This is what lets the same scoring and graph machinery work across all modalities.

🕸️ Graphs of Graphs: Concrete Example

The recursive bit (“a graph is a node in another graph”) can sound abstract, so here’s a concrete path:

-

Conversation level

- The whole conversation →

ScorableType.CONVERSATION - Each user↔assistant pair →

ScorableType.CONVERSATION_TURN - Each individual message →

ScorableType.CONVERSATION_MESSAGE

- The whole conversation →

-

Reasoning level

- Stephanie runs a pipeline to answer a hard question →

PlanTrace - That

PlanTracebecomes a Scorable →ScorableType.PLAN_TRACE - Each step in the plan becomes its own Scorable →

PLAN_TRACE_STEP

- Stephanie runs a pipeline to answer a hard question →

-

Graph level

- We add those Scorables into Nexus as nodes → they’re now part of the “thinking graph”

- We extract a subgraph of related thoughts and store that as a summary Scorable →

ScorableType.NEXUS_GRAPH

-

Visual level

- We encode that subgraph into a VPM image →

ScorableType.VPM - That VPM gets scored (“Is this reasoning healthy?”) like any other Scorable

- We encode that subgraph into a VPM image →

So you get a stack like:

message → turn → plan trace → subgraph → VPM → analysis

…and every single layer is just another Scorable.

Stephanie doesn’t need special paths for “reasoning about graphs” vs “reasoning about text” – it’s all the same shape.

🔘 The Scorable Contract: Minimal, but Universal

Under the hood, the Scorable class is intentionally tiny – the narrow waist everything passes through:

class Scorable:

def __init__(

self,

text: str,

id: str = "",

target_type: str = "custom",

meta: Dict[str, Any] = None,

domains: Dict[str, Any] = None,

ner: Dict[str, Any] = None,

):

self._id = id

self._text = text

self._target_type = target_type

self._metadata = meta or {}

self._domains = domains or {}

self._ner = ner or {}

At this level, a Scorable has just enough to be thought-like:

id– stable identity (so we can join back to DB rows, casebooks, graphs)target_type– what kind of thing it is (document,conversation_turn,plan_trace,vpm,nexus_graph, …)text– a canonical text view (for LLMs, loggers, some scorers)meta/domains/ner– optional annotations we already know

Importantly, it is not tied to any specific table or ORM. It’s a universal cognitive interface:

Any source that wants to enter the mind has to agree to speak “Scorable”.

🧰 ScorableFactory: Adapters from the World to the Mind

To get from “raw stuff in the system” to this clean shape, we use ScorableFactory.

It knows how to adapt everything we care about:

# Documents

doc_scorable = ScorableFactory.from_orm(DocumentORM(...))

# A section of a paper

section_scorable = ScorableFactory.from_orm(DocumentSectionORM(...))

# A user↔assistant turn

turn_scorable = ScorableFactory.from_orm(ChatTurnORM(...))

# A full reasoning trace

trace_scorable = ScorableFactory.from_plan_trace(plan_trace, goal_text)

# A VPM snapshot

vpm_scorable = ScorableFactory.from_dict(vpm_dict, target_type=ScorableType.VPM)

Conceptually, the path looks like:

Any source → ScorableFactory → Scorable → Nexus / scorers / VPMs / training

That means if we want Stephanie to start reasoning about UI layouts, telemetry streams, or another agent’s outputs, we don’t rewrite the brain – we just add a new adapter that turns them into Scorables.

🧬 Every Interface Is Scorable

This is the part that matters for the personal AI vision:

- Your chat UI can be a Scorable (so the system can score “interface clarity”).

- A cluster of notifications can be a Scorable (“is this overload?”).

- Another Stephanie instance can write a summary of its reasoning – that summary is a Scorable too.

- Even a Nexus run (the graph we built from your day) is a Scorable that future runs can look back on and judge.

Because of that, Stephanie can eventually reason about her own interfaces and tools using the same machinery she uses to reason about your questions.

Everything you see, and everything she does, can be pulled through the same Scorable funnel and made comparable.

🔗 How This Ties Back to the Graph & Benchmark

When we said in the previous section that we loaded 500,000 “thoughts” into the database and traversed them at 4,700 pulses/second, those “thoughts” are exactly these Scorables:

- Each row in

nexus_scorableis the persisted shell of a Scorable. - Each row in

nexus_metricsis “what the scorers thought about that Scorable.” - Each edge in

nexus_edgeis “how those Scorables relate.”

So the benchmark wasn’t about abstract rows – it was about how many Scorables your machine can comfortably handle.

And the answer is: for a single human, on a consumer box, more than enough.

⏭️ Next: The Scorable Processor – How Atoms Gain Structure

This section was about ontology:

What is a “thing we think about”? How do we make everything fit into one shape?

Next, we move from the atom to the muscle:

- How the ScorableProcessor takes these raw Scorables

- How it enriches them with domains, entities, embeddings, and metrics

- How that enrichment is standardized into feature rows that can be stored, graphed, and used to train better scorers

Once you see Scorables as the atoms of thought, the ScorableProcessor is what lets those atoms bind into molecules and, eventually, into a mind.

Here’s a cleaned-up, fully-integrated version of the Scorable Processor section, with your code + Nexus story + the best bits from the feedback folded in.

You can drop this straight into the post as the next major section after the Scorable / “atom of thought” section.

🧠 The Scorable Processor: Where Context Creates Meaning

In 1956, George Miller published “The Magical Number Seven, Plus or Minus Two” and made a simple point: we survive cognitive overload by chunking grouping raw bits into meaningful units so the mind can work with them.

The Scorable is Stephanie’s chunk: a single, universal atom of thought.

The Scorable Processor is where those atoms become usable cognition.

It doesn’t just “add features.” It takes a raw Scorable, looks at the goal and the context, and turns that atom into a feature-rich, goal-aware thought that can steer Nexus, train models, and even change the direction of a conversation.

This component is new in this release. We added it because the old, document-only pipelines simply broke the moment Nexus went live.

🌐 The Messy Reality the Processor Had to Fix

Early Stephanie pipelines assumed one thing:

“We are scoring documents.”

Then Nexus arrived and immediately invalidated that assumption.

Now, in a single run, Stephanie might be dealing with:

- A chat turn (“User asked this; assistant answered that”)

- A document section from a paper

- A PlanTrace step from a reasoning episode

- A VPM tile (an image distilled from metrics)

- A summary of another Stephanie run

- A random JSON blob from some external tool

They:

- Come from different places (DB rows, files, APIs, other AIs)

- Need different features

- Mean different things under different goals

A debugging run cares about very different signals to a research-evaluation run.

The Scorable Processor exists to solve that:

It forces all of these into a single cognitive format, then re-interprets them based on context.

🧭 Where It Sits in the Cognitive Stack

Here’s the Scorable Processor inside the larger flow:

flowchart TB

subgraph Sources["Sources: Anything Can Be Thought"]

A1["ChatTurnORM<br/>(User message, Assistant reply)"]

A2["DocumentORM<br/>(Paper, blog, code file)"]

A3["PlanTrace<br/>(Reasoning steps)"]

A4["VPM / Image<br/>(Visual Policy Map tiles)"]

A5["Other Stephanie run<br/>(Summary, metrics)"]

A6["Filesystem / Logs<br/>(JSON, text, traces)"]

end

Sources -->|normalize| F["ScorableFactory<br/>Universal adapter"]

F --> S["Scorable<br/>id • target_type • text • meta"]

S --> P["ScorableProcessor<br/>Context-aware enrichment"]

subgraph Ctx["Context"]

C1["goal_text"]

C2["pipeline_run_id"]

C3["parent_scorable"]

C4["current_graph / episode"]

end

Ctx --> P

subgraph Outputs["Thinking Surface"]

R["ScorableRow<br/>canonical feature row"]

D["scorable_domains<br/>(cognitive categories)"]

E["scorable_embeddings<br/>(semantic positions)"]

M["scorable_metrics<br/>(SICQL/HRM/Tiny/EBT)"]

V["VPM / vision_signals<br/>(visual policy maps)"]

MAN["manifest.json & features.jsonl<br/>(run artifacts)"]

end

P --> R

P --> D

P --> E

P --> M

P --> V

P --> MAN

subgraph Uses["Where These Thoughts Go"]

NX["Nexus Graph<br/>living memory"]

ZM["ZeroModel / Tiny Vision<br/>visual reasoning"]

B["Blossom / Arena<br/>exploration & selection"]

T["Trainers<br/>MRQ / SICQL / HRM / EBT"]

end

R --> NX

M --> NX

M --> B

M --> T

V --> ZM

classDef source fill:#f9f2d9,stroke:#faad14;

classDef processor fill:#e6f7ff,stroke:#1890ff;

classDef output fill:#f6ffed,stroke:#52c41a;

classDef uses fill:#fff7e6,stroke:#fa8c16;

class Sources source;

class P,Ctx processor;

class Outputs output;

class Uses uses;

Left: any source that can be turned into a Scorable. Middle: the Scorable Processor, where context creates meaning. Right: the surfaces Stephanie actually thinks on Nexus, VPMs, trainers, Arena.

🔁 From Static Datum to Contexted Thought

Conceptually, ScorableProcessor does three big jobs every time a Scorable shows up:

-

Hydrate – “What do we already know?”

- Reuse cached domains, entities, and embeddings from the DB

- Avoid recomputing expensive features when possible

-

Enrich (goal-aware) – “What does this mean right now?”

- Compute missing embeddings

- Infer domains with the real

ScorableClassifier - Run NER with

EntityDetector - Call the scoring stack (SICQL, HRM, Tiny, etc.) using the current goal

- Optionally generate a VPM via

ZeroModelService

-

Emit & Persist – “Make it usable everywhere.”

- Build a canonical

ScorableRow(the row Nexus and trainers expect) - Write domains/entities/embeddings to side-tables

- Append to

features.jsonlso runs are inspectable and repeatable

- Build a canonical

In code, at pipeline level, it looks like this:

processor = ScorableProcessor(cfg, memory, container, logger)

rows = await processor.process_many(

inputs=scorables, # List[Scorable or dict or ORM-backed objects]

context={

"pipeline_run_id": run_id,

"goal": {"goal_text": "Help the user debug their code"},

},

)

Same Scorable instance, different enriched view depending on the context you pass in.

🧬 Feature Layers: What Actually Gets Added

The implementation you saw in scorable_processor.py is long because it’s production-hardened, but conceptually it’s a fan-out over feature layers.

1. Hydration from providers

for provider in self.providers: # DomainDBProvider, EntityDBProvider, ...

acc.update(await provider.hydrate(scorable))

If this thought (or a near-duplicate) has been seen before, we pull back what we already know.

2. Embeddings (multi-backend)

emb = self.memory.embedding.get_or_create(scorable.text)

floats = self._ensure_float_list(emb)

if floats is not None:

acc.setdefault("embeddings", {})["global"] = floats

memory.embeddingcan be H-Net, HF, Ollama, or a mix.- The processor doesn’t care which model; it just normalizes to

List[float].

3. Domains (goal-conditioned)

need_domains = not acc.get("domains") or len(acc["domains"]) < self.cfg.get("min_domains", 1)

if need_domains:

inferred = self.domain_classifier.classify(text)

for name, score in inferred:

acc.setdefault("domains", []).append({"name": name, "score": score})

- Uses your real

ScorableClassifier(not the old toyguess_domain). - Seeds + goal + text decide whether this thought is

math,safety,evaluation,planning, etc.

4. Entities / NER

if self.entity_extractor and not acc.get("ner") and self.cfg.get("enable_ner_model", True):

ner = self.entity_extractor.detect_entities(text)

acc["ner"] = ner or []

- Names, tools, orgs, APIs, etc., become structured spans that other agents can latch onto.

5. Scorer metrics (SICQL / HRM / Tiny / …)

if self.scoring and self.cfg.get("attach_scores", True):

goal_text = Scorable.get_goal_text(scorable, context=context)

ctx = {"goal": {"goal_text": goal_text}, "pipeline_run_id": run_id}

vector: Dict[str, float] = {}

for name in self.scorers: # e.g. ["sicql", "hrm", "tiny"]

bundle = (

self.scoring.score_and_persist if self.persist

else self.scoring.score

)(

scorer_name=name,

scorable=scorable,

context=ctx,

dimensions=self.dimensions,

)

alias = self.scoring.get_model_name(name)

flat = bundle.flatten(numeric_only=True)

for k, v in flat.items():

vector[f"{alias}.{k}"] = float(v)

vector[f"{alias}.aggregate"] = float(bundle.aggregate())

acc["metrics_vector"] = vector

acc["metrics_columns"] = sorted(vector.keys())

acc["metrics_values"] = [vector[c] for c in acc["metrics_columns"]]

This is where a Scorable stops being “just text” and becomes a point in policy space:

- multiple scorers

- multiple dimensions (coverage, reasoning, faithfulness, risk, …)

- all packed into a single metrics vector

6. VPM / Vision signals (numbers → pixels)

if self.zm and acc.get("metrics_columns") and acc.get("metrics_values"):

vpm_u8_chw, meta = await self.zm.vpm_from_scorable(

scorable,

metrics_values=acc["metrics_values"],

metrics_columns=acc["metrics_columns"],

)

acc["vision_signals"] = vpm_u8_chw

acc["vision_signals_meta"] = meta

From here, ZeroModel and Tiny-vision models can read thoughts as images:

- One Scorable → metrics → Visual Policy Map

- Same atom, now visible as a tile in Stephanie’s visual cortex

7. Row build + side-table persistence

row_obj = self._build_features_row(scorable, acc)

row = row_obj.to_dict()

for writer in self.writers: # DomainDBWriter, EntityDBWriter, ...

await writer.persist(scorable, acc)

if self.enable_manifest:

await self.write_to_manifest(row)

ScorableRowbecomes the canonical “feature row” for Nexus & trainers.- Domains, entities, embeddings live in side-tables (no schema explosion).

features.jsonl+manifest.jsongive you a trail of what was computed, when, and with which models.

🎯 Same Text, Different Goal → Different Thought

The killer feature here is context conditioning.

The same text is not the same thought when your goal changes.

Take a simple line:

“The model’s accuracy improved by 12% after fine-tuning.”

As a debugging assistant:

- Domains:

programming,debugging,performance - Metrics emphasize:

helpfulness,concreteness,next_step_clarity - VPM: mostly green “this looks like a useful improvement signal”

As a research reviewer:

- Domains:

research,evaluation,reproducibility - Metrics emphasize:

rigour,reproducibility,significance - VPM: more yellow “where are the baselines, datasets, details?”

The text bytes are identical; the Scorable is the same object.

What changes is:

- the goal_text in the context

- the dimensions we request

- which parts of the metrics space we actually care about downstream

In code, you can see this directly:

turn = ScorableFactory.from_orm(chat_turn_orm)

rows_debug = await processor.process_many(

[turn],

context={"goal": {"goal_text": "Help the user debug their code"}},

)

rows_review = await processor.process_many(

[turn],

context={"goal": {"goal_text": "Evaluate the scientific strength of this claim"}},

)

Same input; two different enriched rows; two different places in the Nexus; likely two different Blossoms and training examples later.

The processor doesn’t just describe data; it re-casts it in light of what you’re trying to do.

That’s why a single Scorable can genuinely change the course of a conversation or a research run.

🔌 Extendable by Design

We know we’re not done inventing features.

So the Scorable Processor is built as a pluggable assembly line:

- Add a new embedding model → plug a new embedding backend into

memory.embedding. - Add a new domain classifier → add a provider/writer pair; turn it on in

cfg. - Add a new metric head (risk, vibe, creativity) → register a new scorer in

ScoringService. - Add a new visual representation → call out to a different VPM generator or vision encoder.

You don’t rewrite Nexus or the trainers. You don’t change the Scorable class.

You add another step on the assembly line and let the Processor enrich thoughts with a new view.

🧪 A Minimal Pipeline Stage Using the Processor

Here’s a trimmed stage that shows how this actually appears in a pipeline:

from stephanie.scoring.scorable import ScorableFactory

from stephanie.scoring.scorable_processor import ScorableProcessor

class ..FeaturesStage:

def __init__(self, cfg, memory, container, logger):

self.processor = ScorableProcessor(cfg.get("processor", {}), memory, container, logger)

self.input_key = cfg.get("input_key", "scorables")

self.output_key = cfg.get("output_key", "scorable_features")

async def run(self, context: dict) -> dict:

"""

Expects context[self.input_key] = List[dict|Scorable|ORM].

Produces:

- context[self.output_key] = List[dict] (ScorableRow dicts)

"""

raw_items = list(context.get(self.input_key) or [])

# 1) Normalize everything to Scorables

scorables = []

for item in raw_items:

if isinstance(item, str):

scorables.append(ScorableFactory.from_text(item, target_type="custom"))

elif hasattr(item, "__class__"): # ORM / PlanTrace / etc.

scorables.append(ScorableFactory.from_orm(item))

else:

scorables.append(ScorableFactory.from_dict(item))

# 2) Process with goal-aware context

goal_text = (context.get("goal") or {}).get("goal_text", "")

rows = await self.processor.process_many(

scorables,

context={

"pipeline_run_id": context.get("pipeline_run_id"),

"goal": {"goal_text": goal_text},

},

)

# 3) Stash for Nexus + training

context[self.output_key] = rows

return context

After this runs, every object document, chat turn, trace step, VPM is now a ScorableRow plus a set of side-table entries, ready for Nexus, ZeroModel, Arena, or any trainer to consume.

🌱 Why This Piece Is a Killer Feature

Without the Scorable Processor, Nexus would be:

- a graph of raw text blobs,

- tied to a single embedding model,

- blind to domains, entities, and context.

With it, every node in the graph becomes a dynamic, multi-view, goal-aware entity:

- It can be scored along dozens of dimensions.

- It can be seen as text, as metrics, or as a VPM image.

- It can be re-interpreted under new goals without touching the underlying data.

- It can pull the system in new directions when its scores or neighbors change.

This is where a “piece of data” becomes a decision point something that can bend the trajectory of a conversation, a research pipeline, or a training run.

Scorable gave us the atom. The Scorable Processor turns that atom into a force.

⛲ Features: The Intelligence Layer That Gives Thoughts Their Meaning

If the Scorable is the seed of thought and the Memory Tool is the soil where knowledge grows, then features are the nutrients that transform raw information into meaningful cognition.

Features are not just metadata – they’re the intelligence layer that allows Stephanie to see patterns, make connections, and understand context. They’re what turn a simple string of text into a rich, multidimensional thought that can participate in Stephanie’s cognitive ecosystem.

In practice, they’re exactly what the Scorable Processor (and its friends on the bus) produce: they take a raw Scorable and fan out into dozens of features, then hand them off to the Memory Tool and Nexus.

🪡 What Makes a Feature?

A feature is any piece of information that helps Stephanie understand, compare, or reason about a Scorable.

Unlike the Scorable itself (which is fixed once created), features can be:

-

Static – computed once and rarely changed

- e.g. embeddings, initial domains, extracted entities.

-

Dynamic – updated as new context or neighbors emerge

- e.g. graph centrality, relationship scores, usage counts.

-

Goal-dependent – computed differently based on the current objective

- e.g. “debug my code” vs “extract research claims”.

-

Transient – used only within a single run / PlanTrace

- e.g. temporary probe metrics, uncertainty traces, debugging flags.

This flexibility is crucial. It means the same Scorable can “mean” different things in different contexts: a paragraph might be a supporting argument in one discussion, a key insight in another, or a risk indicator in a safety review.

🪐 The Feature Ecosystem

Stephanie’s mind thrives on a diverse ecosystem of features, each serving a specific cognitive purpose. A (partial) map:

-

Semantic Features – the “what” of the thought

- Domains:

["cognitive_science", "ai_alignment", "graph_theory"] - Named entities:

["VPM", "Nexus", "Stephanie"] - Embeddings: 384–1024-dim vectors placing thoughts in semantic space

- Facts / triplets: subject–relation–object triples extracted from text

- Domains:

-

Quality Features – the “how good” of the thought (mostly from SICQL / EBT / HRM / Tiny)

- Clarity – how well-structured the reasoning is

- Faithfulness – alignment with source material

- Relevance – connection to the current goal

- Coherence – local logical flow

- Coverage – how comprehensively it addresses the topic

- Energy / uncertainty – how confident a model is in its own judgment

-

Reliability & Risk Features – the “how trustworthy / how dangerous”

- Agreement – consistency across multiple scorers (0–1)

- Stability – resistance to perturbations/paraphrases (0–1)

- Evidence count & diversity – how many supporting sources and domains

- Risk indicators – hallucination risk, safety risk, speculative vs factual

-

Temporal & Provenance Features – the “where and when it came from”

- Timestamps – created_at, updated_at, last_used_at

- Source agent / worker – which service produced this (

scorable_processor,vpm_worker_01,risk_scanner) - Model / prompt IDs – which model, config, or prompt template was used

- Run / arena IDs – which experiment, training run, or arena match it belongs to

- Lineage – parent Scorables, PlanTraces, MemCubes, bus messages

These are the features that make Learning-from-Learning possible: you can ask “who thought this, when, and under which policy?” and train the next generation accordingly.

-

Visual & Structural Features – the “how it looks and where it sits”

- VPM tiles / filmstrips – multi-channel images encoding policy/quality dimensions

- Spatial position – coordinates in the Nexus graph or VPM grid

- Graph metrics – degree, centrality, bridge scores, cluster membership

- Motifs – recurring local graph patterns: typical failure shapes, canonically good reasoning shapes, etc.

Not every Scorable needs every feature, and not every feature is persisted. Instead, features grow incrementally: some are attached synchronously by the Scorable Processor; others are added later by dedicated workers listening on the bus.

🌎 From Raw Scorable to Living Thought

Features are what transform Stephanie from a reactive system into a reflective one.

# A raw scorable (just data)

scorable = {

"id": "turn_142",

"text": "How do we make AI think better?",

"type": "conversation_turn",

}

# After feature enrichment (a living thought)

enriched = {

"scorable_id": "turn_142",

"domains": ["cognitive_science", "ai_alignment"],

"entities": ["AI", "think"],

"embed_global": [0.87, -0.23, ..., 0.41],

"metrics_vector": {

"clarity": 0.85,

"faithfulness": 0.92,

"relevance": 0.98,

},

"vpm_png": "vpm/turn_142.png",

"spatial_position": {"x": 234.5, "y": 187.2},

"provenance": {

"source_worker": "scorable_processor",

"run_id": "ssr-2025-11-18-0012",

"created_at": "2025-11-18T20:41:03Z",

},

}

Now Stephanie can:

- Recognize that this question connects to previous discussions about VPMs.

- Understand it’s highly relevant to the current goal of building cognitive systems.

- See that it’s clearer and more focused than similar past questions.

- Place it appropriately in the thought graph and trace who produced which scores, when.

Crucially, this enrichment is lazy and asynchronous. We don’t block the user while a VPM renders or a deep HRM pass runs. Those features arrive later, carried by messages on the bus, and are merged into the same Scorable over time.

🔄 The Feature Lifecycle

Features don’t all arrive at once. They follow a lifecycle that mirrors human cognition:

-

Perception – immediate, cheap signals

- domains, entities, basic embeddings, obvious risk tags.

-

Reflection – deeper evaluation

- multi-scorer quality metrics, uncertainty, agreement.

-

Integration – structural & visual embedding

- VPM generation, graph placement, structural metrics.

-

Consolidation – making it part of long-term habit

- caching, distillation into MemCubes / cartridges, policy updates.

flowchart TB

%% ==================== FEATURE LIFECYCLE ====================

subgraph L["🔄 Feature Lifecycle"]

P["🎯 Perception<br/>📝 Domains • Entities • Embeddings"]

R["📊 Reflection<br/>⚖️ SICQL • EBT • HRM • Tiny"]

I["🕸️ Integration<br/>🎨 VPM • Graph Placement"]

C["💾 Consolidation<br/>💫 Cache • Habits • Distillation"]

P --> R

R --> I

I --> C

end

%% ==================== OUTCOME DECISION ====================

C --> H{"✨ Outcome Evaluation"}

H -- "✅ Positive Impact" --> S["📈 Strengthen Paths<br/>🔗 Edges • Weights • Policies"]

H -- "❌ Needs Improvement" --> W["🌱 Encourage Exploration<br/>✂️ Prune • Adjust • Adapt"]

S --> P

W --> P

%% ==================== COMBINATORIAL INTELLIGENCE ====================

subgraph CI["🧠 Combinatorial Intelligence"]

direction TB

subgraph FGroup["💎 Quality Features"]

F1["🎯 Clarity"]

F2["🔗 Coherence"]

F3["📚 Evidence Count"]

F4["🎪 Domain Alignment"]

end

subgraph VGroup["🌈 Visual Features"]

V1["⚖️ VPM Symmetry"]

V2["🕸️ Spatial Centrality"]

end

M["✨ Feature Fusion<br/>🎯 Weighted • Learned • Dynamic"]

U["🏆 Merit & Understanding<br/>💡 Insight • Value • Impact"]

FGroup --> M

VGroup --> M

M --> U

end

%% ==================== CROSS-CONNECTIONS ====================

R -. "📥 Feeds Metrics" .-> FGroup

I -. "🔄 Updates Context" .-> VGroup

U --> C

%% ==================== STYLING ====================

classDef lifecycle fill:#4c78a8,stroke:#2c4a6e,stroke-width:3px,color:white,font-weight:bold

classDef perception fill:#8A2BE2,stroke:#6a1bb8,stroke-width:2px,color:white

classDef reflection fill:#4169E1,stroke:#2a4a9e,stroke-width:2px,color:white

classDef integration fill:#1E90FF,stroke:#0a70d6,stroke-width:2px,color:white

classDef consolidation fill:#00BFFF,stroke:#0099cc,stroke-width:2px,color:white

classDef decision fill:#FF8C00,stroke:#cc7000,stroke-width:3px,color:white,font-weight:bold

classDef positive fill:#32CD32,stroke:#28a428,stroke-width:2px,color:white

classDef negative fill:#FF4500,stroke:#cc3700,stroke-width:2px,color:white

classDef intelligence fill:#72b7b2,stroke:#4f8d88,stroke-width:3px,color:white,font-weight:bold

classDef quality fill:#98FB98,stroke:#7ad97a,stroke-width:2px,color:#2d4a2d

classDef visual fill:#FFA500,stroke:#cc8400,stroke-width:2px,color:#4a3800

classDef fusion fill:#9d67ae,stroke:#7d4f8e,stroke-width:2px,color:white

classDef output fill:#2E8B57,stroke:#246b43,stroke-width:2px,color:white

%% Apply styles

class L lifecycle

class P perception

class R reflection

class I integration

class C consolidation

class H decision

class S positive

class W negative

class CI intelligence

class F1,F2,F3,F4 quality

class V1,V2 visual

class M fusion

class U output

%% Edge styling

linkStyle default stroke:#666,stroke-width:2px

linkStyle 0,1,2,3,4,5,6,7,8,9 stroke-width:2px

linkStyle 10,11 stroke:#666,stroke-width:2px,stroke-dasharray:5 5

linkStyle 12 stroke:#2E8B57,stroke-width:3px

%% Special edge for outcome paths

linkStyle 6 stroke:#32CD32,stroke-width:3px

linkStyle 7 stroke:#FF4500,stroke-width:3px

The “magic” is in the combinations:

- High clarity + low evidence → confident but under-supported reasoning.

- Strong domain alignment + high coherence → likely insight.

- High risk + high graph centrality → must-review node.

In Stephanie’s mind, features are the difference between processing information and experiencing cognition. They allow her to see not just what a thought is, but what it means, how reliable it is, and how it connects to everything else.

They’re also the common language between the Scorable Processor, the Memory Tool, the Nexus graph, and the distributed workers you’ll see next. The message bus doesn’t move “documents” around – it moves Scorables plus feature updates.

💾 The Memory Tool: Building the Left Hemisphere of Stephanie’s Mind

If the Nexus is Stephanie’s right hemisphere – the place where thoughts bloom and connect – then the Memory Tool is her left hemisphere: the structured, organized repository of everything she knows.

It’s not just a database; it’s the foundation of her ability to learn, recall, and build upon past experiences. It’s where enriched Scorables, their features, and their provenance ultimately come to rest.

Think of it like this:

- Scorable Processor + workers + bus → move and enrich thoughts.

- Memory Tool → keeps the parts that matter, long-term.

📇 Why Memory Matters for an AI Mind

Human cognition relies on two key memory systems:

-

Short-term working memory – for immediate reasoning

- In Stephanie: Nexus graphs, current PlanTraces, in-flight bus messages.

-

Long-term semantic memory – for durable knowledge and experience

- In Stephanie: the Memory Tool and its stores.

Without the Memory Tool, Stephanie would be amnesiac. Every run would be a fresh start. With it, she can:

- Recall past conversations and decisions.

- Build on previous insights instead of rediscovering them.

- Recognize patterns across time and across users.

- Continuously improve her reasoning based on what actually worked (and what failed).

This isn’t just storage; it’s cognitive scaffolding.

☯️ One Interface, Many Memories

The Memory Tool solves a nasty problem: Stephanie needs many different kinds of memory (documents, chats, embeddings, evaluations, traces, MemCubes…), but the rest of the system shouldn’t have to know which table or engine each one lives in.

So internally you get specialized stores; externally you get one tool.

class MemoryTool:

def __init__(self, cfg: dict, logger: Any):

self._stores = {}

# Register embedding stores

mxbai = EmbeddingStore(embedding_cfg, memory=self, logger=logger)

hnet = HNetEmbeddingStore(embedding_cfg, memory=self, logger=logger)

hf = HuggingFaceEmbeddingStore(embedding_cfg, memory=self, logger=logger)

self.register_store(mxbai)

self.register_store(hnet)

self.register_store(hf)

# Choose default embedding backend

backend = embedding_cfg.get("backend", "hnet")

self.embedding = {"hnet": hnet, "huggingface": hf}.get(backend, mxbai)

# Register the rest of the long-term memory

self.register_store(DocumentStore(self.session_maker, logger))

self.register_store(ConversationStore(self.session_maker, logger))

self.register_store(PlanTraceStore(self.session_maker, logger))

self.register_store(EvaluationStore(self.session_maker, logger))

self.register_store(MemCubeStore(self.session_maker, logger))

# …50+ more stores

Design rule:

Specialized storage, unified access. Each store handles one type of data with optimized queries, while the Memory Tool provides a single point of entry for everything.

The Scorable Processor and feature workers write into it. Nexus, trainers, dashboards, and other agents mostly read from it.

💠 The Pattern: ORM → Store → Memory Tool

Every long-term memory type follows the same three-step pattern:

-

ORM model – defines the schema: