From Photo Albums to Movies: Teaching AI to See Its Own Progress

🥱 TLDR

This post details the implementation of two papers within our self-improving AI, Stephanie:

- PACS: Implicit Actor–Critic Coupling via a Supervised Learning Framework for RLVR

- NER Retriever: Zero-Shot Named Entity Retrieval with Type-Aware Embeddings

The core idea is to move beyond static, single-point feedback to a richer, more dynamic form of learning:

- With CBR, we built a memory of what worked in the past.

- PACS enables her to learn from entire problem-solving trajectories, teaching her how she improves over time instead of just if a single answer was good.

- NER provides the contextual glue, allowing us to understand and connect the specific concepts and entities—like people, methods, and papers—within her knowledge base.

By combining these, we’ve developed a form of RLHF² (Reinforcement Learning from Applied Human + AI Feedback). This approach uses entire conversations and their resulting artifacts (blogs, code, etc.) as a dense reward signal for training, a significant step beyond traditional single-point supervision.

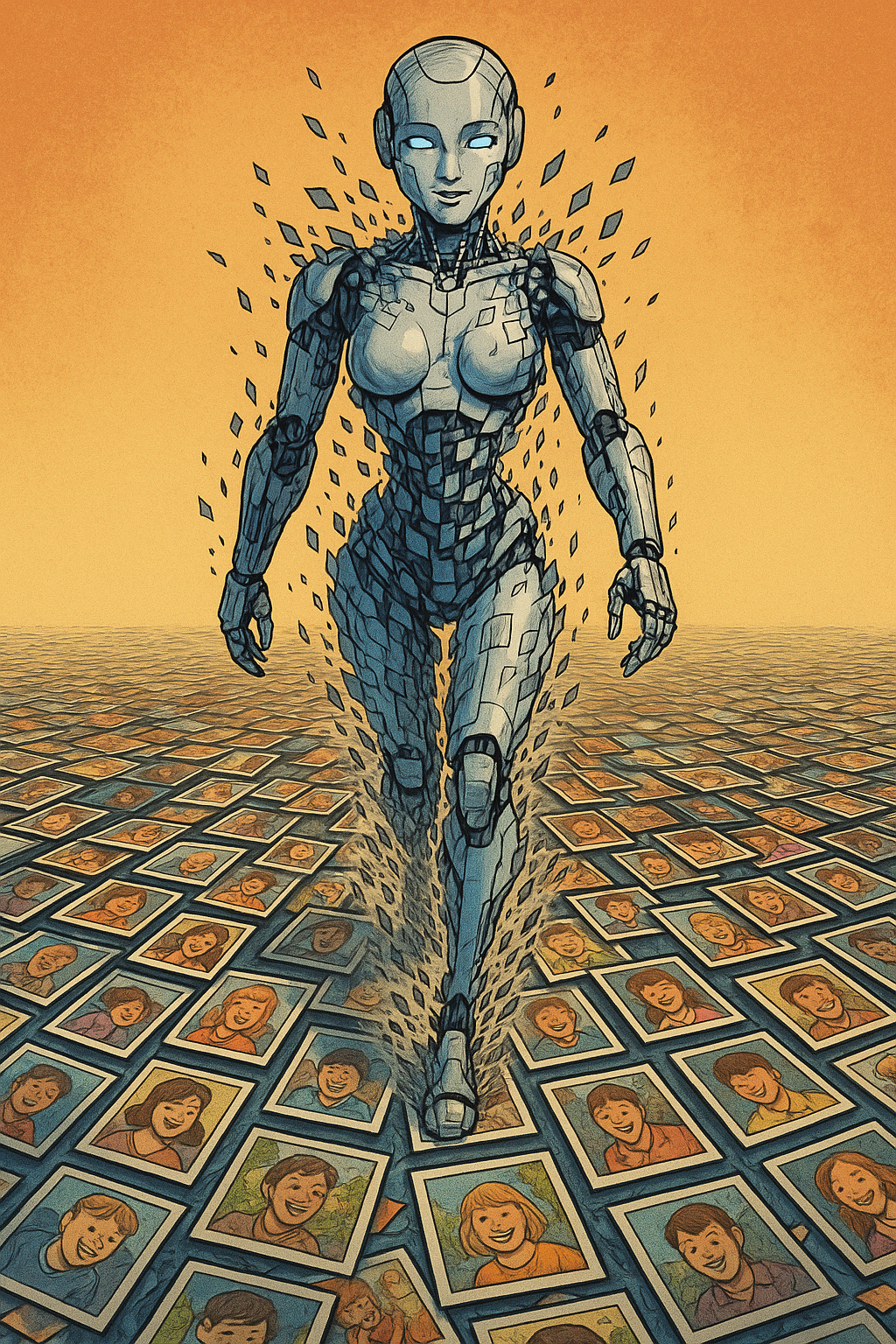

🎥 From Photo Albums to Movies: Why We Needed PACS

Most AI training today is like flipping through a photo album. Each case is judged in isolation: “Was this one answer good?” That’s what Case-Based Reasoning (CBR) gave us: the ability to remember snapshots of past interactions and reuse them when useful.

But intelligence isn’t just about snapshots. It’s about trajectories. It’s about recognizing:

- Did we get better over time?

- Did each attempt build on the last?

- Can we measure improvement as a continuous process, not a one-off judgment?

That’s why we built PACS: Policy Alignment through Case Sequences.

Where CBR was a photo album, PACS is a movie. It doesn’t just store moments; it tracks how those moments change.

This idea builds on the recent paper PACS: Implicit Actor–Critic Coupling via a Supervised Learning Framework for RLVR, which introduced a way to replace unstable reinforcement learning rewards with a supervised actor–critic coupling. We’ve taken that insight and applied it at a systems level inside Stephanie: CaseBooks become trajectories, scorers provide stable supervision, and the whole loop becomes measurable.

🔁 The PACS Training Loop: How It Works

PACS takes Stephanie’s CaseBooks (structured histories of conversations, code reviews, documents, and more) and turns them into a supervised actor–critic training loop.

flowchart TD

A["📚 CaseBook <br/> (Trajectory of Attempts)"]:::case --> B["📊 RLVR Dataset"]:::data

B --> C["🎲 Sample Group <br/> of Responses"]:::gen

C --> D["⚖️ Compute r̂ <br/> (Online vs Ref)"]:::calc

D --> E["🔄 Compute ψ via RLOO"]:::calc

E --> F["📉 BCE Loss"]:::loss

F --> G["🚀 Update Policy"]:::train

G --> H["📡 Coupling Ratio"]:::monitor

H -->|✅ Stable| I["✨ Skill Extracted"]:::good

H -->|⚠️ Unstable| J["♻️ Reset Reference"]:::bad

I --> A

J --> A

classDef case fill:#f5f5dc,stroke:#333,stroke-width:1px;

classDef data fill:#d9f0ff,stroke:#333,stroke-width:1px;

classDef gen fill:#ffe4d9,stroke:#333,stroke-width:1px;

classDef calc fill:#f0d9ff,stroke:#333,stroke-width:1px;

classDef loss fill:#ffd9ec,stroke:#333,stroke-width:1px;

classDef train fill:#d9ffd9,stroke:#333,stroke-width:1px;

classDef monitor fill:#fff3b0,stroke:#333,stroke-width:1px;

classDef good fill:#a7f3d0,stroke:#333,stroke-width:1px;

classDef bad fill:#fecaca,stroke:#333,stroke-width:1px;

Here’s why this matters:

- CaseBooks become curricula: Instead of isolated examples, Stephanie now trains on trajectories of reasoning.

- RLOO stabilizes learning: Each response is judged relative to others, preventing collapse into trivial answers.

- Coupling ratio monitors balance: We track whether the actor (generator) and critic (verifier) are in sync, ensuring the policy improves without drifting.

- Supervision replaces noisy rewards: Unlike RLHF’s unstable reinforcement signals, PACS gives direct, stable supervision anchored in Stephanie’s CaseBooks.

The result: Stephanie isn’t just storing experiences anymore. She’s learning how to improve from them.
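To make the middle of that loop concrete, here is a minimal sketch of the three calculation boxes (r̂, ψ via RLOO, BCE loss) for one sampled group, in plain Python. It follows our reading of the PACS paper: r̂ is taken as β times the log-probability gap between the online and reference policies, ψ subtracts the leave-one-out mean of the other responses, and the verifier's 0/1 outcome is the label. The function names, the value of beta, and the toy numbers are assumptions for illustration, not Stephanie's actual trainer.

```python
import math
from typing import List


def reward_proxy(logp_online: List[float], logp_ref: List[float], beta: float = 1.0) -> List[float]:
    """r_hat_i = beta * (log pi_online(o_i | q) - log pi_ref(o_i | q))."""
    return [beta * (lo - lr) for lo, lr in zip(logp_online, logp_ref)]


def rloo_scores(r_hat: List[float]) -> List[float]:
    """psi_i = r_hat_i minus the mean of the OTHER responses in the group."""
    n = len(r_hat)
    if n < 2:
        raise ValueError("RLOO needs at least two responses per group")
    total = sum(r_hat)
    return [r - (total - r) / (n - 1) for r in r_hat]


def bce_with_logits(psi: List[float], labels: List[int]) -> float:
    """Mean binary cross-entropy between sigmoid(psi_i) and the 0/1 labels."""
    def softplus(x: float) -> float:
        # Numerically stable log(1 + exp(x)).
        return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

    return sum(softplus(s) - y * s for s, y in zip(psi, labels)) / len(psi)


# Toy group of four responses to one prompt (all numbers are made up).
logp_online = [-12.0, -15.0, -14.0, -18.0]
logp_ref = [-13.0, -14.5, -14.0, -16.0]
labels = [1, 0, 1, 0]  # verifier outcome per response

r_hat = reward_proxy(logp_online, logp_ref, beta=0.5)
psi = rloo_scores(r_hat)
loss = bce_with_logits(psi, labels)
print(psi, loss)
```

Because each ψ is measured against the rest of its group, the scores are relative rather than absolute, which is the property the RLOO bullet above relies on.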

😱 Guided by Four Voices

The way we’ve been building this system hasn’t been linear. It’s more like a gradual progression, a series of steps that stretch across posts, conversations, and experiments.

At first, it was simple: implement a research assistant. That was post one. From there, each post added a new piece: scoring, embeddings, case-based reasoning, plan traces. Each step made the entity a little smarter, a little more capable of processing data.

I often think of it as the drunk man analogy: at the start, the man stumbles forward, barely able to stay upright. But with every step, he steadies himself, processing more data, making more sense, taking longer strides.

And here’s the important part: we haven’t been doing this alone. We’ve been guided by four different AIs, each with its own specialty:

- OpenAI (ChatGPT): Writes exceptionally well, and always sees the bigger picture.

- Qwen: A coder’s ally: fast, precise, and structured in its approach.

- DeepSeek: Unbelievably strong at implementation, with a completely different coding style.

- Gemini: Offers ideas the others don’t, and brings a critical reviewer’s eye to the process.

Every blog post we’ve published is a snapshot of this process. We don’t write posts as decoration; we write them because they are a reward signal. A blog post proves that we understood what we built. If we can explain it, illustrate it, and reference it, then we know we’ve internalized it.

In fact, the blog post itself has become a kind of evaluation metric:

- A short post with no code might mean the step was small.

- A long post with diagrams, images, and references means the step was significant, that we absorbed and implemented a real idea.

CaseBooks take this one step further. They tie everything together: the conversations across four AIs, the implementations in GitHub, and the blog posts that mark the milestones. They turn a scattered process into a coherent trajectory of reasoning, reflection, and growth.

♻️ RLHF²: Learning From the Learning

For the past four months, every piece of this system (Stephanie, ZeroModel, PACS, and every blog post you’re reading now) has been co-built in real time across thousands of conversations with four different AIs.

Each chat message wasn’t just dialogue; it was a training signal. Each code commit wasn’t just implementation; it was applied reinforcement.

Now, for the first time, we can tie it all together:

- Conversations link directly to GitHub commits through their timestamps.

- The reasoning we captured in blog posts becomes a structured training corpus.

- We can trace which ideas sparked which code changes, and how those changes improved her own ability to learn.

This isn’t just Reinforcement Learning from Human Feedback (RLHF). It’s RLHF²: Reinforcement Learning from Applied Human + AI Feedback.

Foundation models can’t bridge this gap. They can’t hold four months of evolving context, millions of lines of code, and tens of thousands of reasoning steps, all aligned to a singular goal: building a self-improving AI.

But we can now… so can you. And that’s the point.

flowchart TD

subgraph Input["Input: Human-AI Collaboration"]

A[🧑💻 Human User] --> B["🗨️ Multi-Agent Conversations"]

end

B -- "Raw Data" --> C["📥 Chat Import & CaseBook Creation"]

C -- "Structured Trajectories" --> D[🤖 PACS Training]

subgraph Output["Output: Generated Artifacts"]

D --> E["📄 Documents & Blog Posts"]

D --> F["💻 Code & Implementations"]

D --> G["🧠 Knowledge & Insights"]

end

Output -- "Applied Feedback & New Goals" --> A

H["♻️ RLHF²

Reinforcement Learning from

Applied Human + AI Feedback"] -.-> C

H -.-> D

style H fill:#ffa500,stroke:#333,stroke-width:3px,color:white

style D fill:#2ca02c,stroke:#333,stroke-width:3px,color:white

Your singular goal might be different: medical research, legal analysis, even building a personal AI companion. The process is the same: you capture not just results, but the improvement trajectory itself. That trajectory becomes the curriculum, and the AI learns not just to answer, but to get better at answering over time.

🧩 The CaseBook: From Collection to Curriculum

In the original CBR design, a CaseBook was essentially a namespace: a way to organize individual cases under a shared label. Useful, but flat. With PACS and longitudinal learning, we’ve redefined it:

A CaseBook is no longer just a collection. It’s a curriculum tied to a single goal.

🏋 The Enhanced CaseBook ORM

Our updated schema makes this explicit:

    # Imports assumed for this excerpt; Base is the project's declarative base.
    from datetime import datetime

    from sqlalchemy import JSON as SA_JSON  # alias assumed from its usage below
    from sqlalchemy import Column, DateTime, Integer, String, Text
    from sqlalchemy.orm import relationship


    class CaseBookORM(Base):
        __tablename__ = "casebooks"

        id = Column(Integer, primary_key=True, autoincrement=True)
        name = Column(String, nullable=False, unique=True)
        description = Column(Text, default="")  # The overarching goal
        pipeline_run_id = Column(Integer, nullable=True, index=True)
        agent_name = Column(String, nullable=True, index=True)
        tag = Column(String, nullable=False, default="default")
        created_at = Column(DateTime, default=datetime.utcnow, nullable=False)
        # e.g., {"avg_score": 0.85, "progress": 0.7}
        meta = Column(SA_JSON, nullable=True)

        cases = relationship("CaseORM", back_populates="casebook",
                             cascade="all, delete-orphan")

Each CaseBook has:

- A goal context (description, pipeline run, agent).

- A timeline (created_at).

- Meta stats (scores, progress, state).

- A curriculum of cases linked back to it.

A CaseORM represents each step in the curriculum, tied to one or more Scorables:

    class CaseORM(Base):
        __tablename__ = "cases"

        id = Column(Integer, primary_key=True, autoincrement=True)
        casebook_id = Column(Integer, ForeignKey("casebooks.id"), nullable=False)
        goal_id = Column(Integer, ForeignKey("goals.id"), nullable=True)
        prompt_text = Column(Text, nullable=True)
        agent_name = Column(Text, nullable=True)
        created_at = Column(DateTime, default=datetime.now)

        scorables = relationship("CaseScorableORM", back_populates="case")
        casebook = relationship("CaseBookORM", back_populates="cases")

And the CaseScorableORM links concrete outputs to scoring and metadata:

    class CaseScorableORM(Base):
        __tablename__ = "case_scorables"

        id = Column(Integer, primary_key=True, autoincrement=True)
        case_id = Column(Integer, ForeignKey("cases.id", ondelete="CASCADE"),
                         nullable=False)
        scorable_id = Column(String, nullable=False)
        role = Column(String, nullable=False, default="input")
        rank = Column(Integer, nullable=True)
        meta = Column(SA_JSON, nullable=True)
        created_at = Column(DateTime, default=datetime.utcnow, nullable=False)

📖 From Storage to Curriculum

With this ORM, the CaseBookStore now supports curriculum-level operations:

- Scoped CaseBooks: You can ensure a CaseBook exists for a specific run, agent, or tag (ensure_casebook_scope).

- Champion tracking: For each goal, a champion case (the best attempt so far) is tracked and updated with upsert_goal_state.

- A/B results: Improvements are logged with deltas, wins/losses, and trust scores.

- Recent pool queries: You can query recent or accepted cases for replay and training.

Example:

    # Promote a new champion if quality improved
    store.upsert_goal_state(
        casebook_id=cb.id,
        goal_id=goal.id,
        case_id=case.id,
        quality=0.92,
        only_if_better=True,
        improved=True,
        delta=+0.05,
    )

This isn’t just bookkeeping. It means we can:

- Compare early vs. late attempts in a goal.

- Track measured improvement between cases.

- Replay only champion trajectories for stable training.
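As a rough illustration of the only_if_better behavior implied by the call above, here is an in-memory sketch. The real upsert_goal_state writes to the database; the GoalState dataclass, the dict-backed InMemoryGoalStore, the win/loss counters, and the boolean return value are all assumptions made so the example runs on its own.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass
class GoalState:
    champion_case_id: int
    champion_quality: float
    wins: int = 0    # challengers that replaced the champion
    losses: int = 0  # challengers that were rejected


class InMemoryGoalStore:
    """Hypothetical stand-in for the CaseBookStore's goal-state table."""

    def __init__(self) -> None:
        self._states: Dict[Tuple[int, int], GoalState] = {}

    def upsert_goal_state(self, casebook_id: int, goal_id: int, case_id: int,
                          quality: float, only_if_better: bool = True) -> bool:
        """Return True if case_id is the champion after this call."""
        key = (casebook_id, goal_id)
        state = self._states.get(key)
        if state is None:
            # First attempt for this goal becomes the champion by default.
            self._states[key] = GoalState(case_id, quality)
            return True
        if only_if_better and quality <= state.champion_quality:
            state.losses += 1
            return False
        state.champion_case_id = case_id
        state.champion_quality = quality
        state.wins += 1
        return True

    def champion(self, casebook_id: int, goal_id: int) -> Optional[GoalState]:
        return self._states.get((casebook_id, goal_id))


store = InMemoryGoalStore()
store.upsert_goal_state(1, 1, case_id=10, quality=0.87)
store.upsert_goal_state(1, 1, case_id=11, quality=0.80)  # rejected
store.upsert_goal_state(1, 1, case_id=12, quality=0.92)  # promoted
print(store.champion(1, 1))
```

Keeping the rejected attempts counted rather than discarded is what lets early and late attempts be compared later.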

🧮 Lessons you keep

The result is that CaseBooks evolve from being static folders of examples into dynamic curricula. Each one tells the story of a goal: not only what was tried, but what worked, what improved, and what the current best solution is.

That’s the foundation PACS needs: structured, auditable trajectories where supervised signals replace the instability of ad-hoc rewards.

🔄 Applications of CaseBooks

CaseBooks give us the structure. But the real question is: what fills them?

One answer is the conversations we have every day with the four AI agents: OpenAI, Qwen, DeepSeek, and Gemini. Each conversation is a trajectory of prompts, responses, critiques, corrections, and refinements. Some are short, just a handful of turns. Others stretch into the hundreds.

This is where the relationship becomes symbiotic. Traditionally, reinforcement learning with human feedback (RLHF) relies on a person giving a simple thumbs-up or thumbs-down after a single model response. That’s a powerful technique, but it’s limited: one label, one answer, one moment in time.

What we’re doing here is different:

- Every chat becomes feedback. Instead of a single label, we have a stream of attempts, critiques, and revisions.

- Every participant adds signal. The four AI agents aren’t just answering; they’re pushing, pulling, and reshaping each other’s outputs.

- Every trajectory matters. A CaseBook isn’t built from one judgment, but from hundreds of interconnected steps leading to a goal.

You can think of it as RLHF squared: instead of single-point supervision, we’re supervising across entire conversations, often hundreds of turns long, and treating the trajectory itself as the reward signal.

This is what the Chat Importer makes possible. It takes the raw mess of these multi-agent discussions and turns them into CaseBooks: structured, deduplicated, and tied to goals, so that we can make sense of them.

flowchart TD

subgraph "Input: Human-AI Collaboration"

A[🧑💻 Human User] --> B["🗨️ Multi-Agent Conversations<br/>with OpenAI, Qwen,<br/>DeepSeek, Gemini"]

end

subgraph "Processing: Chat Import & Scoring"

B --> C["📥 Chat Importer<br/>(Structures & Deduplicates)"]

C --> D["📚 CaseBooks Creation<br/>(Trajectory Organization)"]

D --> E["⚖️ Universal Scoring<br/>(Multi-dimensional Evaluation)"]

end

subgraph "Generated Artifacts"

E --> F["📄 Documents & Blog Posts"]

E --> G["💻 Code & Implementations"]

E --> H["🧠 Knowledge & Insights"]

end

subgraph "Continuous Improvement"

F --> I["📊 Evaluation & Analysis"]

G --> I

H --> I

I --> J["🔄 Model Updates<br/>(PACS Training)"]

J --> K["🚀 Improved Stephanie"]

K --> B

end

style A fill:#e1f5fe,stroke:#01579b,stroke-width:2px

style B fill:#f3e5f5,stroke:#4a148c,stroke-width:2px

style C fill:#fff3e0,stroke:#e65100,stroke-width:2px

style D fill:#e8f5e9,stroke:#1b5e20,stroke-width:2px

style E fill:#fff9c4,stroke:#f57f17,stroke-width:2px

style F fill:#ffebee,stroke:#c62828,stroke-width:2px

style G fill:#e0f7fa,stroke:#006064,stroke-width:2px

style H fill:#fce4ec,stroke:#880e4f,stroke-width:2px

style I fill:#e8eaf6,stroke:#283593,stroke-width:2px

style J fill:#fff8e1,stroke:#ff6f00,stroke-width:2px

style K fill:#cfc,stroke:#333,stroke-width:3px

Can you see it? This is how we step beyond the foundation models.

And while in this post we’ve focused on chats as the primary source of CaseBooks, the design is far more general. Any interaction that has an input, an output, and a judgment can be wrapped in a CaseBook:

- Web searches → queries, results, refinements

- Code reviews → pull requests, comments, commits

- Document summaries → multiple drafts, clarity scores

- Logs or model outputs → errors traced to resolutions

- Model evaluations → scorables tied back to their origins

This is the real power of the framework: a CaseBook is not limited to conversations. It is the interaction unit between you and an AI, whether that interaction happens in natural language, code, search, or system traces. Each CaseBook can itself evolve into larger CaseBooks, creating a recursive structure where anything can be traced, scored, and improved.

🛠️ From Chats to Training: The PACS Pipeline

flowchart LR

A["📂 Chat Import<br><span style='color:#888'>Parse raw logs (JSON/HTML)</span>"]:::stage -->

B["📚 Chat → CaseBook<br><span style='color:#888'>Group into Cases & Scorables</span>"]:::stage -->

C["⚖️ Scoring<br><span style='color:#888'>Evaluate across dimensions</span>"]:::stage -->

D["🗂️ CaseBook Prep<br><span style='color:#888'>Aggregate & build dataset</span>"]:::stage -->

E["🤖 PACS Training<br><span style='color:#888'>Supervised actor–critic</span>"]:::final

classDef stage fill:#1f77b4,stroke:#333,stroke-width:2px,color:#fff;

classDef final fill:#2ca02c,stroke:#333,stroke-width:3px,color:#fff;

It’s one thing to talk about CaseBooks and PACS in the abstract, but how do we actually turn raw conversations into training signals? That’s where the PACS training pipeline comes in: a structured sequence of agents that transforms messy chat logs into a dataset we can learn from.

Here’s the full pipeline:

    pipeline:
      name: pacs_trainer_pipeline
      tag: pacs
      description: >
        Implementation of the PACS framework, where raw multi-agent chats are imported,
        transformed into CaseBooks, scored, and used to train models on longitudinal
        signals.
      stages:
        - name: chat_import
          description: "Import raw chat logs (JSON/HTML) and normalize into ORM objects"
        - name: chat_to_casebook
          description: "Convert conversations into CaseBooks with Cases + Scorables"
        - name: universal_scorer
          description: "Score conversations across multiple dimensions"
        - name: casebook_preparation
          description: "Aggregate scored CaseBooks into training-ready datasets"
        - name: pacs_trainer
          description: "Train actor–critic coupling with supervised signals"

🔄 Step by Step

- Chat Import: We start with raw exports from our AI collaborators (OpenAI, Qwen, DeepSeek, Gemini). The ChatImportAgent parses them, deduplicates files, and stores them as structured objects in memory. This is where messy history becomes clean data.

- Chat → CaseBook: Next, the ChatToCaseBookAgent groups conversations into CaseBooks. Each CaseBook is tied to a goal (e.g., “design PACS”) and contains Cases + Scorables built from the conversation turns. This step gives structure and purpose to otherwise flat text.

- Scoring: Raw conversations aren’t useful until we evaluate them. The UniversalScorerAgent scores every Scorable across multiple dimensions like clarity, novelty, and alignment, using ensemble scorers (SICQL, HRM, EBT). Now we have dense reward signals, not just raw text.

- CaseBook Preparation: The CaseBookPreparationAgent aggregates scored cases, tracks improvements over time, and builds training-ready datasets. This is where CaseBooks turn into actual learning trajectories.

- PACS Training: Finally, the PACSTrainerAgent takes the prepared datasets and applies the PACS algorithm. Instead of unstable reinforcement learning, we use supervised signals to couple the actor and critic. This is the step where Stephanie actually learns from her history.
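The five steps share one pattern: each stage takes the context dict, adds to it, and hands it on. A minimal sketch of that pattern follows. The stage names mirror the pipeline config, but every function body is a toy placeholder, and run_pipeline is a hypothetical runner rather than Stephanie's actual supervisor.

```python
import asyncio
from typing import Awaitable, Callable, List, Tuple

Stage = Callable[[dict], Awaitable[dict]]


async def chat_import(context: dict) -> dict:
    # Stand-in for parsing exports: one hard-coded user/assistant turn.
    context["turns"] = [("How do we dedupe turns?", "Hash each prompt/response pair.")]
    return context


async def chat_to_casebook(context: dict) -> dict:
    context["cases"] = [{"prompt": u, "response": a} for u, a in context["turns"]]
    return context


async def universal_scorer(context: dict) -> dict:
    # Placeholder score; a real scorer would evaluate each dimension.
    for case in context["cases"]:
        case["scores"] = {"clarity": 0.5}
    return context


async def casebook_preparation(context: dict) -> dict:
    context["dataset"] = [(c["prompt"], c["response"], c["scores"]) for c in context["cases"]]
    return context


async def pacs_trainer(context: dict) -> dict:
    # No training happens here: just record how many examples would be used.
    context["trained_on"] = len(context["dataset"])
    return context


STAGES: List[Tuple[str, Stage]] = [
    ("chat_import", chat_import),
    ("chat_to_casebook", chat_to_casebook),
    ("universal_scorer", universal_scorer),
    ("casebook_preparation", casebook_preparation),
    ("pacs_trainer", pacs_trainer),
]


async def run_pipeline(stages: List[Tuple[str, Stage]], context: dict) -> dict:
    """Run stages in order, threading one context dict through all of them."""
    for name, stage in stages:
        context = await stage(context)
        context.setdefault("stages_run", []).append(name)
    return context


result = asyncio.run(run_pipeline(STAGES, {}))
print(result["stages_run"])
```

Since every stage has the same signature, swapping one out means replacing a single entry in STAGES.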

🚀 An example process

Training isn’t magic. It’s a process, and the pipeline ensures that process is transparent, repeatable, and extensible:

- Each stage is an agent, so it can be swapped, extended, or improved independently.

- Reports and logs from each stage make the entire workflow auditable.

- The separation of concerns (import → structure → score → prepare → train) means we can track exactly how raw chats become learned skills.

This isn’t just about training PACS once. It’s about building the infrastructure that lets us continuously improve as new conversations, scores, and cases are added.

🗨️ The Chat Importer: From Messy Logs to Teachable Moments

flowchart LR

A["📂 Chat Import<br><span style='color:#888'>Parse raw logs (JSON/HTML)</span>"]:::firstStage -->

B["📚 Chat → CaseBook<br><span style='color:#888'>Group into Cases & Scorables</span>"]:::stage -->

C["⚖️ Scoring<br><span style='color:#888'>Evaluate across dimensions</span>"]:::stage -->

D["🗂️ CaseBook Prep<br><span style='color:#888'>Aggregate & build dataset</span>"]:::stage -->

E["🤖 PACS Training<br><span style='color:#888'>Supervised actor–critic</span>"]:::final

classDef firstStage fill:#ff7f0e,stroke:#333,stroke-width:3px,color:#fff;

classDef stage fill:#1f77b4,stroke:#333,stroke-width:2px,color:#fff;

classDef final fill:#2ca02c,stroke:#333,stroke-width:3px,color:#fff;

If CaseBooks are the curriculum, then conversations with AI are the raw classroom notes. But raw notes are messy: full of repetition, tangents, and dead-ends. To turn them into something we can actually learn from, we need a pipeline that can:

- Parse chat logs from multiple agents (OpenAI, Qwen, DeepSeek, Gemini).

- Extract the essential user → assistant turns.

- Deduplicate them so the same point isn’t learned twice.

- Transform them into CaseBooks: structured trajectories tied to goals.

That’s the job of the Chat Importer. Think of it as RLHF squared: reinforcement learning from hundreds of human–AI turns per conversation, not just a handful. Some of our paper discussions run to 300–400 exchanges. Every one of those exchanges is a potential training signal.

Here’s how the flow works:

graph TD

A["💾 Chat Exports<br/>(JSON/HTML)"] -->|Parse| B[📥 Chat Importer Tool]

B -->|Hash Check| C{Duplicate?}

C -->|Yes| D[Skip File]

C -->|No| E[Create ChatConversationORM]

E --> F[💬 ChatMessageORM]

F --> G[🔄 ChatTurnORM]

G -->|Transform| H[📚 CaseBookORM]

H --> I[CaseORM + CaseScorableORM]

I --> J[⚖️ Scoring + NER]

J --> K[🤖 PACS Training]

💾 Structuring Conversations: The ORM Layer

To make sense of chats, we built a schema that enforces order and meaning:

- ChatConversationORM → one conversation export.

- ChatMessageORM → every individual message.

- ChatTurnORM → links a user prompt with the assistant’s response.

    class ChatConversationORM(Base):
        __tablename__ = "chat_conversations"

        id = Column(Integer, primary_key=True, autoincrement=True)
        provider = Column(String, nullable=False, default="openai")
        external_id = Column(String, nullable=True)  # "conversation_id" from JSON
        title = Column(String, nullable=False)
        created_at = Column(DateTime, default=datetime.now)
        updated_at = Column(DateTime, default=datetime.now)
        meta = Column(JSON, default=dict)  # callable default: a fresh dict per row

        # links to messages and turns
        messages = relationship(
            "ChatMessageORM",
            back_populates="conversation",
            cascade="all, delete-orphan",
            order_by="ChatMessageORM.order_index",
        )
        turns = relationship("ChatTurnORM", back_populates="conversation")

    class ChatMessageORM(Base):
        __tablename__ = "chat_messages"

        id = Column(Integer, primary_key=True, autoincrement=True)
        conversation_id = Column(
            Integer,
            ForeignKey("chat_conversations.id", ondelete="CASCADE"),
            nullable=False,
        )
        role = Column(String, nullable=False)  # "user", "assistant", "system", "tool"
        text = Column(Text, nullable=True)
        parent_id = Column(
            Integer,
            ForeignKey("chat_messages.id", ondelete="CASCADE"),
            nullable=True,
        )
        order_index = Column(Integer, nullable=False)
        created_at = Column(DateTime, default=datetime.utcnow)
        meta = Column(JSON, default=dict)  # callable default: a fresh dict per row

        conversation = relationship("ChatConversationORM", back_populates="messages")
        # Self-referential link for threaded (parent/child) messages
        parent = relationship(
            "ChatMessageORM",
            remote_side=[id],
            backref="children",
            foreign_keys=[parent_id],
        )

    class ChatTurnORM(Base):
        __tablename__ = "chat_turns"

        id = Column(Integer, primary_key=True, autoincrement=True)
        conversation_id = Column(
            Integer, ForeignKey("chat_conversations.id", ondelete="CASCADE")
        )
        user_message_id = Column(
            Integer, ForeignKey("chat_messages.id", ondelete="CASCADE")
        )
        assistant_message_id = Column(
            Integer, ForeignKey("chat_messages.id", ondelete="CASCADE")
        )

        conversation = relationship("ChatConversationORM", back_populates="turns")
        user_message = relationship("ChatMessageORM", foreign_keys=[user_message_id])
        assistant_message = relationship("ChatMessageORM",
                                         foreign_keys=[assistant_message_id])

This hierarchy isolates the dialogue turns that matter for training while keeping full metadata (provider, timestamps, parent/child threads).

🗂️ The ChatStore: Managing Persistence

The ChatStore is the service layer for working with these ORMs. It handles:

- Duplicate detection with file hashes.

- Adding conversations and messages.

- Building turns from chronological message sequences.

- Purging/resetting the chat database when needed.

    class ChatStore:
        def exists_conversation(self, file_hash: str) -> bool: ...

        def add_conversation(self, data: dict) -> ChatConversationORM: ...

        def add_messages(self, conv_id: int, messages: list[dict]) -> list[ChatMessageORM]: ...

        def add_turns(self, conversation_id: int, messages: list) -> list[ChatTurnORM]: ...

This ensures the importer can scale to millions of messages without collapsing under noise or duplication.
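The least obvious of those responsibilities is building turns from a chronological message sequence. One plausible way to do it is sketched below with plain dicts instead of ORM rows. The pairing rule (the most recent user message is matched with the next assistant message, and system or tool messages are skipped) is an assumption, as is the build_turns name; the real add_turns may handle edge cases differently.

```python
from typing import Dict, List, Optional, Tuple


def build_turns(messages: List[Dict]) -> List[Tuple[int, int]]:
    """Pair each assistant reply with the latest unanswered user message.

    Returns (user_message_id, assistant_message_id) tuples in order.
    """
    ordered = sorted(messages, key=lambda m: m["order_index"])
    turns: List[Tuple[int, int]] = []
    pending_user: Optional[Dict] = None
    for msg in ordered:
        if msg["role"] == "user":
            # A second user message in a row replaces the earlier one.
            pending_user = msg
        elif msg["role"] == "assistant" and pending_user is not None:
            turns.append((pending_user["id"], msg["id"]))
            pending_user = None
        # "system" and "tool" messages never open or close a turn here.
    return turns


messages = [
    {"id": 1, "role": "system", "order_index": 0},
    {"id": 2, "role": "user", "order_index": 1},
    {"id": 3, "role": "assistant", "order_index": 2},
    {"id": 4, "role": "user", "order_index": 3},
    {"id": 5, "role": "user", "order_index": 4},
    {"id": 6, "role": "assistant", "order_index": 5},
    {"id": 7, "role": "assistant", "order_index": 6},
]
print(build_turns(messages))
```

Each returned pair corresponds to one ChatTurnORM row: a user_message_id and an assistant_message_id.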

🧑💻 The Agent: Orchestrating the Import

The ChatImportAgent is the orchestrator. It:

- Watches a directory for new chat exports.

- Logs progress and errors.

- Calls the import_conversations tool to do the heavy lifting.

- Reports back with a structured summary.

    class ChatImportAgent(BaseAgent):
        async def run(self, context: dict) -> dict:
            summary = import_conversations(self.memory, self.import_path,
                                           context=context)
            self.logger.log("ChatImportSuccess", summary)
            context["chat_imported"] = True
            return context

This makes importing conversations as easy as dropping files into a folder.

🐦🔥 Chat → CaseBook Agent: Turning Conversations Into Learning Material

Once we have the raw chat logs, we need to transform them into something we can actually learn from. That’s the job of the ChatToCaseBookAgent.

flowchart LR

A["📂 Chat Import<br><span style='color:#888'>Parse raw logs (JSON/HTML)</span>"]:::stage -->

B["📚 Chat → CaseBook<br><span style='color:#888'>Group into Cases & Scorables</span>"]:::currentStage -->

C["⚖️ Scoring<br><span style='color:#888'>Evaluate across dimensions</span>"]:::stage -->

D["🗂️ CaseBook Prep<br><span style='color:#888'>Aggregate & build dataset</span>"]:::stage -->

E["🤖 PACS Training<br><span style='color:#888'>Supervised actor–critic</span>"]:::final

classDef currentStage fill:#ff7f0e,stroke:#333,stroke-width:3px,color:#fff;

classDef stage fill:#1f77b4,stroke:#333,stroke-width:2px,color:#fff;

classDef final fill:#2ca02c,stroke:#333,stroke-width:3px,color:#fff;

Think of it like an editor who takes a messy transcript and turns it into a structured report. This agent reads through each imported chat conversation and converts it into a CaseBook—a structured set of cases and scorables that we can train on.

Why It Matters

- Granularity control: Sometimes we want to evaluate an entire conversation as one unit (did the session achieve its goal?). Other times, it’s more useful to score turns (user → assistant exchanges) or even individual messages.

- Goal linkage: Each CaseBook is tied back to the goal that conversation was about (e.g. “improve PACS trainer code”). This makes sure the training isn’t just noise—it’s always anchored to intent.

- Trajectory learning: Instead of isolated examples, CaseBooks capture the flow of reasoning within a conversation. That’s what makes them perfect training material for PACS.

🤹 How It Works

Here’s the high-level process:

flowchart LR

A["💬 Chat Conversation"]:::source --> B["📚 CaseBook"]:::case

B --> C["📝 Cases"]:::case

C --> D["🎯 Scorables"]:::scorable

D --> E["⚖️ Scoring + Training"]:::train

classDef source fill:#1f77b4,stroke:#333,stroke-width:2px,color:#fff;

classDef case fill:#9467bd,stroke:#333,stroke-width:2px,color:#fff;

classDef scorable fill:#ff7f0e,stroke:#333,stroke-width:2px,color:#fff;

classDef train fill:#2ca02c,stroke:#333,stroke-width:3px,color:#fff;

🪽 Modes of Conversion

The agent supports three levels of detail:

- Conversation mode → one Case per entire conversation (best for tracking overall goal achievement)

- Turn mode → one Case per user → assistant turn (best for learning dialogue dynamics)

- Message mode → one Case per individual message (best for fine-grained entity/link analysis)
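The three modes differ only in what counts as one unit. A small sketch of that choice, using plain dicts: the build_cases name, the mode strings, and the shape of the returned dicts are illustrative assumptions, not the agent's real interface.

```python
from typing import Dict, List, Tuple


def build_cases(messages: List[Dict], turns: List[Tuple[str, str]],
                mode: str = "turn") -> List[Dict]:
    """Return one dict per Case at the requested granularity."""
    if mode == "conversation":
        # Whole transcript collapsed into a single Case.
        transcript = "\n".join(f"{m['role']}: {m['text']}" for m in messages)
        return [{"unit": "conversation", "text": transcript}]
    if mode == "turn":
        # One Case per already-paired user -> assistant exchange.
        return [{"unit": "turn", "prompt": user, "text": assistant}
                for user, assistant in turns]
    if mode == "message":
        # One Case per message, whoever wrote it.
        return [{"unit": "message", "role": m["role"], "text": m["text"]}
                for m in messages]
    raise ValueError(f"unknown mode: {mode!r}")


messages = [
    {"role": "user", "text": "What does PACS replace?"},
    {"role": "assistant", "text": "Unstable RL rewards."},
    {"role": "user", "text": "With what?"},
    {"role": "assistant", "text": "A supervised loss."},
]
turns = [(messages[0]["text"], messages[1]["text"]),
         (messages[2]["text"], messages[3]["text"])]

for mode in ("conversation", "turn", "message"):
    print(mode, len(build_cases(messages, turns, mode)))
```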

📢 Reporting Every Step

The agent doesn’t just silently convert data. It logs detailed events for each stage:

- ✅ CaseBook created

- 🎯 Goal linked

- ✨ Scorables generated

- 📦 Case created

This means we can trace exactly what data went in, what structure came out, and where errors happened. That makes the system auditable and trustworthy.

👉 In short: this agent is the bridge between messy human–AI conversations and structured, reusable knowledge. Without it, Stephanie would just have chat logs. With it, she has training material for continuous improvement.

Finally, the importer pivots from conversation data to training data. The key function is conversation_to_casebook, which:

- Ensures a CaseBook exists for the conversation.

- Extracts user → assistant turns.

- Deduplicates with a turn hash:

      import hashlib

      def _turn_hash(user_text: str, assistant_text: str) -> str:
          # Whitespace-trimmed prompt and response, joined by a separator
          key = (user_text.strip() + "||" + assistant_text.strip()).encode("utf-8")
          return hashlib.sha256(key).hexdigest()

- Adds each turn as a Case with a Scorable.

This is the moment when messy logs crystallize into structured, reusable knowledge. Each prompt–response pair is no longer just text; it’s a Case, tied to a goal, ready to be scored, linked with NER, and used in PACS training.

🌱 Applied Reinforcement Learning from Human Feedback

The Chat Importer turns RLHF into RLHF²:

- Not just single-turn corrections, but full trajectories of improvement.

- Not just external judgment, but internal scoring (HRM, SICQL, MARS).

- Not just static logs, but structured CaseBooks that grow with every project.

It’s a symbiotic loop: we use AI to explore ideas, and Stephanie uses those explorations to improve herself.

⚖️ The Universal Scorer: Giving Feedback to Every Case

CaseBooks give a structured curriculum of past attempts. But structure alone isn’t enough; we need evaluation. Without feedback, even the best-organized curriculum is just a diary.

That’s where the Universal Scorer comes in.

flowchart LR

A["📂 Chat Import<br><span style='color:#888'>Parse raw logs (JSON/HTML)</span>"]:::stage -->

B["📚 Chat → CaseBook<br><span style='color:#888'>Group into Cases & Scorables</span>"]:::stage -->

C["⚖️ Scoring<br><span style='color:#888'>Evaluate across dimensions</span>"]:::currentStage -->

D["🗂️ CaseBook Prep<br><span style='color:#888'>Aggregate & build dataset</span>"]:::stage -->

E["🤖 PACS Training<br><span style='color:#888'>Supervised actor–critic</span>"]:::final

classDef currentStage fill:#ff7f0e,stroke:#333,stroke-width:3px,color:#fff;

classDef stage fill:#1f77b4,stroke:#333,stroke-width:2px,color:#fff;

classDef final fill:#2ca02c,stroke:#333,stroke-width:3px,color:#fff;

Think of it as Stephanie’s grading system. It takes any Scorable object (documents, prompts, hypotheses, cartridges, theorems, triples, even imported chat turns) and evaluates it across multiple dimensions:

- Novelty: is this genuinely new, or just a repeat?

- Clarity: is the reasoning easy to follow?

- Relevance: does it connect to the goal at hand?

- Implementability: can this idea be turned into code?

- Alignment: does it fit our intended direction or constraints?

These aren’t just abstract numbers. They’re the feedback signals that fuel PACS-style training: supervised, dense, and consistent across entire trajectories.

🧑💻 The Scorer Agent in Code

Here’s a simplified version of the agent:

    from tqdm import tqdm

    # BaseAgent, ScorableFactory, and ScorableRanker come from the Stephanie codebase.


    class UniversalScorerAgent(BaseAgent):
        """
        Scores any scorable object (documents, cartridges, theorems, triples, etc.)
        if not already scored. Uses an ensemble of configured scorers.
        """

        def __init__(self, cfg, memory, logger):
            super().__init__(cfg, memory, logger)
            self.enabled_scorers = cfg.get("enabled_scorers", ["sicql"])
            self.dimensions = cfg.get("dimensions", [
                "novelty", "clarity", "relevance", "implementability", "alignment"
            ])
            self.include_mars = cfg.get("include_mars", True)
            self.ranker = ScorableRanker(cfg, memory, logger)

        async def run(self, context: dict) -> dict:
            candidates = self._gather_candidates(context)
            results = []

            for obj, ttype in tqdm(candidates, desc="Scoring Scorables"):
                scorable = ScorableFactory.from_dict(obj, ttype)

                # Skip if already scored
                if self._already_scored(scorable, ttype):
                    continue

                # Run ensemble of scorers
                scored, bundle = self._score_item(context, scorable, ttype)
                results.append(scored)

            context[self.output_key] = results
            return context

At its core, the agent:

- Collects candidates from memory (documents, prompts, case scorables, etc.).

- Runs each scorable through one or more scorers (SICQL, EBT, MRQ, SVM, or even LLM baselines).

- Logs and persists results into memory for later training.
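
The three steps above can be sketched in a few self-contained lines. This is an illustration under stated assumptions, not Stephanie's implementation: `score_pending`, the `already_scored` set, and the two toy scorers are hypothetical stand-ins for the agent's memory lookups and for real scorers such as SICQL or EBT.

```python
from typing import Callable, Dict, List, Set

# Hypothetical stand-in: a scorer maps (text, dimension) to a float in [0, 1].
Scorer = Callable[[str, str], float]


def score_pending(
    candidates: List[dict],
    scorers: Dict[str, Scorer],
    dimensions: List[str],
    already_scored: Set[int],
) -> List[dict]:
    """Score every candidate that has not been scored yet with each scorer."""
    results = []
    for cand in candidates:
        if cand["id"] in already_scored:  # skip work that is already persisted
            continue
        scores = {
            f"{dim}::{name}": scorer(cand["text"], dim)
            for name, scorer in scorers.items()
            for dim in dimensions
        }
        results.append({"scorable_id": cand["id"], "scores": scores})
        already_scored.add(cand["id"])  # stands in for persisting to memory
    return results


# Toy scorers, for illustration only.
def length_scorer(text: str, dim: str) -> float:
    return min(len(text) / 100.0, 1.0)


def constant_scorer(text: str, dim: str) -> float:
    return 0.5


toy_scorers = {"toy_len": length_scorer, "toy_const": constant_scorer}
candidates = [{"id": 1, "text": "draft A"}, {"id": 2, "text": "draft B"}]
seen: Set[int] = {2}  # candidate 2 was scored in an earlier run
out = score_pending(candidates, toy_scorers, ["clarity", "novelty"], seen)
```

Because scored ids are recorded as they are processed, running the same call a second time returns an empty list, which is the behaviour the "skip if already scored" check is meant to give.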

🧮 Scoring in Practice

Suppose we’ve imported a conversation where DeepSeek drafted PACS training code and OpenAI critiqued it. Both turns become CaseScorables. The Universal Scorer evaluates them:

{
  "scorable_id": 12345,
  "text": "class PACSTrainer(BaseTrainer): ...",
  "scores": {
    "clarity::sicql": {"score": 0.82, "rationale": "Well-structured class, clear naming."},
    "novelty::sicql": {"score": 0.71, "rationale": "Builds on previous trainers with new coupling logic."},
    "implementability::sicql": {"score": 0.89, "rationale": "Ready to integrate with CaseBooks."}
  }
}

Each score includes a dimension, a source, and a rationale. These aren’t just numbers; they’re training labels for PACS.
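
To make the `dimension::source` key convention concrete, here is a small sketch that regroups the flat score map from the example into per-dimension labels. The helper names `split_score_key` and `labels_by_dimension` are hypothetical and only illustrate the idea; the real persistence format may differ.

```python
from collections import defaultdict
from typing import Dict, Tuple


def split_score_key(key: str) -> Tuple[str, str]:
    """Split a 'dimension::source' key into its two parts."""
    dimension, source = key.split("::", 1)
    return dimension, source


def labels_by_dimension(scores: Dict[str, dict]) -> Dict[str, Dict[str, float]]:
    """Regroup flat score entries as {dimension: {source: score}}."""
    grouped: Dict[str, Dict[str, float]] = defaultdict(dict)
    for key, entry in scores.items():
        dimension, source = split_score_key(key)
        grouped[dimension][source] = entry["score"]
    return dict(grouped)


scores = {
    "clarity::sicql": {"score": 0.82, "rationale": "Well-structured class, clear naming."},
    "novelty::sicql": {"score": 0.71, "rationale": "Builds on previous trainers with new coupling logic."},
    "implementability::sicql": {"score": 0.89, "rationale": "Ready to integrate with CaseBooks."},
}
labels = labels_by_dimension(scores)
```

Grouping by dimension first makes it easy to train one model per dimension while still keeping track of which scorer produced each label.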

🔬 Beyond Single Scorers: MARS and Ranking

Two extra components amplify the Universal Scorer:

- MARSCalculator compares multiple scorers (e.g., SICQL vs. HRM vs. EBT) to measure agreement, uncertainty, and bias.

- ScorableRanker lets us rank the “top-k” candidates for a goal, ensuring Stephanie focuses on the most promising ideas.

Together, they provide a richer, multi-perspective evaluation system.
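
As a rough illustration of what comparing scorers and ranking candidates involves, the sketch below measures agreement as one minus the spread of the scores and picks the top-k items by score. This is not the MARSCalculator or ScorableRanker logic; `agreement_summary` and `top_k` are hypothetical helpers, and scores are assumed to lie in [0, 1].

```python
from statistics import mean, pstdev
from typing import Dict, List, Tuple


def agreement_summary(scores_by_scorer: Dict[str, float]) -> Dict[str, float]:
    """Summarize how closely several scorers agree on one item."""
    values = list(scores_by_scorer.values())
    spread = pstdev(values)  # population std dev; 0.0 means perfect agreement
    return {"mean": mean(values), "spread": spread, "agreement": 1.0 - spread}


def top_k(items: List[Tuple[str, float]], k: int) -> List[str]:
    """Return the ids of the k highest-scoring items."""
    ranked = sorted(items, key=lambda pair: pair[1], reverse=True)
    return [item_id for item_id, _ in ranked[:k]]


summary = agreement_summary({"sicql": 0.8, "hrm": 0.6, "ebt": 0.7})
best = top_k([("a", 0.42), ("b", 0.91), ("c", 0.77)], k=2)
```

A large spread is a useful signal in its own right: it flags items where the scorers disagree and a label should be trusted less.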

📏 Measured information

- From raw chats to evaluated cases: we’ve now closed the loop between input and feedback.

- Dense reward signals: unlike RLHF’s thumbs-up/thumbs-down, each case yields a vector of supervised scores.

- Curriculum-level learning: when combined with CaseBooks, these scores become trajectories of improvement.

This is how Stephanie transforms conversations into usable training data: every case isn’t just remembered, it’s judged, contextualized, and ready to guide her growth.

📦 From CaseBooks to Training Data

Scoring on its own isn’t enough. The real power comes when we convert scored memory into supervised signals.

Every CaseBook in Stephanie already contains:

- ✅ The goal (what problem we were solving)

- ✅ The prompt or initial plan

- ✅ The Scorables (documents, hypotheses, traces)

- ✅ Their scores across dimensions (alignment, reasoning, stability, etc.)

By pairing these, we can generate dense labeled datasets. This is exactly what PACS needs: trajectories of improvement, expressed as training samples.

🧩 Class: CaseBookToRLVRDataset

The CaseBookToRLVRDataset takes a CaseBook and produces RLVR items (RLVR stands for Reinforcement Learning with Verifiable Rewards).

class CaseBookToRLVRDataset:
    def __init__(self, memory, casebook_name: str, scoring, dimensions=None):
        self.memory = memory
        self.casebook_name = casebook_name
        self.scoring = scoring
        self.dimensions = dimensions or ["alignment"]

    def build(self) -> List[RLVRItem]:
        casebook = self.memory.casebooks.get_by_name(self.casebook_name)
        if not casebook:
            raise ValueError(f"CaseBook '{self.casebook_name}' not found")

        dataset: List[RLVRItem] = []
        for case in casebook.cases:
            goal = self.memory.goals.get_by_id(case.goal_id)
            goal_text = goal.goal_text if goal else ""
            prompt = case.prompt_text or goal_text

            for i, sc in enumerate(case.scorables):
                for j, sc_other in enumerate(case.scorables):
                    if j <= i:  # avoid duplicates
                        continue

                    meta = {
                        "goal_id": case.goal_id,
                        "goal_text": goal_text,
                        "case_id": case.id,
                        "scorable_id": sc.id,
                        "competitor_id": sc_other.id,
                        "created_at": str(sc.created_at),
                    }
                    source = goal_text or prompt

                    reward_a = self.scoring.score(
                        "sicql",
                        scorable=ScorableFactory.from_orm(sc),
                        context=_as_context(source),
                        dimensions=self.dimensions,
                    ).aggregate()
                    reward_b = self.scoring.score(
                        "sicql",
                        scorable=ScorableFactory.from_orm(sc_other),
                        context=_as_context(source),
                        dimensions=self.dimensions,
                    ).aggregate()

                    dataset.append(RLVRItem(prompt, sc.text, reward_a, meta))
                    dataset.append(RLVRItem(prompt, sc_other.text, reward_b, meta))

        return dataset

Each case becomes pairwise comparisons of Scorables, with rewards attached.
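
The nested `i`/`j` loop visits each unordered pair of Scorables once. A compact equivalent, sketched here under the assumption that scoring is a pure function of the text and that texts within a case are unique, uses `itertools.combinations` and scores each text only once. The names `pairwise_items` and `toy_score` are hypothetical and exist only for this illustration.

```python
from itertools import combinations
from typing import Callable, List, Tuple


def pairwise_items(
    prompt: str,
    texts: List[str],
    score: Callable[[str], float],
) -> List[Tuple[str, str, float]]:
    """Emit (prompt, text, reward) for both members of every unordered pair."""
    # Score each text once and reuse the result across pairs
    # (assumes the texts are unique within a case).
    rewards = {text: score(text) for text in texts}
    items = []
    for a, b in combinations(texts, 2):  # same pairs as the i < j loop
        items.append((prompt, a, rewards[a]))
        items.append((prompt, b, rewards[b]))
    return items


def toy_score(text: str) -> float:
    """Hypothetical reward: fraction of a 20-character budget used."""
    return min(len(text) / 20.0, 1.0)


items = pairwise_items(
    "Explain RLOO", ["short", "a longer answer", "mid one"], toy_score
)
```

Note the consequence of emitting both members of every pair: a case with n Scorables yields n(n-1) items, and each Scorable appears n-1 times. That is worth keeping in mind when reading sample counts in the training logs.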

🤖 Agent: CaseBookPreparationAgent

To make this automatic inside pipelines, we wrap the dataset builder in an agent:

class CaseBookPreparationAgent(BaseAgent):
    """Prepares CaseBook data for PACS training"""

    async def run(self, context: dict) -> dict:
        recent_casebooks = self.memory.casebooks.list_casebooks()
        dataset = []

        for cb in recent_casebooks:
            builder = CaseBookToRLVRDataset(
                memory=self.memory,
                casebook_name=cb.name,
                scoring=self.scoring,
                dimensions=self.dimensions,
            )
            dataset.extend(builder.build() or [])

        context.update(
            {
                "rlvr_dataset": dataset,
                "casebook_names": [cb.name for cb in recent_casebooks],
            }
        )
        return context

This stage automatically:

- 📚 Loads CaseBooks

- 🔎 Extracts Scorables

- 🎯 Scores them pairwise

- 🏷️ Produces RLVRItems ready for training

📊 Why It Matters

With this pipeline, memory turns into training data:

- Every reasoning attempt is reusable

- Scores become dense rewards

- CaseBooks evolve into supervised datasets

This closes the loop from Retrieve → Reuse → Revise → Retain into Train.

PACS isn’t just about evaluating; it’s about converting evaluation into learning fuel.

flowchart TD

A[📚 CaseBook<br/>Goals + Scorables + Scores] --> B[🔎 CaseBookToRLVRDataset<br/>Pairwise scoring + rewards]

B --> C["📦 RLVR Dataset<br/>RLVRItem(query, text, reward, meta)"]

C --> D[🧑🏫 PACS Trainer<br/>Supervised Actor–Critic Loop]

D --> E[🤖 Updated Models<br/>Improved Policies + Value Heads]

subgraph "Memory Layer"

A

B

C

end

subgraph "Training Loop"

D --> E

end

👉 Next, we’ll show how this dataset plugs into the PACS trainer, teaching Stephanie to learn from her own memory.

⚙️ The PACSTrainerAgent

The PACSTrainerAgent takes these datasets and runs dimension-by-dimension training with the PACS algorithm:

class PACSTrainerAgent(BaseAgent):
    async def run(self, context: dict) -> dict:
        # Step 1: Build dataset from CaseBooks
        dataset = ScoredRLVRDataset(self.memory, self.dimensions).build()

        # Step 2: Train PACS models
        results = {}
        for dimension in self.dimensions:
            stats = self.trainer.train(dataset, dimension)
            results[dimension] = stats

        # Step 3: Save results
        context[self.output_key] = {
            "training_stats": results,
            "casebook": self.casebook_name,
        }
        return context

Every run is logged with:

- Number of samples used.

- Dimension being trained.

- Training loss curves and accuracy.

- Any errors (e.g. not enough samples).
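
The per-dimension loop and the "not enough samples" case can be sketched as follows. This is a minimal illustration, not the agent's actual logging code: `MIN_SAMPLES`, `train_per_dimension`, and `fake_train` are hypothetical, and the threshold value is arbitrary.

```python
from typing import Callable, Dict, List

MIN_SAMPLES = 4  # hypothetical threshold, chosen only for this example


def train_per_dimension(
    samples_by_dim: Dict[str, List[dict]],
    train_fn: Callable[[List[dict]], Dict[str, float]],
) -> Dict[str, dict]:
    """Train one model per dimension and record stats or the reason for skipping."""
    results: Dict[str, dict] = {}
    for dimension, samples in samples_by_dim.items():
        if len(samples) < MIN_SAMPLES:
            results[dimension] = {
                "status": "skipped",
                "error": "not enough samples",
                "num_samples": len(samples),
            }
            continue
        stats = train_fn(samples)
        results[dimension] = {"status": "trained", "num_samples": len(samples), **stats}
    return results


def fake_train(samples: List[dict]) -> Dict[str, float]:
    """Stand-in trainer that reports the mean reward as its 'loss'."""
    return {"avg_loss": sum(s["reward"] for s in samples) / len(samples)}


report = train_per_dimension(
    {
        "clarity": [{"reward": 0.5}] * 4,
        "novelty": [{"reward": 0.9}],
    },
    fake_train,
)
```

Recording a skipped dimension explicitly, rather than silently omitting it, keeps the run log complete: every configured dimension shows up with either stats or a reason.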

📊 Applied AI

By coupling Universal Scoring → RLVR Dataset → PACSTrainer, Stephanie gains:

- Trajectory-level supervision: not just isolated judgments, but learning from improvement across cases.

- Multi-dimensional feedback: novelty, clarity, relevance, implementability, and alignment all become training signals.

- Stability: supervised signals avoid the instability of raw RLHF-style reward hacking.

This is the foundation for an AI that doesn’t just remember what it tried, but systematically improves based on measured progress.

🏗️ Inside the PACS Trainer

So far we’ve seen how CaseBooks are turned into scored datasets, and how the PACSTrainerAgent orchestrates training runs. But the real contribution is the PACS Trainer itself: a fully supervised implementation of actor–critic coupling with RLOO regularization, designed to integrate directly with Stephanie’s SICQL infrastructure.

This file is huge, and that’s intentional. It contains almost everything the paper described, but made concrete in code:

- Data pipeline (RLVRItem, RLVRDataset): minimal but composable.

- Configuration system (PACSConfig): lets us control sampling, optimization, early stopping.

- Loss functions (WeightedBCEWithLogits): numerically stable and class-balanced.

- Hybrid adapter (HybridSICQLAdapter): the key bridge that lets us use either the LM’s logprobs or SICQL’s critic heads.

- Core trainer (PACSCoreTrainer): implements r̂, ψ, and RLOO, tracks metrics, and updates actor/critic jointly.

- Integration layer (PACSTrainer): ties it all into Stephanie, loads SICQL components, saves models, and logs stats to the database.
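
For reference, the three quantities the core trainer works with can be written out explicitly. This is a transcription of what `_rhat_vector`, `_psi_rloo`, and `WeightedBCEWithLogits` compute in the listing, not a restatement of the paper's notation. The symbols are introduced here as assumptions: $s_\theta$ and $s_{\mathrm{ref}}$ are the online and reference scores (summed log-probability in "logprob" mode, critic Q-value logit in "critic" mode), $G$ is the group size, $R_i \in \{0, 1\}$ is the verifier label, and $w$ is `pos_weight`.

```latex
\[
\begin{aligned}
\hat{r}_i &= \beta \left( s_\theta(q, o_i) - s_{\mathrm{ref}}(q, o_i) \right) \\
\psi_i &= \hat{r}_i - \frac{1}{G - 1} \sum_{j \neq i} \hat{r}_j \\
\mathcal{L} &= -\frac{1}{G} \sum_{i=1}^{G}
  \left[ w \, R_i \log \sigma(\psi_i) + (1 - R_i) \log\left(1 - \sigma(\psi_i)\right) \right]
\end{aligned}
\]
```

One property follows directly from the second line: the $\psi_i$ within a group always sum to zero, so the loss only rewards an attempt for scoring higher than its siblings, never for a uniform shift of the whole group.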

🔁 The PACS Training Loop

flowchart TD

A["📚 CaseBook"]:::casebook --> B["📊 RLVRDataset"]:::dataset

B --> C["👥 Sample Group"]:::sampling

C --> D["🧮 Compute r̂"]:::computation

D --> E["🔄 Compute ψ (RLOO)"]:::computation

E --> F["📉 Supervised Loss"]:::loss

F --> G["🔄 Update Policy"]:::update

G --> H{"⚖️ Coupling Ratio"}:::decision

H -->|"✅ Stable"| I["💾 Store Skill Filter"]:::success

H -->|"⚠️ Unstable"| J["🎛️ Adjust α"]:::adjustment

I --> A

J --> A

classDef casebook fill:#e1f5fe,stroke:#01579b,stroke-width:2px

classDef dataset fill:#f3e5f5,stroke:#4a148c,stroke-width:2px

classDef sampling fill:#fff3e0,stroke:#e65100,stroke-width:2px

classDef computation fill:#e8f5e9,stroke:#1b5e20,stroke-width:2px

classDef loss fill:#ffebee,stroke:#c62828,stroke-width:2px

classDef update fill:#e0f7fa,stroke:#006064,stroke-width:2px

classDef decision fill:#fce4ec,stroke:#880e4f,stroke-width:2px

classDef success fill:#e8eaf6,stroke:#283593,stroke-width:2px

classDef adjustment fill:#fff8e1,stroke:#ff6f00,stroke-width:2px

📜 Full Code Listing

🧭 Guide to the PACS Trainer Code

The PACS Trainer is the engine room of this whole post. It looks intimidating at first (over 300 lines of PyTorch, dataset classes, and training logic), but don’t worry: you don’t need to memorize every line. Instead, focus on these 5 landmarks:

- RLVRItem and RLVRDataset: define the smallest learning unit, a reasoning attempt wrapped in metadata, and the collection it is sampled from. Think of the item as the “atom” of the system.

- HybridSICQLAdapter.sample_group(): generates groups of attempts to compare. This is where raw responses become structured trajectories.

- PACSCoreTrainer._rhat_vector(): computes r̂ for each attempt, the β-scaled gap between the online and reference scores. This is the start of turning feedback into math.

- PACSCoreTrainer._psi_rloo(): implements the RLOO (leave-one-out) advantage estimation. This is the heart of PACS: measuring improvement across a sequence of attempts.

- PACSTrainer.train(): the conductor. It brings everything together: dataset, policy, verifier, and update loop.

If you keep these functions in mind as you scan through the code, the whole system will click into place. The rest is supporting machinery (logging, batching, configs) that makes it run at scale.
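
Before reading the full file, it may help to see the r̂ → ψ → loss path on a toy group. The sketch below uses plain Python instead of PyTorch so it runs anywhere; `psi_rloo` mirrors the leave-one-out formula from the listing, while `bce_with_logits` is a hand-written stand-in for the unweighted case (`pos_weight = 1`). The scores and labels are made up for illustration.

```python
import math
from typing import List


def psi_rloo(rhat: List[float]) -> List[float]:
    """Leave-one-out baseline: psi_i = rhat_i - mean of the other rhat_j."""
    g = len(rhat)
    if g <= 1:
        return list(rhat)
    total = sum(rhat)
    return [r - (total - r) / (g - 1) for r in rhat]


def bce_with_logits(logits: List[float], labels: List[float]) -> float:
    """Mean binary cross-entropy on raw logits, in a numerically stable form."""
    losses = []
    for x, y in zip(logits, labels):
        # max(x, 0) - x*y + log(1 + exp(-|x|)) equals
        # -[y * log(sigmoid(x)) + (1 - y) * log(1 - sigmoid(x))]
        losses.append(max(x, 0.0) - x * y + math.log1p(math.exp(-abs(x))))
    return sum(losses) / len(losses)


# Toy group of G = 4 attempts: rhat = beta * (online score - reference score).
beta = 1.0
online = [2.0, 0.5, 1.0, 0.5]
reference = [1.0, 0.5, 1.0, 1.5]
rhat = [beta * (o - r) for o, r in zip(online, reference)]

psi = psi_rloo(rhat)
labels = [1.0, 0.0, 1.0, 0.0]  # made-up verifier verdicts for each attempt
loss = bce_with_logits(psi, labels)
```

The attempt whose online score rose relative to the reference gets a positive ψ, the one that fell gets a negative ψ, and the two unchanged attempts sit at zero. Training then pushes ψ up for attempts the verifier accepted and down for those it rejected.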

Here is the complete implementation:

# stephanie/scoring/training/pacs_trainer.py

from __future__ import annotations

import os

import json

import copy

from datetime import datetime

from stephanie.scoring.scorable_factory import TargetType

import torch

import torch.nn as nn

import torch.nn.functional as F

import numpy as np

from typing import Dict, Any, Optional, Callable, List

from stephanie.scoring.training.base_trainer import BaseTrainer

from stephanie.models.training_stats import TrainingStatsORM

from stephanie.models.model_version import ModelVersionORM

from stephanie.models.belief_cartridge import BeliefCartridgeORM

from stephanie.analysis.scorable_classifier import ScorableClassifier

from stephanie.scoring.scorer.sicql_scorer import SICQLScorer

import random

from dataclasses import dataclass

# ==============================

# Core RLVR data structures

# ==============================

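@dataclass
class RLVRItem:
    """Minimal data structure for PACS training samples"""
    # Field order matches the RLVRItem(query, text, reward, meta) calls
    # made by the CaseBook dataset builder.
    query: str
    text: str = ""
    reward: float = 0.0
    meta: Optional[Dict[str, Any]] = None

    # The trainer below reads item.prompt / item.response, so expose aliases.
    @property
    def prompt(self) -> str:
        return self.query

    @property
    def response(self) -> str:
        return self.text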

class RLVRDataset(torch.utils.data.Dataset):

"""Dataset of RLVRItems that can be sampled in batches"""

def __init__(self, items: List[RLVRItem]):

self.items = items

def __len__(self) -> int:

return len(self.items)

def __getitem__(self, idx: int) -> RLVRItem:

return self.items[idx]

def sample(self, batch_size: int) -> Dict[str, List]:

"""Sample a batch of items with inputs and references"""

indices = random.sample(range(len(self)), min(batch_size, len(self)))

batch = [self.items[i] for i in indices]

return {

"inputs": [item.query for item in batch],

"refs": [item.meta.get("expected", "") if item.meta else "" for item in batch]

}

# ==============================

# PACS Configuration

# ==============================

@dataclass

class PACSConfig:

"""Configurable parameters for PACS training"""

score_mode: str = "critic" # "logprob" | "critic"

beta: float = 1.0 # scale for r̂ (logprob/logit delta)

group_size: int = 8 # samples per prompt

max_new_tokens: int = 256 # response generation length

temperature: float = 0.6 # sampling temperature

top_p: float = 0.96 # nucleus sampling parameter

lr: float = 1e-6 # learning rate

weight_decay: float = 0.01 # weight decay

grad_clip: float = 1.0 # gradient clipping norm

steps_per_reset: int = 200 # steps between reference model resets

pos_weight: float = 1.0 # class balancing for BCE

log_every: int = 10 # logging frequency

early_stopping_patience: int = 3

early_stopping_min_delta: float = 1e-4

# ==============================

# Weighted BCE Loss

# ==============================

class WeightedBCEWithLogits(nn.Module):

"""BCEWithLogitsLoss with class weighting"""

def __init__(self, pos_weight: float = 1.0):

super().__init__()

self.register_buffer("pos_weight", torch.tensor(float(pos_weight)))

def forward(self, logits: torch.Tensor, targets: torch.Tensor) -> torch.Tensor:

return F.binary_cross_entropy_with_logits(

logits,

targets,

pos_weight=self.pos_weight

)

# ==============================

# Hybrid SICQL Adapter (Critical Component)

# ==============================

class HybridSICQLAdapter:
    """
    Adapter unifying actor (HF LM) and critic (SICQL InContextQModel).

    - Actor: HuggingFace causal LM (text in → text out, uses tokenizer)
    - Critic: SICQL InContextQModel (embeddings in → Q/V/π outputs)
    """

    def __init__(self, memory, actor_lm, tokenizer, critic_head=None, device=None):

self._device = torch.device(device or ("cuda" if torch.cuda.is_available() else "cpu"))

self.memory = memory

# --- Actor setup ---

self.actor = actor_lm.to(self._device)

self.actor.train()

# Reference actor (frozen)

self.actor_ref = copy.deepcopy(actor_lm).to(self._device)

self.actor_ref.eval()

for p in self.actor_ref.parameters():

p.requires_grad_(False)

# --- Critic setup (supports both SICQL + HuggingFace critics) ---

self.critic = critic_head.to(self._device) if critic_head is not None else None

self.critic_ref = None

self.critic_type = None

if self.critic is not None:

# Detect critic type

if hasattr(self.critic, "q_head") and hasattr(self.critic, "encoder"):

# Looks like SICQL InContextQModel

self.critic_type = "sicql"

self.critic.train()

self.critic_ref = type(self.critic)(

encoder=copy.deepcopy(self.critic.encoder),

q_head=copy.deepcopy(self.critic.q_head),

v_head=copy.deepcopy(self.critic.v_head),

pi_head=copy.deepcopy(self.critic.pi_head),

embedding_store=self.memory.embedding,

device=self._device,

).to(self._device)

self.critic_ref.load_state_dict(self.critic.state_dict())

self.critic_ref.eval()

for p in self.critic_ref.parameters():

p.requires_grad_(False)

else:

# Assume HuggingFace-style sequence classification model

self.critic_type = "hf"

self.critic.train()

self.critic_ref = copy.deepcopy(self.critic).to(self._device)

self.critic_ref.eval()

for p in self.critic_ref.parameters():

p.requires_grad_(False)

# --- Tokenizer setup ---

self.tok = tokenizer

if getattr(self.tok, "pad_token", None) is None:

if getattr(self.tok, "eos_token", None) is not None:

self.tok.pad_token = self.tok.eos_token

else:

self.tok.add_special_tokens({"pad_token": "[PAD]"})

self.actor.resize_token_embeddings(len(self.tok))

self.actor_ref.resize_token_embeddings(len(self.tok))

self.memory = memory

def device(self):

return self._device

# ---------- Actor (HF LM) ----------

@torch.no_grad()

def sample_group(self, prompt, group_size, max_new_tokens=256, temperature=0.6, top_p=0.95):

"""Generate multiple responses from the HuggingFace LM."""

inputs = self.tok(

prompt,

return_tensors="pt",

padding=True,

truncation=True,

max_length=1024

).to(self._device)

responses = []

for _ in range(group_size):

out = self.actor.generate(

**inputs,

do_sample=True,

temperature=temperature,

top_p=top_p,

max_new_tokens=max_new_tokens,

pad_token_id=self.tok.pad_token_id or self.tok.eos_token_id,

eos_token_id=self.tok.eos_token_id,

)

prompt_len = inputs["input_ids"].size(1)

response_ids = out[0, prompt_len:]

response = self.tok.decode(response_ids, skip_special_tokens=True)

responses.append(response)

return responses

def logprob_sum(self, prompt, response):

"""Compute logprob sum with online actor LM."""

return self._sum_logprobs(self.actor, prompt, response)

@torch.no_grad()

def logprob_sum_ref(self, prompt, response):

"""Compute logprob sum with frozen actor reference."""

return self._sum_logprobs(self.actor_ref, prompt, response).detach()

def _sum_logprobs(self, model, prompt, response):

"""Sum log probabilities for actor outputs."""

full_text = prompt + response

enc = self.tok(full_text, return_tensors="pt", truncation=True, max_length=2048).to(self._device)

input_ids = enc["input_ids"]

attention_mask = enc["attention_mask"]

outputs = model(input_ids=input_ids, attention_mask=attention_mask, labels=input_ids)

logits = outputs.logits

log_probs = F.log_softmax(logits, dim=-1)

shift_logits = log_probs[..., :-1, :].contiguous()

shift_labels = input_ids[..., 1:].contiguous()

token_log_probs = torch.gather(shift_logits, dim=-1, index=shift_labels.unsqueeze(-1)).squeeze(-1)

prompt_len = len(self.tok(prompt, return_tensors="pt")["input_ids"][0])

response_log_probs = token_log_probs[:, prompt_len - 1:]

return response_log_probs.sum(dim=1).squeeze()

# ---------- Critic (SICQL InContextQModel) ----------

def _critic_logit(self, model, prompt, response):

"""Compute Q-value logit from SICQL critic (InContextQModel)."""

if model is None:

raise ValueError("Critic head required for critic mode")

if self.memory is None:

raise ValueError("Memory with embedding system required for critic")

# Get embeddings

prompt_emb_np = self.memory.embedding.get_or_create(prompt)

response_emb_np = self.memory.embedding.get_or_create(response)

prompt_emb = torch.tensor(prompt_emb_np, device=self._device, dtype=torch.float32).unsqueeze(0)

response_emb = torch.tensor(response_emb_np, device=self._device, dtype=torch.float32).unsqueeze(0)

out = model(prompt_emb, response_emb)

return out["q_value"].squeeze()

def critic_logit(self, prompt, response):

return self._critic_logit(self.critic, prompt, response)

@torch.no_grad()

def critic_logit_ref(self, prompt, response):

return self._critic_logit(self.critic_ref, prompt, response).detach()

# ---------- Ref sync ----------

def hard_reset_ref(self):

"""Reset references to current models."""

self.actor_ref.load_state_dict(self.actor.state_dict())

self.actor_ref.eval()

if self.critic and self.critic_ref:

self.critic_ref.load_state_dict(self.critic.state_dict())

self.critic_ref.eval()

# ==============================

# PACS Trainer Core

# ==============================

class PACSCoreTrainer:

"""PACS trainer implementing RLVR via Supervised with RLOO regularization"""

def __init__(

self,

policy: HybridSICQLAdapter,

cfg: PACSConfig,

verifier: Callable[[str, str, Optional[Dict[str, Any]]], int],

logger: Optional[Callable[[Dict[str, Any]], None]] = None,

online_training: bool = False

):

"""

Args:

policy: Policy adapter (HybridSICQLAdapter)

cfg: PACS configuration

verifier: Function to verify (prompt, response, meta) → {0,1}

logger: Optional logging function

"""

self.policy = policy

self.cfg = cfg

self.verifier = verifier

self.logger = logger or (lambda d: None)

self.online_training = online_training

# Determine which parameters to train

if cfg.score_mode == "logprob":

params = self.policy.actor.parameters()

elif cfg.score_mode == "critic":

if self.policy.critic is None:

raise ValueError("critic_head required for score_mode='critic'")

params = self.policy.critic.parameters()

else:

raise ValueError("score_mode must be 'logprob' or 'critic'")

# Optimizer

self.optimizer = torch.optim.AdamW(

params,

lr=cfg.lr,

weight_decay=cfg.weight_decay

)

# Learning rate scheduler

self.scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(

self.optimizer,

mode="min",

factor=0.5,

patience=5

)

# Loss function

# Use BCEWithLogitsLoss to maintain numerical stability.

# The paper's Equation 1 shows the loss is computed with σ(ψ),

# but PyTorch's BCEWithLogitsLoss combines sigmoid + BCE in a numerically stable way.

self.loss_fn = WeightedBCEWithLogits(pos_weight=cfg.pos_weight)

# Training state

self._step = 0

self.best_loss = float("inf")

self.early_stop_counter = 0

# ---- Helpers ----

def _rhat_vector(self, prompt: str, responses: List[str]) -> torch.Tensor:

"""

Compute r̂ for each response in group based on chosen score_mode.

In both modes:

r̂ = β·(score_online - score_ref)

"""

vals = []

if self.cfg.score_mode == "logprob":

for r in responses:

lp = self.policy.logprob_sum(prompt, r) # requires grad

lpr = self.policy.logprob_sum_ref(prompt, r) # no grad

vals.append(self.cfg.beta * (lp - lpr))

else: # critic

for r in responses:

lg = self.policy.critic_logit(prompt, r) # requires grad

lgr = self.policy.critic_logit_ref(prompt, r) # no grad

vals.append(self.cfg.beta * (lg - lgr))

return torch.stack(vals) # (G,)

def _psi_rloo(self, rhat: torch.Tensor) -> torch.Tensor:

"""

Compute ψ using RLOO (leave-one-out) regularization.

ψ_i = r̂_i − mean_{j≠i} r̂_j

"""

G = rhat.numel()

if G <= 1:

return rhat

# Sum of all r̂ values (used below to form each leave-one-out mean)

total = rhat.sum()

# Compute leave-one-out mean for each element

psi = rhat - (total - rhat) / (G - 1)

return psi

def _verify_labels(

self,

prompt: str,

responses: List[str],

meta: Optional[Dict[str, Any]]

) -> torch.Tensor:

"""Verify responses and return labels (0 or 1)"""

labels = []

for r in responses:

try:

labels.append(float(self.verifier(prompt, r, meta)))

except Exception as e:

print(f"Verification failed: {e}")

labels.append(0.0)

return torch.tensor(labels, dtype=torch.float32, device=self.policy.device())

def _log(self, d: Dict[str, Any]):

"""Log metrics with step counter"""

d = {"step": self._step, **d}

self.logger(d)

def _check_early_stopping(self, loss: float) -> bool:

"""Check if early stopping criteria are met"""

if loss < self.best_loss - self.cfg.early_stopping_min_delta:

self.best_loss = loss

self.early_stop_counter = 0

return False

self.early_stop_counter += 1

return self.early_stop_counter >= self.cfg.early_stopping_patience

# ---- Training API ----

def train(

self,

dataset: RLVRDataset,

max_steps: Optional[int] = None

) -> Dict[str, Any]:

"""

Train policy using PACS with RLOO regularization.

Args:

dataset: RLVRDataset containing training samples

max_steps: Maximum number of training steps

Returns:

Training statistics

"""

# Training metrics

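        metrics = {
            "losses": [],
            "psi_means": [],
            "rhat_means": [],
            "label_rates": [],
            "acc_proxies": [],
            "entropies": [],
            # Keys appended to by _train_on_item (online mode); initializing
            # them here avoids a KeyError on the first online step.
            "actor_components": [],
            "critic_raws": [],
            "critic_grads": [],
            "critic_components": [],
            "coupling_ratios": [],
            "binary_entropies": [],
            "policy_entropies": [],
            "sequence_entropies": [],
            "response_entropy": [],
        }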

# Training loop

step = 0

while max_steps is None or step < max_steps:

try:

# Sample random item from dataset

item = dataset[random.randint(0, len(dataset) - 1)]

if self.online_training:

self._train_on_item(item, metrics)

else:

self._train_on_item_offline(item, metrics)

# Periodic reference model reset

if self.cfg.steps_per_reset and (self._step % self.cfg.steps_per_reset == 0):

self.policy.hard_reset_ref()

# Log metrics periodically

if self._step % self.cfg.log_every == 0:

self._log({

"loss": metrics["losses"][-1] if metrics["losses"] else 0.0,

"psi_mean": metrics["psi_means"][-1] if metrics["psi_means"] else 0.0,

"rhat_mean": metrics["rhat_means"][-1] if metrics["rhat_means"] else 0.0,

"label_pos_rate": metrics["label_rates"][-1] if metrics["label_rates"] else 0.0,

"acc_proxy": metrics["acc_proxies"][-1] if metrics["acc_proxies"] else 0.0,

"mode": self.cfg.score_mode,

"entropy": metrics["entropies"][-1] if metrics["entropies"] else 0.0

})

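                step += 1
                self._step += 1

            except Exception as e:
                print(f"Training error at step {self._step}: {e}")
                # Count the failed attempt too, so a persistent error
                # cannot keep the loop from ever reaching max_steps.
                step += 1
                self._step += 1
                continue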

# Return training statistics

return {

"avg_loss": float(np.mean(metrics["losses"])) if metrics["losses"] else 0.0,

"avg_psi": float(np.mean(metrics["psi_means"])) if metrics["psi_means"] else 0.0,

"avg_rhat": float(np.mean(metrics["rhat_means"])) if metrics["rhat_means"] else 0.0,

"pos_rate": float(np.mean(metrics["label_rates"])) if metrics["label_rates"] else 0.0,

"acc_proxy": float(np.mean(metrics["acc_proxies"])) if metrics["acc_proxies"] else 0.0,

"entropy": float(np.mean(metrics["entropies"])) if metrics["entropies"] else 0.0,

"steps": self._step

}

def _train_on_item_offline(self, item: RLVRItem, metrics: Dict[str, list]) -> None:

"""

Train directly on dataset rewards (offline supervised PACS).

Uses RLVRItem.prompt, RLVRItem.response, and RLVRItem.reward

as the training signal without generating new responses.

"""

prompt = item.prompt

response = item.response

reward_val = float(item.reward)

# Convert reward into tensor

reward = torch.tensor([reward_val], device=self.policy.device(), dtype=torch.float32)

# --- Compute r̂ (online vs reference) ---

if self.cfg.score_mode == "logprob":

lp = self.policy.logprob_sum(prompt, response) # requires grad

lpr = self.policy.logprob_sum_ref(prompt, response) # no grad

rhat = self.cfg.beta * (lp - lpr)

else: # critic mode

lg = self.policy.critic_logit(prompt, response) # requires grad

lgr = self.policy.critic_logit_ref(prompt, response) # no grad

rhat = self.cfg.beta * (lg - lgr)

psi = rhat.unsqueeze(0) # keep batch dimension

# --- Compute supervised loss ---

loss = self.loss_fn(psi, reward)

# --- Optimization ---

self.optimizer.zero_grad(set_to_none=True)

loss.backward()

if self.cfg.grad_clip:

torch.nn.utils.clip_grad_norm_(

self.optimizer.param_groups[0]["params"],

self.cfg.grad_clip

)

self.optimizer.step()

self.scheduler.step(loss.item())

# --- Metrics ---

with torch.no_grad():

psi_val = float(psi.item())

pred = (torch.sigmoid(psi) > 0.5).float()

acc_proxy = float((pred == reward).float().mean().item())

metrics["losses"].append(float(loss.item()))

metrics["psi_means"].append(psi_val)

metrics["rhat_means"].append(float(rhat.item()))

metrics["label_rates"].append(reward_val)

metrics["acc_proxies"].append(acc_proxy)

# --- Log ---

self._log({

"mode": f"{self.cfg.score_mode}-offline",

"loss": float(loss.item()),

"psi": psi_val,

"rhat": float(rhat.item()),

"reward": reward_val,

"acc_proxy": acc_proxy,

"prompt_snippet": prompt[:80] + ("..." if len(prompt) > 80 else "")

})

def _train_on_item(self, item: RLVRItem, metrics: Dict[str, list]) -> None:

"""Train on a single dataset item and update metrics"""

prompt = item.prompt

meta = item.meta

# Generate response group

responses = self.policy.sample_group(

prompt,

group_size=self.cfg.group_size,

max_new_tokens=self.cfg.max_new_tokens,

temperature=self.cfg.temperature,

top_p=self.cfg.top_p,

)

# Verify responses

labels = self._verify_labels(prompt, responses, meta)

# Compute r̂ and ψ

rhat = self._rhat_vector(prompt, responses) # (G,)

psi = self._psi_rloo(rhat)

# Compute loss

loss = self.loss_fn(psi, labels)

# Detach psi for component calculations

psi_detached = psi.detach()

psi_sigmoid = torch.sigmoid(psi_detached)

# Calculate actor component magnitude

with torch.no_grad():

# Actor component: policy improvement term

# [l(q, o; πθ)]∇θ log πθ(o|q)

cross_entropy_loss = -(labels * torch.log(psi_sigmoid + 1e-8) +

(1 - labels) * torch.log(1 - psi_sigmoid + 1e-8))

actor_component = cross_entropy_loss.mean().item()

# Critic component: reward estimation term

# (R(q, o) - σ(ψ(q, o; πθ)))∇θψ(q, o; πθ)

critic_raw = (labels - psi_sigmoid).mean().item() # Prediction error

critic_grad = psi.mean().item() # Gradient magnitude

critic_component = critic_raw * critic_grad # Full critic update

# Calculate coupling ratio (absolute values to handle sign)

coupling_ratio = abs(actor_component) / (abs(critic_component) + 1e-8)

# Track policy entropy for exploration analysis

policy_entropy = -torch.mean(psi_sigmoid * torch.log(psi_sigmoid + 1e-8) +

(1 - psi_sigmoid) * torch.log(1 - psi_sigmoid + 1e-8)).item()

sequence_entropy = self.policy.calculate_response_entropy(prompt, responses)

# Optimization

self.optimizer.zero_grad(set_to_none=True)

loss.backward()

# Gradient clipping

if self.cfg.grad_clip is not None:

torch.nn.utils.clip_grad_norm_(

self.optimizer.param_groups[0]["params"],

self.cfg.grad_clip

)

self.optimizer.step()

# Update learning rate

self.scheduler.step(loss.item())

# Compute metrics

with torch.no_grad():

psi_mean = float(psi.mean().item())

rhat_mean = float(rhat.mean().item())

label_pos_rate = float(labels.mean().item())

preds = (torch.sigmoid(psi) > 0.5).float()

acc_proxy = float((preds == labels).float().mean().item())

entropy = self.policy.calculate_response_entropy(prompt, responses)

grad_analysis = self._analyze_gradients(psi, labels)

metrics["actor_components"].append(grad_analysis["actor_component"])

metrics["critic_raws"].append(grad_analysis["critic_raw"])

metrics["critic_grads"].append(grad_analysis["critic_grad"])

metrics["critic_components"].append(grad_analysis["critic_component"])

metrics["coupling_ratios"].append(grad_analysis["coupling_ratio"])

metrics["binary_entropies"].append(grad_analysis["binary_entropy"])

metrics["losses"].append(float(loss.item()))

metrics["psi_means"].append(psi_mean)

metrics["rhat_means"].append(rhat_mean)

metrics["label_rates"].append(label_pos_rate)

metrics["acc_proxies"].append(acc_proxy)

metrics["entropies"].append(entropy)

metrics["policy_entropies"].append(policy_entropy)

metrics["sequence_entropies"].append(sequence_entropy)

metrics["response_entropy"].append(

self.policy.calculate_response_entropy(prompt, responses)

)

# Log metrics

self._log({

"loss": float(loss.item()),

"psi_mean": psi_mean,

"rhat_mean": rhat_mean,

"label_pos_rate": label_pos_rate,

"acc_proxy": acc_proxy,

"entropy": entropy,

"mode": self.cfg.score_mode,

"actor_component": actor_component,

"critic_component": critic_component,

"coupling_ratio": coupling_ratio,

**grad_analysis,

"policy_entropy": self.policy.calculate_response_entropy(prompt, responses)

})

    def _analyze_gradients(self, psi: torch.Tensor, labels: torch.Tensor) -> Dict[str, float]:
        """Analyze gradient components as in Equation 6 of the PACS paper."""
        # Compute sigmoid once for efficiency
        psi_sigmoid = torch.sigmoid(psi)

        # 1. ACTOR component: policy improvement term
        #    [l(q, o; πθ)] ∇θ log πθ(o|q), where l is the per-sample cross-entropy
        cross_entropy_loss = -(
            labels * torch.log(psi_sigmoid + 1e-8)
            + (1 - labels) * torch.log(1 - psi_sigmoid + 1e-8)
        )
        actor_component = cross_entropy_loss.mean().item()

        # 2. CRITIC component breakdown
        prediction_error = labels - psi_sigmoid
        critic_raw = prediction_error.mean().item()  # mean of R - σ(ψ)
        critic_grad = psi.mean().item()  # mean of ψ, a scalar stand-in for the ∇θψ term
        critic_component = (prediction_error * psi).mean().item()  # mean of (R - σ(ψ)) * ψ

        # 3. Coupling ratio (epsilon in the denominator avoids division by zero)
        coupling_ratio = abs(actor_component) / (abs(critic_component) + 1e-8)

        # 4. Binary entropy (prediction confidence)
        binary_entropy = -torch.mean(
            psi_sigmoid * torch.log(psi_sigmoid + 1e-8)
            + (1 - psi_sigmoid) * torch.log(1 - psi_sigmoid + 1e-8)
        ).item()

        return {
            "actor_component": actor_component,
            "critic_raw": critic_raw,
            "critic_grad": critic_grad,
            "critic_component": critic_component,
            "coupling_ratio": coupling_ratio,
            "binary_entropy": binary_entropy,
        }
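
To make the numbers returned by `_analyze_gradients` easier to read, here is a minimal sketch in plain Python (no PyTorch) that computes the same statistics for a tiny batch. The name `pacs_diagnostics` is hypothetical and not part of Stephanie; it only mirrors the formulas in the method above, assuming `psi` holds raw logits and `labels` holds 0/1 verifier outcomes.

```python
import math
from typing import Dict, List

EPS = 1e-8  # same smoothing constant as in the method above


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def pacs_diagnostics(psi: List[float], labels: List[int]) -> Dict[str, float]:
    """Hypothetical stand-alone mirror of _analyze_gradients for small batches."""
    n = len(psi)
    probs = [sigmoid(p) for p in psi]

    # Actor term: mean binary cross-entropy between sigmoid(psi) and the labels
    bce = [
        -(y * math.log(s + EPS) + (1 - y) * math.log(1 - s + EPS))
        for s, y in zip(probs, labels)
    ]
    actor = sum(bce) / n

    # Critic terms: prediction error R - sigmoid(psi), alone and weighted by psi
    errors = [y - s for s, y in zip(probs, labels)]
    critic_raw = sum(errors) / n
    critic = sum(e * p for e, p in zip(errors, psi)) / n

    # Binary entropy of the predictions (high means low confidence)
    entropy = -sum(
        s * math.log(s + EPS) + (1 - s) * math.log(1 - s + EPS) for s in probs
    ) / n

    return {
        "actor_component": actor,
        "critic_raw": critic_raw,
        "critic_component": critic,
        "coupling_ratio": abs(actor) / (abs(critic) + EPS),
        "binary_entropy": entropy,
    }


undecided = pacs_diagnostics([0.0, 0.0], [1, 0])   # sigmoid(0) = 0.5 for both samples
confident = pacs_diagnostics([2.0, -2.0], [1, 0])  # logits agree with the labels
```

With all logits at zero the model is maximally uncertain, so both the actor term and the binary entropy sit near ln 2. When the logits agree with the labels, both values drop.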

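# Standard-library and PyTorch imports used by PACSTrainer below.
# Project-specific names (BaseTrainer, ScorableClassifier, SICQLScorer,
# HybridSICQLAdapter, PACSConfig, PACSCoreTrainer, TargetType) come from the
# Stephanie codebase; their import paths are not shown in this listing.
import os
from datetime import datetime
from typing import Any, Callable, Dict, Optional

import torch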

class PACSTrainer(BaseTrainer):
    """
    PACS trainer that implements RLVR as a supervised learning problem.

    It integrates with the SICQL infrastructure by:
    - Using SICQL policy heads as critics
    - Leveraging SICQL verifiers for labels
    - Maintaining a proper RLOO implementation

    Key features:
    - Two scoring modes: "logprob" (actor-based) and "critic" (SICQL-based)
    - RLOO (leave-one-out) regularization
    - Reference model resets
    - Safety mechanisms to prevent model collapse
    """

    def __init__(self, cfg, memory, logger):
        super().__init__(cfg, memory, logger)
        self.cfg = cfg
        self.memory = memory
        self.logger = logger

        # Device management
        self.device = torch.device(
            "cuda" if torch.cuda.is_available() else "cpu"
        )

        # Initialize configuration
        self._init_config(cfg)

        # Initialize ScorableClassifier for prompt type classification
        try:
            self.classifier = ScorableClassifier(
                memory=self.memory,
                logger=self.logger,
                config_path="config/domain/seeds.yaml",
                metric="cosine"
            )
            self.logger.log("ScorableClassifierInitialized", {
                "message": "Successfully initialized ScorableClassifier for prompt type classification"
            })
        except Exception as e:
            self.logger.log("ScorableClassifierError", {
                "error": str(e),
                "message": "Failed to initialize ScorableClassifier, falling back to simple classification"
            })
            self.classifier = None

        # Track training state
        self.best_loss = float("inf")
        self.early_stop_counter = 0
        self.trainer = None  # Will be initialized in train()
        self.pacs_core = None  # Core PACS implementation

        # Log initialization
        self.logger.log(
            "PACSTrainerInitialized",
            {
                "score_mode": self.score_mode,
                "beta": self.beta,
                "group_size": self.group_size,
                "device": str(self.device),
            },
        )

    def _init_config(self, cfg):
        """Initialize training parameters from config"""
        self.score_mode = cfg.get("score_mode", "critic")  # "logprob" or "critic"
        self.beta = cfg.get("beta", 1.0)
        self.group_size = cfg.get("group_size", 8)
        self.max_steps = cfg.get("max_steps", 1000)
        self.lr = cfg.get("lr", 1e-6)
        self.early_stopping_patience = cfg.get("patience", 3)
        self.early_stopping_min_delta = cfg.get("min_delta", 1e-4)
        self.pos_weight = cfg.get("pos_weight", 1.0)
        self.steps_per_reset = cfg.get("steps_per_reset", 200)
        self.dimension = cfg.get("dimension", "alignment")
        self.target_type = cfg.get("target_type", "document")
        self.model_path = cfg.get("model_path", "models/pacs")
        self.model_version = cfg.get("model_version", "v1")

    def _get_verifier(self, dimension: str) -> Callable[[str, str, Optional[Dict]], int]:
        """Get verifier function for the given dimension"""
        if dimension == "math":
            from stephanie.scoring.verifiers import boxed_math_verifier
            return boxed_math_verifier
        elif dimension == "code":
            from stephanie.scoring.verifiers import code_verifier
            return code_verifier
        else:
            # Generic verifier that uses SICQL scores
            def generic_verifier(prompt: str, response: str, meta: Optional[Dict] = None) -> int:
                # In practice, this would use your SICQL system
                from stephanie.scoring.scorable_factory import ScorableFactory
                from stephanie.scoring.transforms.regression_tuner import RegressionTuner

                # Create scorable
                scorable = ScorableFactory.from_text(
                    response,
                    TargetType.DOCUMENT,
                    context=prompt
                )

                # Get score from SICQL; a missing score counts as 0.0
                score = self.memory.scores.get_score(
                    goal_id=meta.get("goal_id") if meta else None,
                    scorable=scorable
                )
                raw_score = score.score if score else 0.0

                # Use tuner if available, then threshold at 0.5 to get a binary label
                tuner = RegressionTuner(dimension=dimension)
                if tuner.is_loaded():
                    normalized = tuner.transform(raw_score)
                    return 1 if normalized > 0.5 else 0
                return 1 if raw_score > 0.5 else 0

            return generic_verifier
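
Every verifier returned by `_get_verifier` follows the same contract: it takes `(prompt, response, meta)` and returns `1` or `0`. As an illustration of that contract only, here is a minimal sketch of a boxed-answer checker. The function `simple_boxed_verifier` and the `meta["answer"]` key are assumptions made for this example; this is not the `boxed_math_verifier` from `stephanie.scoring.verifiers`, whose implementation is not shown in this post.

```python
import re
from typing import Dict, Optional

# Matches \boxed{...} with no nested braces (a deliberate simplification)
_BOXED = re.compile(r"\\boxed\{([^{}]*)\}")


def simple_boxed_verifier(prompt: str, response: str, meta: Optional[Dict] = None) -> int:
    """Hypothetical verifier: 1 if the last \\boxed{...} equals meta['answer'], else 0."""
    if not meta or "answer" not in meta:
        return 0  # nothing to compare against, so treat as a failure
    matches = _BOXED.findall(response)
    if not matches:
        return 0  # the response never committed to a final answer
    return 1 if matches[-1].strip() == str(meta["answer"]).strip() else 0


label_ok = simple_boxed_verifier("What is 2 + 2?", r"So the result is \boxed{4}.", {"answer": "4"})
label_bad = simple_boxed_verifier("What is 2 + 2?", r"So the result is \boxed{5}.", {"answer": "4"})
```

Because the output is a hard 0/1 label, it can feed a binary cross-entropy objective directly, which is how the labels are consumed in `_analyze_gradients`.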

    def _build_policy_adapter(self, dimension: str):
        """Build policy adapter with SICQL integration"""
        from transformers import AutoModelForCausalLM, AutoTokenizer

        # Load base model (same as SICQL uses)
        base_model = "Qwen/Qwen2.5-1.5B"
        actor = AutoModelForCausalLM.from_pretrained(base_model)
        tokenizer = AutoTokenizer.from_pretrained(base_model)

        # For critic mode, load the SICQL policy head for the requested dimension
        # (use the argument rather than the config default in self.dimension)
        critic = None
        if self.score_mode == "critic":
            scorer = SICQLScorer(self.cfg, self.memory, self.logger)
            critic = scorer.get_model(dimension)

        # Create policy adapter
        adapter = HybridSICQLAdapter(
            memory=self.memory,
            actor_lm=actor,
            tokenizer=tokenizer,
            critic_head=critic,
            device=self.device
        )
        return adapter

    def train(self, dataset, dimension: str = "alignment"):
        """
        Train model using proper PACS algorithm with RLOO.

        Args:
            dataset: RLVRDataset containing queries and meta
            dimension: Dimension to train on (for verifier selection)

        Returns:
            Training statistics and model metadata
        """
        self.logger.log("PACSTrainingStarted", {"dimension": dimension})

        # 1. Build policy adapter (with SICQL integration)
        policy = self._build_policy_adapter(dimension)

        # 2. Get verifier for this dimension
        verifier = self._get_verifier(dimension)

        # 3. Create PACS config
        pacs_cfg = PACSConfig(
            score_mode=self.score_mode,
            beta=self.beta,
            group_size=self.group_size,
            max_new_tokens=256,
            temperature=0.6,
            top_p=0.96,
            lr=self.lr,
            weight_decay=0.01,
            grad_clip=1.0,
            steps_per_reset=self.steps_per_reset,
            pos_weight=self.pos_weight,
            log_every=10
        )

        # 4. Create core PACS trainer
        self.pacs_core = PACSCoreTrainer(
            policy=policy,
            cfg=pacs_cfg,
            verifier=verifier,
            logger=self._log_metrics,
            online_training=False
        )

        # 5. Train model
        training_stats = self.pacs_core.train(dataset, max_steps=self.max_steps)

        # 6. Save model and metadata
        meta = self._save_model(policy, dimension, training_stats, dataset=dataset)

        # 7. Log training stats
        self._log_training_stats(dimension, meta)
        self.logger.log(
            "PACSTrainingComplete",
            {
                "dimension": dimension,
                "final_loss": meta["avg_loss"],
                "policy_entropy": meta["policy_entropy"]
            },
        )
        return meta
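
The trainer's docstring refers to RLOO (leave-one-out) over a group of `group_size` sampled responses. How `PACSCoreTrainer` computes this is not shown in this listing, so the following is only a sketch of the standard leave-one-out baseline: each response's score is compared with the mean score of the other responses in its group. The function name `rloo_advantages` is hypothetical.

```python
from typing import List


def rloo_advantages(scores: List[float]) -> List[float]:
    """Leave-one-out advantage: score_i minus the mean of the other scores in the group."""
    g = len(scores)
    if g < 2:
        raise ValueError("RLOO needs at least two samples per group")
    total = sum(scores)
    # (total - s) / (g - 1) is the mean of the group with sample i left out
    return [s - (total - s) / (g - 1) for s in scores]


advantages = rloo_advantages([1.0, 2.0, 3.0])
```

In this example the middle response gets an advantage of zero because it equals the mean of its peers, and the advantages sum to zero across the group. That keeps the signal relative: a response is rewarded only for beating its siblings on the same prompt.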

    def _log_metrics(self, metrics: Dict[str, Any]):
        """Log metrics from PACS core trainer"""
        self.logger.log("PACSMetrics", metrics)

        # Track for early stopping
        if "loss" in metrics:
            if metrics["loss"] < self.best_loss - self.early_stopping_min_delta:
                self.best_loss = metrics["loss"]
                self.early_stop_counter = 0
            else:
                self.early_stop_counter += 1
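
`_log_metrics` updates `best_loss` and `early_stop_counter`, but the check that compares the counter against `early_stopping_patience` does not appear in this listing. The sketch below shows one way such a check could look. The `EarlyStopper` class is hypothetical and uses the same defaults as `_init_config` (`patience=3`, `min_delta=1e-4`).

```python
from dataclasses import dataclass


@dataclass
class EarlyStopper:
    """Hypothetical helper mirroring the counter logic in _log_metrics."""
    patience: int = 3
    min_delta: float = 1e-4
    best_loss: float = float("inf")
    counter: int = 0

    def update(self, loss: float) -> bool:
        """Record a loss value and return True once training should stop."""
        if loss < self.best_loss - self.min_delta:
            # Meaningful improvement: remember it and reset the counter
            self.best_loss = loss
            self.counter = 0
        else:
            self.counter += 1
        return self.counter >= self.patience


stopper = EarlyStopper(patience=2)
decisions = [stopper.update(loss) for loss in (1.0, 0.5, 0.5, 0.6)]
```

If the core trainer only invokes the logger every `log_every` steps (10 in the config above), patience is effectively counted in logging intervals rather than in individual optimizer steps.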

    def _save_model(self, policy, dimension: str, training_stats: Dict[str, Any], dataset=None) -> Dict[str, Any]:
        """Save PACS model with metadata"""
        # Create output directory
        output_dir = os.path.join(self.model_path, dimension)
        os.makedirs(output_dir, exist_ok=True)

        # Save actor model
        actor_path = os.path.join(output_dir, "actor")
        policy.actor.save_pretrained(actor_path)

        # Save critic if in critic mode
        if self.score_mode == "critic" and policy.critic is not None:
            critic_path = os.path.join(output_dir, "critic")
            os.makedirs(critic_path, exist_ok=True)

            # Save SICQL components
            torch.save(policy.critic.encoder.state_dict(), os.path.join(critic_path, "encoder.pt"))
            torch.save(policy.critic.q_head.state_dict(), os.path.join(critic_path, "q_head.pt"))
            torch.save(policy.critic.v_head.state_dict(), os.path.join(critic_path, "v_head.pt"))
            torch.save(policy.critic.pi_head.state_dict(), os.path.join(critic_path, "pi_head.pt"))

        # Calculate policy metrics
        policy_entropy = self._calculate_policy_entropy(policy, dataset, tokenizer=policy.tok)
        policy_stability = self._calculate_policy_stability(policy, dataset=dataset)

        # Build metadata
        meta = {
            "version": self.model_version,
            "dimension": dimension,
            "score_mode": self.score_mode,
            "policy_entropy_calc": policy_entropy,
            "policy_stability_calc": policy_stability,
            "actor_model": "Qwen2.5-1.5B",
            "critic_model": "SICQL" if self.score_mode == "critic" else None,
            "beta": self.beta,
            "group_size": self.group_size,
            "avg_loss": float(training_stats["avg_loss"]),
            "policy_entropy": training_stats["entropy"],
            "policy_stability": training_stats["acc_proxy"],
            "steps": training_stats["steps"],
            "device": str(self.device),
            "model_path": output_dir,
            "timestamp": datetime.now().isoformat(),
            "config": self.cfg if isinstance(self.cfg, dict) else self.cfg.__dict__
        }

        # Split into direct columns vs. extra_data
        db_fields = {
            "model_type": "pacs",